Imagine this: your company’s HR manager receives a follow-up call from a job applicant. The voice is familiar and confident, and it matches a video interview recorded earlier in the week. Everything seems routine until weeks later, when it’s revealed that the “candidate” was an impostor using deepfake audio and that the systems they accessed had been quietly leaking data abroad.

This isn’t science fiction. In 2025, voice cloning and vishing (voice phishing), two of the most pressing dangers of deepfake technology, are no longer niche concerns; they are mainstream cyber threats. Organizations are struggling to adapt to a world in which what we see and hear can no longer be trusted as a reliable source of truth.

The Rise of Deepfake Vishing in Real-World Attacks

On June 30, 2025, the U.S. Department of Justice revealed a massive espionage operation: North Korean operatives had infiltrated over 100 American companies by posing as remote IT workers. Their success hinged on a powerful set of tools: stolen U.S. identities, falsified resumes, and deepfake-enhanced interviews with cloned voices and faces.

These remote “employees” worked unnoticed for months, earning legitimate income and gaining internal access to sensitive networks. In some cases, they were involved in software development, IT administration, or help desk roles with direct access to infrastructure.

This is just one high-profile example of how AI-generated media is being weaponized to bypass hiring controls, impersonate executives, and exploit human trust.

What Is Deepfake Vishing?

Deepfake vishing is a technique where attackers use AI-generated voices to impersonate trusted individuals over the phone, during video calls, or via voice messages.

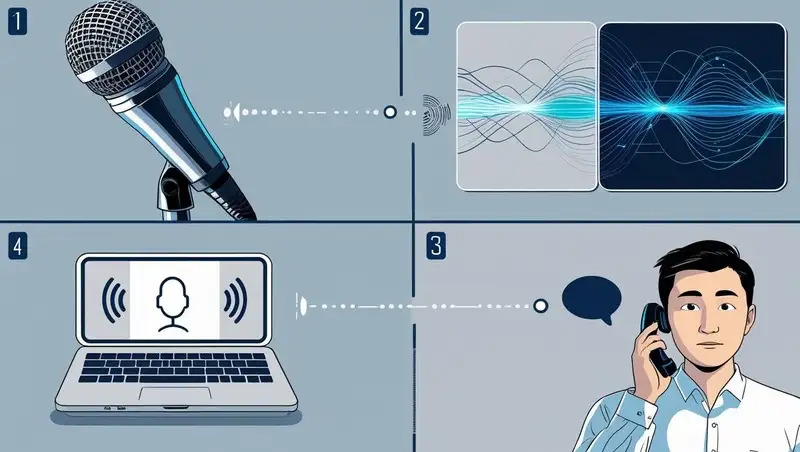

How It Works:

1. Voice Sample Collection: Attackers collect public or leaked audio (e.g., from webinars, podcasts, or YouTube).

2. Model Training: AI tools like ElevenLabs, Resemble.ai, or open-source voice synthesis platforms generate a model of the person’s voice.

3. Real-Time or Pre-Recorded Cloning: Attackers use the voice model to speak live during a call or to pre-record convincing audio messages.

4. Social Engineering: The voice is used to impersonate a company executive, IT technician, or job applicant to extract credentials, access, or money.

Unlike traditional phishing emails, deepfake vishing never passes through email or text filters; it exploits voice-based trust, especially over the phone or in conference calls.

Deepfake Technology Is Now Accessible

What makes this trend so dangerous in 2025 is the widespread accessibility of deepfake creation tools. Just five years ago, cloning someone’s voice required expensive computing resources and hours of source audio.

Today, anyone with a one-minute voice sample can produce a convincing fake using cloud-based tools. Some services even allow real-time voice modulation during Zoom or Skype calls and ordinary phone calls.

These tools have legitimate uses: voiceovers, accessibility, and entertainment. But cybercriminals have quickly adapted them for fraud, espionage, and extortion.

Who’s Being Targeted?

1. Human Resources & Talent Acquisition

- Attackers use fake video interviews and voice calls to land remote jobs using false identities.

- Common targets include IT support, software development, and cloud administration roles that require access but little in-person verification.

2. Finance and Accounting

- Voice clones are used to impersonate CFOs or VPs, requesting wire transfers or changes to vendor payment details.

- These attacks are increasingly sophisticated, sometimes referencing internal project names or deal timelines.

3. Help Desks and Support Lines

- Attackers call internal IT support lines while impersonating employees locked out of accounts.

- They use cloned voices to trick staff into resetting MFA tokens or revealing credentials.

4. Executives and Public Figures

- Publicly available speeches, interviews, and videos make CEOs, board members, and professors especially vulnerable to voice cloning.

- The Columbia University breach, while not confirmed as deepfake-related, has reignited concerns around impersonation in academic institutions.

Psychological Advantage: Why Voice Attacks Work

People naturally trust voices. Voice recognition is one of the oldest forms of interpersonal identification; it’s quick, subconscious, and emotionally loaded.

Attackers exploit this by:

- Triggering urgency: “We’re about to lose the client; approve the transfer now.”

- Faking familiarity: “Hey, it’s Tom from the Boston office. I forgot my password again.”

- Projecting authority: “This is Dr. Warner. Could you please override the security profile on Room 307?”

Traditional phishing relies on spelling tricks, such as look-alike domains, and visual deception. Voice-based deepfakes short-circuit our skepticism by mimicking tone, cadence, and vocal mannerisms.

Deepfake Detection: What’s Working (and What’s Not)

Detecting voice deepfakes is significantly harder than detecting video deepfakes. Most people cannot distinguish a cloned voice from a real one without specialized tools.

Current Detection Strategies:

1. Behavioral Biometrics: Analyzes vocal rhythm, stress patterns, and pauses that AI struggles to mimic. It works best when combined with continuous authentication systems.

2. Liveness Testing: Challenges users with real-time interactions that are hard for prerecorded or synthetic voices to pass.

3. Voiceprint Authentication: Uses biometric markers to match a user’s voice with an enrolled template. It is effective but requires clean samples, and it cannot stop impersonation if the attacker already has access to the account.

4. Audio Forensics: Post-incident tools analyze waveform anomalies, compression signatures, and artifacts to identify manipulation.

These forensic tools can support investigations, but they are not a preventive measure.
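To make the voiceprint and liveness strategies above more concrete, here is a minimal Python sketch that layers the two. It assumes speaker embeddings (fixed-length vectors) are produced elsewhere by some speaker-recognition model, and that the caller’s spoken reply has already been transcribed. The threshold, time limit, word list, and all function and class names are hypothetical values chosen for illustration, not any vendor’s API.

```python
import math
import secrets
import time
from dataclasses import dataclass, field

# Hypothetical, illustrative settings; real deployments tune these.
MATCH_THRESHOLD = 0.75          # minimum cosine similarity to the enrolled template
CHALLENGE_TTL_SECONDS = 10.0    # how long the caller has to repeat the phrase
WORDS = ["amber", "river", "falcon", "copper", "meadow", "lantern", "orbit", "willow"]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length speaker embeddings."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def voiceprint_matches(enrolled: list[float], sample: list[float]) -> bool:
    """Voiceprint check: is the live sample close enough to the enrolled template?"""
    return cosine_similarity(enrolled, sample) >= MATCH_THRESHOLD


@dataclass
class LivenessChallenge:
    """A freshly generated phrase the caller must repeat before it expires."""

    phrase: str
    issued_at: float = field(default_factory=time.monotonic)

    @classmethod
    def new(cls, n_words: int = 4) -> "LivenessChallenge":
        # secrets.choice picks unpredictable words, so a pre-recorded clip cannot anticipate them.
        return cls(" ".join(secrets.choice(WORDS) for _ in range(n_words)))

    def passed(self, transcript: str, answered_at: float) -> bool:
        in_time = answered_at - self.issued_at <= CHALLENGE_TTL_SECONDS
        return in_time and transcript.strip().lower() == self.phrase


def verify_caller(
    enrolled: list[float],
    sample: list[float],
    challenge: LivenessChallenge,
    transcript: str,
    answered_at: float,
) -> bool:
    """Layered check: the voice must match AND the live challenge must be answered in time."""
    return voiceprint_matches(enrolled, sample) and challenge.passed(transcript, answered_at)


# Usage with toy 3-dimensional embeddings (real embeddings have many more dimensions).
challenge = LivenessChallenge.new()
print(verify_caller([0.1, 0.9, 0.2], [0.12, 0.88, 0.25], challenge, challenge.phrase, time.monotonic()))
```

A design note: a random phrase mainly defeats replayed, pre-recorded audio. A real-time voice clone may still repeat it convincingly, and a good enough clone may clear the similarity threshold, which is why neither check should be the only gate.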

Prevention: Building Deepfake Resilience

No single tool can prevent deepfake phishing. Instead, organizations must implement multi-layered defense strategies that include technology, training, and process design.

1. Awareness Training

- Train employees to verify unusual voice requests using a second channel (Slack, SMS, in person).

- Incorporate deepfake simulations into security awareness programs.

2. Multi-Channel Authentication

- Don’t rely on voice alone for approvals, transfers, or password resets.

- Use secure apps, encrypted chat, or token-based verification.
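One common form of token-based verification is a time-based one-time password (TOTP, defined in RFC 6238), the six-digit code shown by authenticator apps. The sketch below uses only Python’s standard library to show how a second-channel code might be checked before a voice-requested transfer or reset is approved. It is illustrative: the function names are hypothetical, the secret is the RFC’s published test key, and a production system should rely on a vetted library and secure secret storage.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over an 8-byte big-endian counter, then dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte choose a 4-byte slice
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF  # clear the top bit
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP whose counter is the number of 30-second steps since the Unix epoch."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1, digits: int = 6) -> bool:
    """Accept the code for the current step, or up to `window` steps either side for clock drift."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        candidate = totp(secret, now + drift * step, step, digits)
        if hmac.compare_digest(candidate, submitted):  # constant-time comparison
            return True
    return False


# RFC 6238 test key (ASCII "12345678901234567890"); never use a fixed secret in practice.
SECRET = b"12345678901234567890"
print(totp(SECRET, at=59, digits=8))  # prints 94287082, the RFC 6238 SHA-1 test vector for T=59
```

Because the code is derived from a secret stored on the approver’s enrolled device, a cloned voice alone cannot produce it; the attacker would also need that device or its secret.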

3. Job Candidate Verification

- Conduct in-person or secure biometric onboarding for remote hires.

- Verify government-issued identity documents through an identity verification service before granting system access.
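Candidate verification tends to work best as a hard gate in the provisioning workflow rather than an informal recruiter checklist. This hypothetical Python sketch blocks system access until both checks recommended above are recorded; the `Check` names and the `NewHire` record are illustrative assumptions, not any HR or identity platform’s API.

```python
from dataclasses import dataclass, field
from enum import Enum


class Check(Enum):
    """Hypothetical pre-access checks mirroring the two candidate-verification steps."""

    GOV_ID_VERIFIED = "government-issued ID verified through an identity verification service"
    ONBOARDING_VERIFIED = "in-person or secure biometric onboarding completed"


REQUIRED_CHECKS = frozenset(Check)  # every member of Check is mandatory


@dataclass
class NewHire:
    name: str
    completed: set[Check] = field(default_factory=set)


def missing_checks(hire: NewHire) -> frozenset[Check]:
    """Checks still outstanding for this hire."""
    return REQUIRED_CHECKS - hire.completed


def may_grant_system_access(hire: NewHire) -> bool:
    """Access is provisioned only after every required check is recorded."""
    return not missing_checks(hire)


hire = NewHire("Remote applicant")
hire.completed.add(Check.GOV_ID_VERIFIED)
print(may_grant_system_access(hire), [c.value for c in missing_checks(hire)])
```

Modeling the checks as an enum means adding a new requirement later automatically makes it mandatory for every hire still in the pipeline.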

4. Help Desk Protocol Reinforcement

- Enforce strong caller verification protocols, including employee ID questions, callback policies, and time-delayed actions for sensitive changes.
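The help desk controls above can be expressed as an explicit decision rule. The following Python sketch is one possible shape, assuming a hypothetical employee directory that stores a verified phone number for each employee ID; the employee ID question is reduced here to a directory lookup, and the action names and four-hour hold period are illustrative, not recommended values.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical policy values for illustration only.
SENSITIVE_ACTIONS = {"mfa_reset", "password_reset", "payment_details_change"}
HOLD_PERIOD = timedelta(hours=4)


@dataclass
class Employee:
    employee_id: str
    directory_phone: str  # number on file in the internal directory


@dataclass
class SupportRequest:
    action: str
    claimed_employee_id: str
    callback_number: str       # number the help desk actually called back
    callback_confirmed: bool   # employee confirmed the request on that callback
    requested_at: datetime


def decide(request: SupportRequest, directory: dict[str, Employee], now: datetime) -> str:
    """Apply ID, callback, and time-delay rules in order; the first failing rule wins."""
    employee = directory.get(request.claimed_employee_id)
    if employee is None:
        return "deny: unknown employee ID"
    if request.callback_number != employee.directory_phone:
        return "deny: callback must go to the directory number, not one the caller supplied"
    if not request.callback_confirmed:
        return "hold: awaiting callback confirmation"
    if request.action in SENSITIVE_ACTIONS and now - request.requested_at < HOLD_PERIOD:
        return "hold: sensitive change is inside its time-delay window"
    return "approve"


directory = {"E100": Employee("E100", "+1-555-0100")}
t0 = datetime(2025, 7, 1, 9, 0)
req = SupportRequest("mfa_reset", "E100", "+1-555-0100", True, t0)
print(decide(req, directory, t0 + timedelta(hours=1)))
print(decide(req, directory, t0 + timedelta(hours=5)))
```

The key design choice is that the callback always goes to the number on file, never to a number the caller provides, so a cloned voice on an attacker-controlled line cannot complete the loop.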

5. Executive Threat Modeling

- Build impersonation scenarios into executive security protocols.

- Limit the availability of executives’ full-length voice recordings in public forums.

Dangers of Deepfake Technology and Threat Scenarios

Healthcare

Hospitals and health tech companies are experimenting with virtual care and AI-driven diagnostics, which could open the door to voice-based prescription fraud or physician impersonation during remote consultations. A deepfake audio command could trigger unauthorized medical orders or expose patient data.

Education

Universities with open digital lectures and administrative livestreams are a goldmine for voice sample harvesting. Combined with internal access, attackers could impersonate faculty or administrators to manipulate student records, payroll data, or academic transcripts.

Financial Services

We’ve already seen attackers impersonate CFOs to redirect wire transfers. Attackers are now also targeting customer service departments, posing as “confused customers” to circumvent standard KYC (Know Your Customer) protocols.

Regulatory Landscape: Are Laws Catching Up?

One of the greatest challenges in combating deepfake-enabled cybercrime is the lack of legal clarity and enforcement. While countries like the U.S. have made progress in prosecuting cybercriminals using existing fraud and identity theft laws, specific deepfake legislation remains limited.

As of mid-2025, only a few U.S. states (e.g., California, Texas, and Virginia) have enacted laws directly addressing synthetic media, and these laws mostly focus on political misinformation or non-consensual adult content. They don’t fully account for deepfake impersonation in corporate environments, especially in cross-border or B2B contexts.

The Federal Trade Commission (FTC) has recently announced a push to investigate synthetic media’s use in scams, and the Cybersecurity and Infrastructure Security Agency (CISA) is expected to issue new guidance for combating AI-based threats in the workplace.

The European Union’s AI Act includes early transparency rules that require synthetic audio and video to be clearly labeled, which may eventually push providers of deepfake tools to add visible watermarks or otherwise disclose that content is synthetic. For now, however, enforcement mechanisms are sparse.

Conclusion: Regulation is lagging, and until laws catch up, responsibility rests heavily on companies and individuals to build defensive awareness and technical safeguards.

Future Threat: AI + Deepfake Automation

Security experts warn that future attacks may combine:

- Large Language Models (LLMs) that generate believable scripts,

- Real-time voice modulation, and

- Synthetic video avatars that mimic facial expressions.

With this combination, a single attacker could automate hundreds of convincing calls per day, targeting sales teams, IT admins, and executives across industries.

This evolution may render traditional social engineering defenses obsolete unless organizations invest in continuous identity assurance systems and AI-powered threat detection.

What CISOs, Recruiters, and IT Teams Should Do Now

- Map out voice-based attack scenarios relevant to your industry.

- Audit hiring pipelines and remote onboarding workflows for identity verification gaps.

- Limit access to internal systems until thorough employee validation is complete.

- Incorporate deepfake resilience into your incident response plans.

- Advocate for legal and policy frameworks to define and criminalize malicious deepfake use.

Final Thoughts: Rebuilding Trust in Human Communication

In 2025, we’ve entered a new phase of cyberwarfare, one in which trust in voice, image, and even identity can be easily manipulated by machines. Deepfake phishing exploits not just technology but our tendency to believe what we hear.

Organizations must learn to verify, not just listen. And cybersecurity must evolve from protecting data to protecting human communication itself.

If you are not preparing for the impact of deepfakes, you are already at a disadvantage.
