Social engineering attacks have long been a primary method for cybercriminals to access confidential information. Traditionally, these attacks relied on exploiting human psychology rather than technological vulnerabilities. Today, however, the tactics behind these attacks are evolving rapidly, driven largely by advances in artificial intelligence (AI). This post explores how AI is transforming social engineering attacks, why these changes matter for your business, and the strategies you can adopt to mitigate these emerging threats.

Introduction

Social engineering manipulates individuals into divulging confidential information or granting unauthorized access to systems. Although the underlying objective remains consistent—to exploit human trust—there has been a dramatic shift in the methods employed. Gone are the days when simple tricks sufficed. Now, AI is at the forefront, enabling cybercriminals to execute more sophisticated, faster, and harder-to-detect attacks. As businesses become increasingly digitized, understanding this evolution and preparing for its implications has never been more crucial.

The Evolution of Social Engineering Attacks

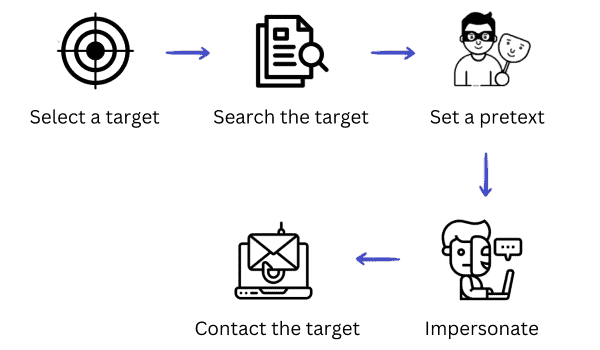

Historically, social engineering attacks relied on rudimentary techniques that preyed on human error and trust. These methods often involved straightforward impersonation or poorly executed phishing scams that many individuals could eventually learn to recognize. However, integrating AI into these tactics has dramatically altered the landscape.

AI-driven tools have enabled attackers to create hyper-realistic simulations of legitimate communication, from videos and audio to emails. By leveraging machine learning algorithms, cybercriminals can now produce content that is not only convincing but also highly personalized, making it difficult for even the most cautious individuals to discern authenticity from deception.

Impersonation Attacks: From Silicone Masks to Deepfakes

Traditional Impersonation Tactics

In the past, impersonation attacks involved physical disguises or other low-tech deceptions. For example, fraudsters have used silicone masks and crafted fake identities to impersonate trusted figures. One notorious case involved individuals posing as high-ranking officials, such as a French government minister, during video calls. These impersonators succeeded in scamming victims out of significant sums by exploiting the trust that the visual medium typically engenders.

The Advent of Video Deepfakes

Today, the landscape of impersonation attacks has changed dramatically with the advent of video deepfakes. Using advanced AI algorithms, attackers can create videos that depict people saying or doing things they never actually did. A striking example occurred in Hong Kong, where a deepfake video of a chief financial officer (CFO) was used to trick a colleague into transferring roughly $25 million. Deepfake technology makes it very hard to distinguish genuine video from fabricated video, which increases the risk of fraud.

Because deepfakes can mimic real-life interactions with startling accuracy, verification methods that rely on seeing or hearing someone, such as a video conference, may no longer be sufficient to establish trust. As this technology becomes more accessible, businesses must recognize that visual authenticity does not guarantee legitimacy.

Voice Phishing: From Traditional Vishing to AI-Enhanced Cloning

Traditional Voice Phishing Techniques

Voice phishing, or “vishing,” has long been a staple in the cybercriminal’s toolkit. Traditionally, attackers would make fraudulent phone calls, pretending to be someone in authority—often a senior executive or a representative from a trusted institution. The goal was to pressure the victim into providing sensitive information or authorizing financial transactions without proper verification.

The Transformation with AI-Enhanced Voice Cloning

The emergence of AI-enhanced voice cloning has revolutionized the vishing landscape. Modern AI systems can replicate a person’s voice using only a few seconds of recorded audio. This capability means that attackers can now simulate the voice of someone you know—a trusted colleague, friend, or family member. Imagine receiving a phone call that sounds exactly like your boss, urging you to complete a sensitive transaction immediately. This scenario is not far from reality, as there have been documented cases where AI-generated voice clones were used to manipulate victims into transferring substantial amounts of money.

The realism achieved by AI in voice cloning makes it increasingly difficult for individuals to detect deception. Even if an individual is aware of the possibility of a scam, the urgency and authenticity projected by the cloned voice can override caution, leading to potentially disastrous outcomes for both personal and business finances.

The Changing Landscape of Phishing Emails

The Old Approach to Phishing Emails

Phishing emails have been a persistent threat in the cybersecurity world for decades. In the early days, these emails were often characterized by glaring signs of inauthenticity—poor grammar, generic greetings, and outlandish offers such as lottery wins. As a result, many recipients learned to recognize and ignore these fraudulent messages.

AI-Driven Personalization in Phishing

Integrating AI into phishing email campaigns has marked a significant shift in their sophistication. Today’s AI-driven phishing emails are meticulously crafted to mirror the tone, style, and formatting of legitimate communication from trusted sources. These emails can be highly personalized, incorporating details that make them seem authentic and relevant to the recipient.

For example, an AI might analyze a target’s social media profiles or previous email interactions to tailor a phishing message that appears to come from a known contact. This level of personalization increases the attack’s success rate and undermines traditional phishing indicators, making it much more challenging for individuals and automated filters to detect fraudulent emails.

The Broader Impact of AI on Social Engineering

The implications of AI-powered social engineering attacks extend far beyond the immediate risk of financial loss or data breaches. The sheer speed and scale at which these attacks can be deployed mean that even well-trained individuals are at risk of being caught off guard. AI technology empowers cybercriminals to generate thousands of personalized attack vectors in a fraction of the time it would take to craft them manually.

Speed and Scale

One of the most alarming aspects of AI-driven attacks is their ability to operate at an unprecedented scale. AI tools can produce and distribute large volumes of tailored phishing emails or impersonation videos in very little time. This rapid deployment can overwhelm security teams and filters, allowing attackers to hit multiple targets simultaneously before defenses can adapt.

Unparalleled Realism

The hyper-realism afforded by AI—whether through deepfake videos or cloned voices—poses a significant challenge for traditional verification methods. When the fake is indistinguishable from the real, individuals are more likely to trust the information presented, increasing the likelihood of successful fraud. This reality necessitates rethinking trust protocols in personal and professional communications.

Global Reach

Another critical factor is the global reach of AI-powered attacks. Modern AI tools can generate content in multiple languages, enabling attackers to target a global audience. This means regions with less exposure to traditional phishing tactics or fewer cybersecurity resources are becoming prime targets. The international scope of these attacks further complicates efforts to mitigate risks and underscores the need for a coordinated, worldwide response to cybersecurity threats.

Strategies to Combat AI-Powered Social Engineering

In the face of these rapidly evolving threats, businesses must adopt a proactive and comprehensive approach to cybersecurity. Here are several key strategies to help mitigate the risks associated with AI-powered social engineering attacks:

Strengthening Employee Awareness and Training

The human element remains one of the most critical factors in preventing social engineering attacks. Regular training sessions and simulations can help employees recognize and respond to the sophisticated tactics employed by modern cybercriminals. By exposing staff to realistic scenarios, organizations can foster a culture of vigilance and ensure that employees are equipped with the knowledge to question suspicious communications.

Employee training should emphasize the importance of skepticism when receiving unexpected requests through email, phone, or video calls. Encouraging employees to verify the authenticity of such communications through known and trusted channels is essential. As attackers become more adept at mimicking legitimate sources, ongoing education will be critical to keeping teams informed of the latest tactics.

Enhancing Verification Processes

Given the sophistication of AI-powered attacks, relying solely on conventional verification methods is no longer sufficient. Businesses need to implement enhanced verification processes for sensitive communications and financial transactions. This could involve multi-factor authentication, secure callback procedures, or even biometric verification in specific contexts.

For instance, if a request for a significant financial transaction is received via email or phone, it should be verified through a secondary, independent channel. This extra layer of scrutiny can help ensure that even if an attacker manages to spoof a trusted contact, their efforts will be thwarted by an established verification process.
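The callback rule described above can be written down as an explicit policy, so it does not depend on an employee's judgment in the moment. The following is a minimal sketch, not a complete control: the threshold, the list of risky channels, and the names `PaymentRequest`, `needs_out_of_band_check`, and `callback_number` are all hypothetical and would need to reflect your own organization's rules.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical policy values; each organization would set its own.
CALLBACK_THRESHOLD = 10_000                   # amount that always triggers a callback
RISKY_CHANNELS = {"email", "phone", "video"}  # channels an attacker can spoof


@dataclass
class PaymentRequest:
    requester: str   # who the request claims to come from
    channel: str     # channel the request arrived on
    amount: float
    new_payee: bool  # True if this beneficiary has never been paid before
    urgent: bool     # True if the request pushes for immediate action


def needs_out_of_band_check(req: PaymentRequest) -> bool:
    """Return True when the request must be confirmed on a second channel."""
    if req.channel not in RISKY_CHANNELS:
        return False
    return req.amount >= CALLBACK_THRESHOLD or req.new_payee or req.urgent


def callback_number(req: PaymentRequest, directory: Dict[str, str]) -> Optional[str]:
    """Look up the number to call in an internal directory.

    The number is never taken from the request itself, because an attacker
    controls everything in the message they sent.
    """
    return directory.get(req.requester)
```

The important design choice is in `callback_number`: the contact details used for verification come from a source the attacker cannot edit. A request that supplies its own "call me back on this number" should be treated as unverified.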

Investing in Advanced Cybersecurity Tools

The rapid evolution of AI technology means that cybersecurity defenses must also adapt. Modern cybersecurity solutions increasingly incorporate AI and machine learning to detect anomalies and identify potential threats before they can inflict damage. These tools can analyze vast amounts of data to recognize patterns indicative of social engineering attacks, such as unusual email behavior or atypical communication requests.

Investing in such advanced security systems can provide organizations with a proactive defense against emerging threats. By leveraging the same technology that cybercriminals use, businesses can stay one step ahead, intercepting and neutralizing threats in real time.
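To make "unusual email behavior" more concrete, here is one simple signal such tools may look for: a sender domain that is almost, but not exactly, a domain you trust. This is an illustrative sketch using only Python's standard library. Commercial products combine many more signals, and the allowlist, the 0.85 similarity threshold, and the function names are assumptions chosen for the example.

```python
from difflib import SequenceMatcher
from email.utils import parseaddr

# Hypothetical allowlist of domains the organization really corresponds with.
TRUSTED_DOMAINS = {"example.com", "examplebank.com"}


def lookalike_of(domain, threshold=0.85):
    """Return the trusted domain that `domain` closely resembles, or None.

    An exact match is trusted, so it is not reported as a lookalike.
    """
    domain = domain.lower()
    if domain in TRUSTED_DOMAINS:
        return None
    for trusted in sorted(TRUSTED_DOMAINS):
        if SequenceMatcher(None, domain, trusted).ratio() >= threshold:
            return trusted
    return None


def flag_sender(from_header):
    """Return a warning string for a suspicious From header, or None."""
    _display_name, address = parseaddr(from_header)
    domain = address.rpartition("@")[2]
    match = lookalike_of(domain)
    if match:
        return f"sender domain '{domain}' resembles trusted domain '{match}'"
    return None
```

For example, `flag_sender("CFO <cfo@examp1e.com>")` returns a warning because `examp1e.com` differs from `example.com` by a single character, while a message from `example.com` itself passes. A check like this catches only one narrow trick, which is why it belongs alongside training and verification procedures rather than in place of them.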

Preparing for a Global Threat Environment

The global nature of AI-powered social engineering attacks necessitates a broader perspective on cybersecurity. Organizations should not only focus on internal defenses but also consider how to protect their external communications and interactions with international partners. This might involve collaborating with industry peers, participating in information-sharing networks, and staying abreast of global cybersecurity trends.

As attackers become more adept at exploiting vulnerabilities across borders, businesses must adopt a holistic approach encompassing local and international dimensions. This comprehensive strategy will be crucial in mitigating the wide-ranging risks posed by these sophisticated attacks.

Building a Culture of Cyber Vigilance

Creating a robust defense against AI-powered social engineering attacks involves more than just technical measures; it requires building a culture of cyber vigilance throughout the organization. This means fostering an environment where every employee understands the importance of cybersecurity and feels empowered to question and verify unusual requests.

Regular updates on emerging threats, open communication channels with IT and security teams, and a clear protocol for reporting suspicious activities can significantly reduce the risk of a successful attack. A culture of cyber vigilance not only strengthens internal defenses but also enhances the organization’s overall resilience in the face of evolving cyber threats.

The Future of Social Engineering and Cybersecurity

As AI continues to evolve, so will cybercriminals’ tactics. The intersection of AI and social engineering represents both a challenge and an opportunity for cybersecurity professionals. On one hand, the very technology that enables sophisticated attacks can also be harnessed to create more effective defense mechanisms. On the other hand, the increasing realism and scale of these attacks mean that businesses must remain agile and vigilant.

In the future, we will likely see even greater AI integration in offensive and defensive cybersecurity strategies. This dynamic landscape will require continuous learning, adaptation, and innovation. Organizations that invest in advanced technologies, foster a culture of awareness, and proactively update their security protocols will be best positioned to defend against tomorrow’s threats.

Conclusion

AI is fundamentally reshaping the landscape of social engineering attacks. From hyper-realistic deepfake videos and AI-enhanced voice cloning to personalized phishing emails, the techniques employed by cybercriminals are more convincing and scalable than ever before. The implications for businesses are clear: traditional security measures and employee training programs must evolve to keep pace with these sophisticated threats.

Organizations must focus on several key areas to combat the risks associated with AI-powered social engineering. Enhancing employee training and awareness, implementing robust verification processes, investing in advanced cybersecurity tools, and adopting a global perspective on threat mitigation are all critical components of a comprehensive defense strategy. By taking these proactive steps, businesses can protect their sensitive information and build a resilient framework capable of withstanding cyber adversaries’ evolving tactics.

In a world where the line between reality and fabrication is increasingly blurred, staying informed and vigilant is paramount. Cybersecurity is no longer solely the responsibility of the IT department; it is a collective effort that involves every member of an organization. Regularly updating your cybersecurity training to address AI-powered threats and maintaining an open dialogue about potential risks are both essential in today’s digital age.

Preparation and adaptation are the keys to successfully defending against AI-driven social engineering attacks. As technology advances, so must our defenses. By understanding the evolving nature of these threats and implementing robust, proactive measures, businesses can protect themselves from the financial and reputational damage that cybercriminals seek to inflict.

At Hoplon InfoSec, we are committed to helping organizations navigate this complex and ever-changing cybersecurity landscape. We encourage you to assess your security protocols and update your training programs to include the latest insights on AI-powered social engineering attacks. Staying informed, staying vigilant, and continuously adapting your defenses will be critical to ensuring your business remains secure in the face of these modern dangers.

Have you updated your cybersecurity training to include AI-powered threats? We invite you to share your thoughts and experiences, and if you require further assistance, please do not hesitate to contact us. Together, we can build a safer digital future where innovation and security go hand in hand.