A recent investigation by Cybernews has uncovered a troubling and pervasive issue within the Apple iOS ecosystem—a significant number of applications are leaking sensitive secrets directly through their code. This report, based on an analysis of 156,000 iOS apps (about 8% of the App Store), revealed that over 71% of them contain hardcoded credentials. These aren’t just minor flaws; they include access keys to cloud storage, API tokens, database credentials, and other secrets that can be exploited by malicious actors.

In an era where digital privacy is more crucial than ever, this discovery serves as a wake-up call for developers, businesses, and users alike. This blog post will explore the full extent of the problem, what it means for the future of app security, and how we can respond effectively.

What Are Hardcoded Secrets?

Hardcoded secrets are sensitive pieces of data—like API keys, passwords, OAuth tokens, or encryption keys—that are directly embedded in a software application’s source code. In the case of iOS apps, this means that these secrets are part of the application bundle that gets distributed through the App Store. Anyone with basic reverse engineering skills can extract them.
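To see why extraction takes so little skill, consider that string literals generally survive compilation as readable bytes inside the binary. The sketch below mimics the Unix `strings` utility on a made-up byte blob; `printable_strings`, `fake_binary`, and the key value are hypothetical names and data used only for illustration, not part of the Cybernews methodology.

```python
import re

def printable_strings(blob: bytes, min_len: int = 8) -> list[str]:
    """Return runs of printable ASCII at least min_len bytes long."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [m.group().decode("ascii") for m in pattern.finditer(blob)]

# Hypothetical stand-in for a compiled binary: non-printable bytes around a literal.
fake_binary = b"\x00\x01\xfe\xca" + b"api_key=EXAMPLE-NOT-A-REAL-KEY" + b"\x00\x90\x90\xff"

print(printable_strings(fake_binary))
# prints ['api_key=EXAMPLE-NOT-A-REAL-KEY']
```

The literal comes back untouched, which is the core of the problem: compiling an app does not hide the strings inside it.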

Why is this dangerous? Because these secrets often grant access to external services such as cloud storage platforms, authentication servers, and internal APIs. If a bad actor gets hold of these credentials, they can potentially manipulate the app’s backend, steal user data, upload malicious content, or even hijack entire accounts.

The problem becomes even more severe when multiple apps reuse the same credentials or when those credentials have broad privileges, such as admin access to a cloud bucket or database.

The Cybernews Findings: A Snapshot of the Problem

The Cybernews research team analyzed a large subset of the apps available on Apple’s App Store using automated static analysis and reverse engineering techniques. What they found was alarming.

More than 110,000 of the 156,000 apps analyzed contained at least one hardcoded secret. If the sample is representative, a large majority of everyday iOS apps, including some that handle health, finance, and communication, might be exposing sensitive backend systems to the world. On average, each vulnerable app had over five secrets exposed in the codebase.

What kind of secrets are being leaked? Among the most commonly exposed were Firebase database URLs, Amazon S3 bucket links, Google Cloud project identifiers, Facebook app tokens, and even full OAuth client secrets. Some apps were leaking multiple combinations of these, opening themselves up to a range of sophisticated attacks.

Why Are Developers Hardcoding Secrets?

Understanding the “why” behind this trend is crucial to solving it. In most cases, hardcoded secrets aren’t included out of malice or neglect. They’re often the result of shortcuts taken during development or a lack of awareness about secure coding practices.

Developers working on tight deadlines may find it quicker to embed an API key directly into the app’s code for testing or debugging. In smaller teams or freelance projects, security best practices are often overlooked in favor of delivering features. Additionally, many developers learn by copying code snippets from online forums or GitHub repositories, which sometimes contain hardcoded secrets in example code.

Lack of proper build pipelines and secret management tools is another contributing factor. Without automated scans or environment-based configurations, it’s easy for secrets to be committed to version control systems and shipped with the final product.
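As a rough illustration of environment-based configuration, the sketch below reads a secret from the environment and fails loudly when it is missing, rather than falling back to a value in the source. It is meant for server-side code or build scripts; copying an environment variable into the app binary at build time would still ship the secret. `load_secret`, `MissingSecretError`, and `STORAGE_API_KEY` are hypothetical names.

```python
import os

class MissingSecretError(RuntimeError):
    """Raised when a required secret is absent from the environment."""

def load_secret(name: str, env=os.environ) -> str:
    # Read from the environment mapping; never fall back to a literal in code.
    value = env.get(name, "").strip()
    if not value:
        raise MissingSecretError(f"{name} is not set; refusing to use a hardcoded default")
    return value

# Demo with an explicit mapping so the example runs without touching the real environment.
demo_env = {"STORAGE_API_KEY": "value-injected-by-ci"}
print(load_secret("STORAGE_API_KEY", demo_env))  # prints value-injected-by-ci
```

Failing at startup makes a missing secret visible immediately, which removes the temptation to paste a "temporary" key into the code.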

The Real-World Implications

When an iOS app leaks its secrets, the consequences can be devastating. One of the most dangerous types of leaks involves access credentials to cloud storage platforms. Imagine a social media app that stores all user-uploaded photos and videos in an Amazon S3 bucket. If that bucket’s credentials are hardcoded and exposed, an attacker could download, delete, or replace all content in that storage.

Firebase database URLs are another major area of concern. A URL on its own is only an address, but if the database’s security rules don’t require authentication, attackers can use it to view and manipulate entire datasets, including chat logs, user credentials, personal profiles, and payment history.

Then there are the monetization risks. Advertising IDs, Facebook tokens, and Google Cloud API keys can be used to generate fake ad clicks, impersonate the app, or drain the developer’s cloud service budget.

Several past incidents illustrate the dangers of poorly protected mobile applications. The infamous XcodeGhost incident in 2015 allowed attackers to insert malware into hundreds of iOS apps through a tampered version of Apple’s Xcode IDE. Though it wasn’t about hardcoded secrets, it showed just how vulnerable mobile development environments can be. Similarly, Pegasus spyware demonstrated how zero-day exploits in iOS and its built-in apps could be used to access virtually all data on a device.

How Attackers Exploit These Secrets

When a malicious actor finds an app with hardcoded credentials, they don’t necessarily need to act immediately. Many attackers run automated scanning tools that extract secrets from hundreds or thousands of apps. These secrets are then stored in databases and tested later—sometimes months down the line—when the app has grown in popularity.

Even if the credentials are later rotated or disabled, the damage might already be done. Data could have been copied, or users might have been phished using fake login portals created with the exposed OAuth IDs. In worst-case scenarios, the entire app could be cloned and modified to redirect data to an attacker-controlled server.

Attackers use open-source tools like Frida, Objection, MobSF, and class-dump to inspect iOS apps and search for embedded secrets. class-dump and MobSF support static analysis of the app bundle, while Frida and Objection instrument the app at runtime. Once secrets are found, attackers often try brute-force combinations of them, test for misconfigured permissions, or use them to enumerate APIs and endpoints for further exploitation.
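Searching for embedded secrets usually comes down to pattern matching over strings pulled from the app. The sketch below shows the idea with a few commonly cited credential formats. The patterns are illustrative and far from exhaustive, and `PATTERNS`, `classify`, and the sample values are assumptions made for this example. Defenders can run the same kind of check against their own builds before release.

```python
import re

# Illustrative patterns based on commonly cited credential formats; not exhaustive.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "google_api_key": re.compile(r"\bAIza[0-9A-Za-z_\-]{35}"),
    "firebase_db_url": re.compile(r"https://[a-z0-9-]+\.firebaseio\.com"),
    "s3_bucket_host": re.compile(r"\b[a-z0-9.-]+\.s3\.amazonaws\.com\b"),
}

def classify(strings):
    """Return (label, matched_text) pairs for every pattern hit."""
    findings = []
    for s in strings:
        for label, pattern in PATTERNS.items():
            for match in pattern.finditer(s):
                findings.append((label, match.group()))
    return findings

sample = [
    "AKIAIOSFODNN7EXAMPLE",  # placeholder key ID used in AWS documentation
    "https://example-app.firebaseio.com",
    "https://example-uploads.s3.amazonaws.com/photo.jpg",
    "CFBundleIdentifier",  # ordinary string, should not match
]

for label, value in classify(sample):
    print(label, value)
```

A match only says that a string looks like a credential. Whether it is live, and what it can access, depends on how the backing service is configured.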

What Secrets Do iOS Apps Leak the Most?

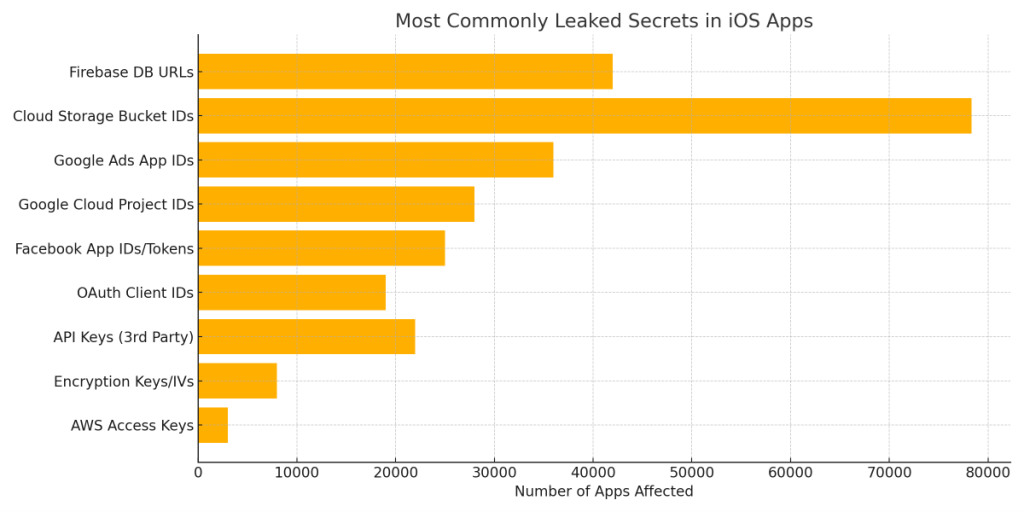

One of the most critical parts of the Cybernews investigation was identifying exactly which types of secrets were being exposed most frequently in iOS apps. Understanding this helps developers prioritize fixes and gives users insight into what kind of data might be at risk.

One of the most commonly leaked secrets was the Firebase Realtime Database URL. Over 42,000 apps were found to contain references to live Firebase databases, many without proper authentication or access controls. This could allow attackers to access sensitive information such as chat messages, user profiles, or internal app configurations.

Even more widespread were cloud storage bucket identifiers, present in more than 78,000 apps. These identifiers, often linked to Google Cloud or Amazon S3, can be used to discover and access content stored online. If permissions aren’t tightly set, attackers can browse, download, or delete critical files.

Also frequently exposed were Google Ads App IDs, used for monetization and analytics, followed by Google Cloud Project IDs, which could be leveraged for reconnaissance or abuse of API quotas.

The investigation also revealed that many apps expose Facebook App IDs, OAuth Client IDs, and third-party API keys. These secrets often enable integrations for login, analytics, or communication, but when misused, they can be the starting point for impersonation or phishing attacks.

Most alarming, however, were instances of encryption keys, initialization vectors, and even AWS access keys embedded directly into the app code. Although less common, these leaks pose the most significant risk, as they can lead to full control over backend infrastructure or total compromise of encrypted user data.

The chart at the top of this section summarizes the most commonly leaked secrets in iOS apps.

As this chart illustrates, the issue spans a wide range of services and technologies. The high numbers aren’t limited to niche apps either—many popular and widely downloaded apps have been found with similar vulnerabilities. While not every secret leads to a direct exploit, their exposure dramatically increases the attack surface.

Best Practices for Developers and Organizations

Fixing this problem starts with acknowledging it—and implementing secure development practices throughout the software lifecycle. One of the most critical steps is moving away from hardcoding secrets in the first place.

Secrets should always be stored in secure, server-side environments, never inside the application itself. Backend services should use secure vaults like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault to manage and retrieve credentials at runtime, so that the app only talks to the backend and never holds a long-lived secret. OAuth tokens and API keys should be scoped to the minimum required permissions and rotated frequently.
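One way to picture scoped, short-lived credentials is a backend that keeps the long-lived signing key to itself and hands the app a token that expires in minutes and covers a single permission. The sketch below uses only the Python standard library. `mint_token`, `verify_token`, the scope string, and the token layout are hypothetical, and a real service would more likely use an established format such as JWT through a vetted library, with the key fetched from a vault.

```python
import base64
import hashlib
import hmac
import json
import time

def mint_token(signing_key: bytes, user_id: str, scope: str,
               ttl_seconds: int = 300, now=None) -> str:
    """Issue a signed token that carries one scope and an expiry time."""
    issued = int(time.time() if now is None else now)
    claims = {"sub": user_id, "scope": scope, "exp": issued + ttl_seconds}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode()
    sig = hmac.new(signing_key, body.encode(), hashlib.sha256).hexdigest()
    return f"{body}.{sig}"

def verify_token(signing_key: bytes, token: str, required_scope: str, now=None) -> bool:
    """Accept the token only if the signature, scope, and expiry all check out."""
    try:
        body, sig = token.rsplit(".", 1)
    except ValueError:
        return False
    expected = hmac.new(signing_key, body.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    if not hmac.compare_digest(sig.encode(), expected.encode()):
        return False
    claims = json.loads(base64.urlsafe_b64decode(body))
    current = time.time() if now is None else now
    return claims["scope"] == required_scope and current < claims["exp"]

key = b"server-side-key-from-a-vault"  # placeholder; never shipped in the app
token = mint_token(key, "user-123", "uploads:write", ttl_seconds=60)
print(verify_token(key, token, "uploads:write"))  # prints True
print(verify_token(key, token, "admin"))          # prints False
```

If such a token leaks from the device, the attacker gets one narrow permission for a short window instead of standing access to the whole backend.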

Moreover, static code analyzers and secret scanners should be integrated into CI/CD pipelines. Tools like TruffleHog, Gitleaks, and GitGuardian can automatically detect secrets before they’re committed to the codebase or released to production.
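To give a feel for what such a scanner does, here is a minimal sketch that flags long, random-looking string literals using Shannon entropy. It is a toy, not a substitute for the dedicated tools named above. The 20-character minimum, the 4.0 threshold, and the names `shannon_entropy` and `suspicious_literals` are assumptions chosen for illustration.

```python
import math
import re
from collections import Counter

def shannon_entropy(text: str) -> float:
    """Bits of entropy per character; random-looking keys score high."""
    if not text:
        return 0.0
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in Counter(text).values())

# Candidate literals: quoted runs of 20+ characters from a key-like alphabet.
LITERAL = re.compile(r'["\']([A-Za-z0-9+/=_\-]{20,})["\']')

def suspicious_literals(source: str, threshold: float = 4.0):
    """Return (line_number, literal) pairs whose entropy meets the threshold."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in LITERAL.finditer(line):
            candidate = match.group(1)
            if shannon_entropy(candidate) >= threshold:
                hits.append((lineno, candidate))
    return hits

# Two made-up Swift lines: a repetitive title and a random-looking key.
sample = "\n".join([
    'let title = "aaaaaaaaaaaaaaaaaaaaaaaa"',
    'let apiKey = "q9Zr4TmX1bV7cLp0Ws8KdY3uHe6NfJg2"',
])

print(suspicious_literals(sample))
# prints [(2, 'q9Zr4TmX1bV7cLp0Ws8KdY3uHe6NfJg2')]
```

In a pipeline, a wrapper script would run a check like this over the changed files and exit with a non-zero status when it finds hits, which fails the build before the secret ships.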

Education is equally important. Teams need to be trained on the risks of exposing secrets and encouraged to follow secure coding guidelines. In the mobile app space, referencing standards such as the OWASP Mobile Application Security Testing Guide (MASTG, formerly the MSTG) can go a long way in building secure apps.

The Role of Apple in All This

While Apple is known for its strict security policies and robust sandboxing features, the prevalence of hardcoded secrets reveals a gap that needs to be addressed.

Currently, Apple reviews apps for behavioral violations, privacy issues, and user data handling, but the scale of these findings suggests that the review does not catch embedded secrets. Integrating static secret detection into the App Store review process could help mitigate this widespread issue.

Apple could also offer better tooling in Xcode, alerting developers when secrets are hardcoded. Enhanced documentation, automated warnings, and access to cloud-based credential checks could further empower developers to avoid these mistakes.

What Can End Users Do?

If you’re an iOS user, you might be wondering how to protect yourself. While it’s difficult to detect whether an app has embedded secrets, you can take a few steps to reduce your exposure.

Only download apps from trusted developers and verified publishers. Avoid granting unnecessary permissions, especially for location, microphone, and camera. Review app privacy labels and settings regularly. Consider using a VPN, and always keep your iOS version updated to benefit from the latest security patches.

You should also periodically revoke third-party app access from services like Google and Facebook. If you see suspicious activity or data leakage from a particular app, report it to Apple and the developer immediately.

Looking Ahead

The Cybernews report is a major contribution to the conversation around mobile application security. It shows that even in walled gardens like the iOS ecosystem, vulnerabilities can thrive—often silently, and at scale.

We are moving toward a world where mobile devices store and transmit increasingly sensitive data. From digital wallets and biometric IDs to healthcare records and personal memories, everything is now in our pockets. That makes securing the apps we use more critical than ever.

It’s time for mobile developers to take secrets management seriously. The tools and knowledge are out there. What’s needed now is a shift in mindset—from shipping fast to shipping secure.

Conclusion

The discovery that over 70% of the iOS apps analyzed are leaking hardcoded secrets is not just a technical issue; it’s a security crisis. Whether you’re a developer building the next big app or a user simply downloading a new productivity tool, awareness is the first line of defense.

With proper secret management, regular code audits, and a commitment to secure development practices, this problem is entirely solvable. Let’s hope this investigation serves as a turning point in how the mobile development industry views data protection and privacy.