How AI-Powered Agents Are Redefining Credential Stuffing and Evolving Cyber Threats

Hoplon InfoSec

04 Mar, 2025

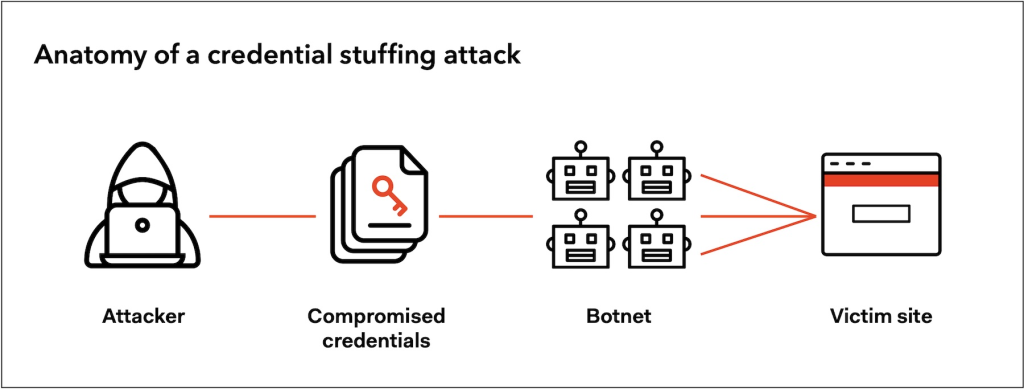

Credential stuffing, a long-standing cyberattack method in which attackers use stolen username-password pairs to break into online accounts, has been a persistent challenge in the digital world. While the basic concept has remained largely the same, the tactics and technologies used by cybercriminals have evolved rapidly, thanks to advancements in artificial intelligence (AI). With AI agents deployed by attackers, credential-stuffing attacks are becoming more sophisticated, more scalable, and harder to detect. This shift is transforming cybersecurity strategies and creating a pressing need for new defense mechanisms.

The Evolution of Credential Stuffing

Credential stuffing attacks have been a threat since the early 2000s, fueled by a growing number of data breaches that expose millions of user credentials. In these attacks, cybercriminals use automated bots to exploit users’ tendency to reuse passwords across multiple platforms. Drawing on large databases of stolen login credentials, attackers attempt to log into various services, banking on the assumption that people reuse the same password elsewhere and rarely change it.

Initially, the effectiveness of credential stuffing was limited by the sheer manual labor involved. Simple scripts could only try a few credentials at a time, and basic security measures like CAPTCHA, rate limiting, and IP blocking helped to slow down the onslaught of these attacks. However, with the rapid digital transformation and the rise of sophisticated authentication systems, attackers began facing hurdles when scaling up their campaigns.

Fast forward to today, and we are witnessing a drastic shift with the emergence of AI. Traditional automated scripts are being replaced by AI agents, which can learn from their interactions, adapt to defenses, and substantially increase the efficiency of attacks. This shift is leading to a surge in the scale and complexity of credential-stuffing attacks, creating an urgent need for organizations to rethink their cybersecurity strategies.

AI Agents: The New Frontier of Credential Stuffing Attacks

AI agents represent a new generation of automated tools that can mimic human-like behavior and interact with websites and applications in ways that traditional bots simply cannot. Unlike conventional bots, which are restricted to executing predefined tasks, AI agents can learn from each attack attempt, which makes them highly effective at evading security defenses and optimizing attack methods in real time.

For instance, AI agents like OpenAI’s “Operator” can automatically navigate user interfaces and adapt to different website layouts, and agents built or repurposed for abuse may also attempt to get past CAPTCHAs. Such agents can handle not only simple login forms but also more complex multi-step authentication flows. This flexibility allows attackers to scale up their operations rapidly, targeting multiple services and bypassing conventional security mechanisms that were once effective at halting traditional credential-stuffing attacks.

The ability of AI agents to perform at such a high level of sophistication is reshaping the threat landscape. Where traditional credential stuffing attacks would often fail due to defense mechanisms like CAPTCHAs, rate limiting, or IP blocking, AI agents are more adept at simulating human-like behavior, allowing them to slip through unnoticed.

How AI Agents Work in Credential Stuffing Attacks

AI-driven credential stuffing attacks are highly versatile and can execute large-scale campaigns with precision. Here’s how they typically work:

- Data Gathering: AI agents begin by collecting large datasets of stolen login credentials. These credentials could come from data breaches, phishing attacks, or leaks on the dark web. Since many people reuse passwords across different platforms, these stolen credentials can be used to attack multiple services.

- Adaptive Learning: Once the AI agent has a list of stolen credentials, it starts attacking multiple websites. Unlike traditional bots, the AI agent learns from its failures. If a login attempt fails, it analyzes the website’s response and adjusts its approach, determining the next steps needed to bypass security measures like CAPTCHA or IP blocking.

- Human-Like Interaction: To further evade detection, AI agents can mimic human behaviors like mouse movements, typing speed, and scrolling. This helps them blend in with legitimate user traffic, making it far harder for traditional security systems to flag their activities.

- Continuous Scaling: Once the AI agent successfully breaches one account, it doesn’t stop there. It continues to refine its techniques and can extend the attack to other accounts in a highly automated way. These agents can process millions of login attempts in a fraction of the time it would take a traditional bot, making them significantly more dangerous.

Implications for Cybersecurity

The rise of AI-driven credential-stuffing attacks presents several significant challenges for cybersecurity professionals:

- Increased Attack Success Rates: AI agents are far more efficient at identifying and exploiting weak or reused passwords. As they learn from failed login attempts, their success rate improves, leading to a higher number of compromised accounts. With millions of stolen credentials available on the dark web, attackers can conduct highly effective campaigns without much effort.

- Evasion of Traditional Defenses: Classic defenses like CAPTCHA, IP rate limiting, and user-agent filtering are becoming increasingly ineffective against AI agents. Because these agents can simulate human behavior, they can bypass mechanisms designed to detect and block automated traffic. Additionally, AI agents can evade geographic or behavioral analysis systems by distributing their attacks across multiple IP addresses and using sophisticated proxies.

- Massive Scale and Speed: The most significant advantage of AI-driven credential stuffing attacks is their scalability. AI agents can make millions of login attempts across a range of websites in hours, something that was previously impractical with traditional bots. As a result, the scale of these attacks is expanding, and organizations are struggling to keep up with the volume.

- Advanced Evasion Techniques: AI agents continuously evolve, using advanced techniques to refine their strategies in real time. They can adapt to different websites, bypass security systems, and optimize their attacks based on feedback, which makes it difficult to stop attacks preemptively. This constant evolution creates a cat-and-mouse game for security professionals defending against these sophisticated agents.
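To see why per-IP rate limiting alone struggles against a distributed campaign, a rough back-of-the-envelope calculation helps. The sketch below is illustrative only: the function name `proxies_needed` and every number in it (one million attempts, a one-hour window, a limit of five attempts per minute per IP) are assumptions chosen for the example, not figures from any real incident.

```python
import math


def proxies_needed(total_attempts, hours, per_ip_limit_per_minute):
    """Smallest number of source IPs that keeps each IP under a per-IP limit.

    All inputs are hypothetical values for illustration.
    """
    # Attempts a single IP can make in the window without tripping the limit.
    attempts_per_ip = per_ip_limit_per_minute * 60 * hours
    return math.ceil(total_attempts / attempts_per_ip)


# One million attempts in one hour, with a limit of 5 attempts/minute per IP.
print(proxies_needed(1_000_000, hours=1, per_ip_limit_per_minute=5))
```

Under these assumed numbers, a few thousand proxy addresses are enough for every individual IP to stay below the threshold, which is why defenders usually pair per-IP limits with signals that look across the whole service.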

Defensive Strategies in the Age of AI

As AI-driven credential stuffing attacks evolve, so too must the defense strategies of organizations. Here are several steps businesses can take to counter these threats:

- Advanced Bot Detection Systems: To counter AI-based bots, organizations should implement advanced bot detection systems that use machine learning algorithms to analyze behavioral patterns. These systems can detect subtle anomalies in user interactions, such as irregular typing speeds or mouse movements, that are indicative of automated attacks.

- Multi-Factor Authentication (MFA): One of the most effective ways to protect accounts is by requiring multiple forms of authentication. Even if an attacker can steal a user’s credentials, they would still need access to another form of authentication—like a one-time passcode or biometric verification—to successfully log in.

- Credential Monitoring and Breach Detection: Implement continuous monitoring of user credentials to detect when they are exposed in a breach. Services like Have I Been Pwned or password managers with breach alert features can notify users when their credentials are compromised, allowing them to secure their accounts quickly.

- User Education: It’s crucial to educate users about the dangers of reusing passwords and the importance of creating strong, unique passwords for each account. Encouraging the use of password managers can help users maintain complex, unique passwords without needing to remember each one.

- Rate Limiting and Traffic Analysis: Implement rate limiting and traffic analysis to block suspicious login attempts. By monitoring login attempts and analyzing patterns in real time, organizations can quickly identify and block attacks before they succeed. Combining this with behavioral analysis can further reduce the effectiveness of AI agents.

- AI and Machine Learning in Defense: Just as attackers use AI, so can defenders. Machine learning algorithms can detect abnormal activity and respond to threats faster than manual review. AI-based defenses can analyze large traffic volumes, quickly identify patterns, and adjust defense mechanisms in real time.
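The rate limiting and traffic analysis step above can be sketched in a few lines. This is a minimal, in-memory illustration rather than a production design: the class name `LoginMonitor`, its parameters, and all thresholds are assumptions made for this example. It combines a sliding-window limit per key (an IP address or an account name) with a service-wide failure-ratio check, since a distributed campaign may keep every single key under its limit while still driving the overall failure rate up.

```python
import time
from collections import defaultdict, deque


class LoginMonitor:
    """Hypothetical sketch: per-key sliding-window limit plus a global failure-ratio check."""

    def __init__(self, max_attempts=5, window_seconds=60.0,
                 failure_ratio_alert=0.8, min_samples=20):
        self.max_attempts = max_attempts
        self.window = window_seconds
        self.failure_ratio_alert = failure_ratio_alert
        self.min_samples = min_samples
        self._attempts = defaultdict(deque)  # key -> timestamps of recent attempts
        self._outcomes = deque()             # (timestamp, succeeded) for all logins

    def allow(self, key, now=None):
        """Return True if `key` may make another login attempt right now."""
        now = time.monotonic() if now is None else now
        stamps = self._attempts[key]
        # Drop attempts that have aged out of the window.
        while stamps and now - stamps[0] >= self.window:
            stamps.popleft()
        if len(stamps) >= self.max_attempts:
            return False
        stamps.append(now)
        return True

    def record_outcome(self, succeeded, now=None):
        """Record whether a login attempt succeeded, for the global check."""
        now = time.monotonic() if now is None else now
        self._outcomes.append((now, succeeded))

    def under_attack(self, now=None):
        """Return True if the recent service-wide failure ratio looks abnormal."""
        now = time.monotonic() if now is None else now
        while self._outcomes and now - self._outcomes[0][0] >= self.window:
            self._outcomes.popleft()
        total = len(self._outcomes)
        if total < self.min_samples:
            return False  # too little traffic to judge
        failures = sum(1 for _, ok in self._outcomes if not ok)
        return failures / total >= self.failure_ratio_alert


# Usage with explicit timestamps so the behavior is easy to follow.
monitor = LoginMonitor(max_attempts=3, window_seconds=10.0)
for t in range(4):
    print(t, monitor.allow("203.0.113.7", now=t))  # the fourth attempt is refused
```

A real deployment would keep this state in a shared store so that it survives restarts and works across several servers, and would treat the failure-ratio alert as one signal among many (for example, to trigger step-up authentication) rather than as a verdict on its own.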

Conclusion

Integrating AI into credential-stuffing attacks represents a dramatic shift in the landscape of cybersecurity threats. These AI agents can bypass traditional defenses and scale attacks to previously unthinkable levels. This means that organizations must evolve their security measures to match the sophistication of the threats they face. By combining AI- and machine-learning-based defenses with fundamental security practices like multi-factor authentication, businesses can stay ahead of attackers and protect their users from this growing menace.

As attackers continue to innovate with AI-driven methods, it is essential for organizations to proactively strengthen their defenses and adapt to the changing cybersecurity landscape.
