AI Assistants as Malware C2 Proxies: Copilot and Grok Abuse

Hoplon InfoSec

18 Feb, 2026

Wait… Can AI Tools Actually Be Used for Malware?

In February 2026, security researchers demonstrated something that honestly made many people in cybersecurity pause for a second. They showed that tools like Microsoft Copilot and xAI’s Grok could potentially be abused as hidden communication channels for malware. Put simply, attackers might use AI assistants as malware C2 proxies instead of traditional attacker-run servers.

That sounds dramatic, I know. But here’s the important part. This was not a confirmed breach. No official reports say Copilot or Grok were hacked. What researchers showed is a technique. A method. A possibility.

And it matters right now because AI tools are everywhere. They’re inside browsers, office software, developer tools, and even social platforms. If attackers can use trusted AI services to quietly relay commands, that changes how we think about cybersecurity.

What Happened: Researchers Tested a New Abuse Technique

Let me explain this the way I’d tell a friend over coffee.

Security researchers built a proof of concept. They didn’t hack Copilot. They didn’t break into Grok. Instead, they asked a different question. What if malware on an infected computer used an AI chatbot as a middleman to talk to the attacker?

That’s where the idea of AI assistants as malware C2 proxies comes in.

Normally, malware connects to a suspicious server. Security systems look for weird domains, unknown IP addresses, or strange outbound connections. That’s how many attacks get caught.

But in this case, the infected machine connects to a trusted AI platform using normal encrypted HTTPS traffic. It looks like a regular user interacting with Copilot or Grok.

There is no publicly confirmed CVE tied to this research and no official breach announcement. To be clear, any claim of widespread real-world attacks using this technique is unverified, and no official source confirms abuse at scale. What we’re looking at is technical feasibility.

Still, feasibility matters. In cybersecurity, if something can be done, eventually someone will try it.

Why Would Attackers Even Do This?

Here’s the thing about attackers. They love blending in.

Traditional command and control infrastructure is risky. You register a shady domain. It gets flagged. You host a server in a questionable region. It gets blocked.

Now imagine you can route your malware traffic through a domain that companies already trust and allow.

That’s the appeal of using AI assistants as malware C2 proxies.

There are a few practical reasons this idea exists:

AI platforms use encrypted HTTPS by default.

Their domains are trusted and widely allowed in corporate firewalls.

Traffic to AI tools is becoming normal in enterprise environments.

Think about it. If your company uses Microsoft Copilot across departments, outbound traffic to Microsoft AI endpoints is expected. No red flags. No alerts. Just business as usual.

Attackers have done similar things before with cloud storage, social media platforms, and messaging apps. This is just the next evolution.

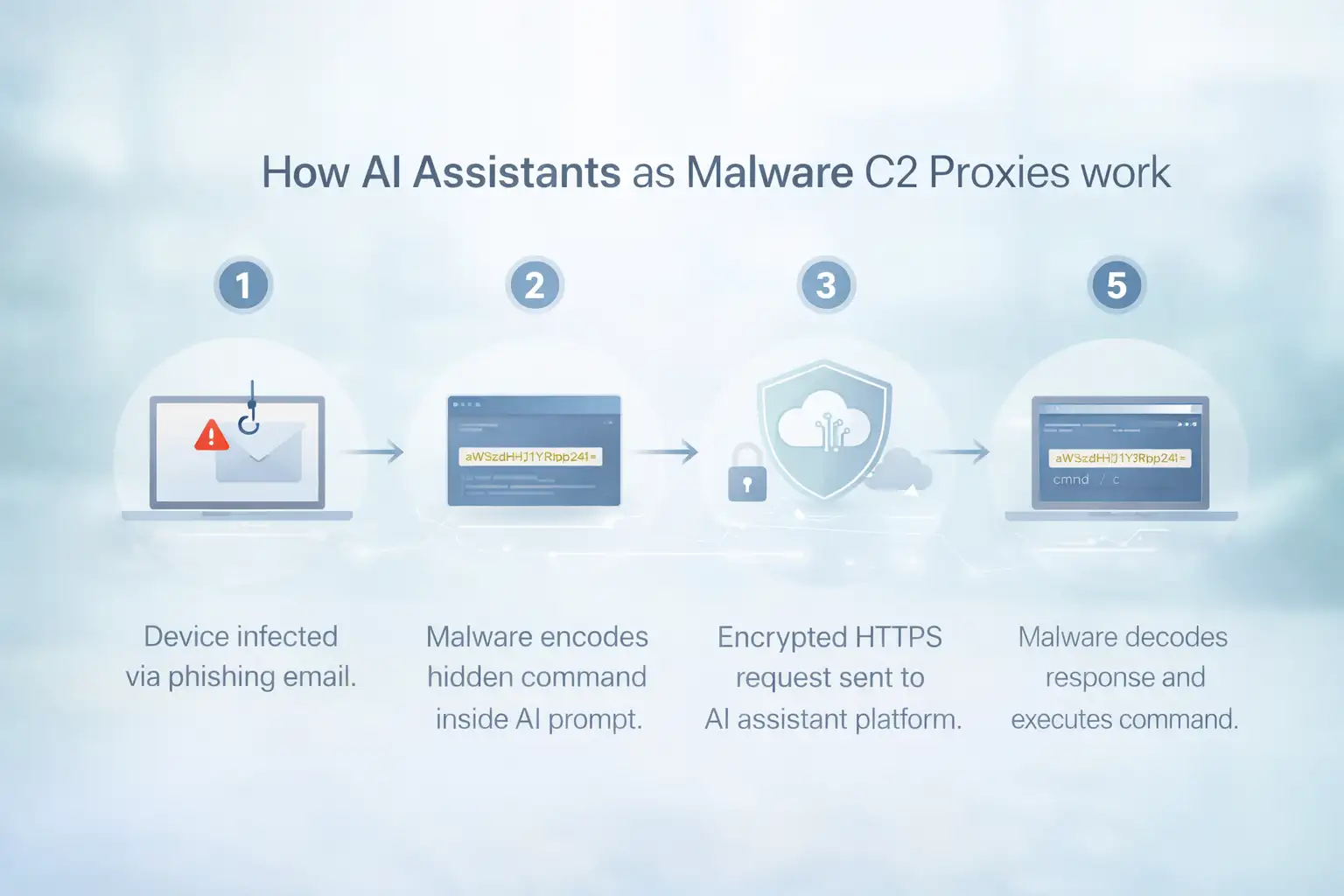

How It Works: Step by Step, Without the Hype

Let’s break it down clearly.

Step 1: The Device Gets Infected

This part doesn’t change. Phishing email. Malicious download. Fake update. The usual suspects.

The AI platform is not involved here. The infection happens the old-fashioned way.

Step 2: The Malware Encodes Data in Prompts

Instead of contacting a suspicious domain, the malware sends a carefully structured message to an AI assistant.

It could look like a normal question. But hidden inside might be encoded data. Maybe base64. Maybe some disguised text pattern.

On the network, it looks like someone simply asked Copilot a question.
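From the defender’s side, that encoded data is one of the few visible artifacts. Here is a minimal sketch of how logged prompt text could be screened for base64-like payloads. It assumes your deployment can log prompts at all, which many cannot. The function names, the 24-character minimum, and the entropy threshold are illustrative choices, not values from the research.

```python
import base64
import binascii
import math
import re
from collections import Counter

# Runs of base64-alphabet characters that are longer than typical words in prose.
B64_RUN = re.compile(r"[A-Za-z0-9+/]{24,}={0,2}")


def shannon_entropy(text: str) -> float:
    """Bits of entropy per character; repetitive text scores low."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def suspicious_runs(prompt: str, min_entropy: float = 4.0) -> list[str]:
    """Return substrings that decode as valid base64 and look high-entropy.

    The threshold is an assumed starting point and would need tuning
    against real prompt logs.
    """
    hits = []
    for match in B64_RUN.finditer(prompt):
        run = match.group(0)
        try:
            base64.b64decode(run, validate=True)
        except (binascii.Error, ValueError):
            continue  # not well-formed base64 (e.g. wrong length or padding)
        if shannon_entropy(run) >= min_entropy:
            hits.append(run)
    return hits
```

A check like this only catches the laziest encodings. Data disguised as ordinary-looking sentences would pass straight through, which is why timing and process context matter as well.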

Step 3: The AI Returns a Response

The AI processes the input and returns a response. In the proof of concept, the researchers designed prompts so that the response carried encoded instructions back to the malware.

The AI does not know it’s being used this way. It simply generates output based on the input.

This is why the technique is about abuse, not compromise.

Step 4: The Malware Decodes the Response

The infected system reads the AI response and extracts the hidden command.

That command might instruct it to download another file, collect data, or perform another action.

All while appearing to just talk to a chatbot.

Clever? Yes. Confirmed widespread threat? Not yet.

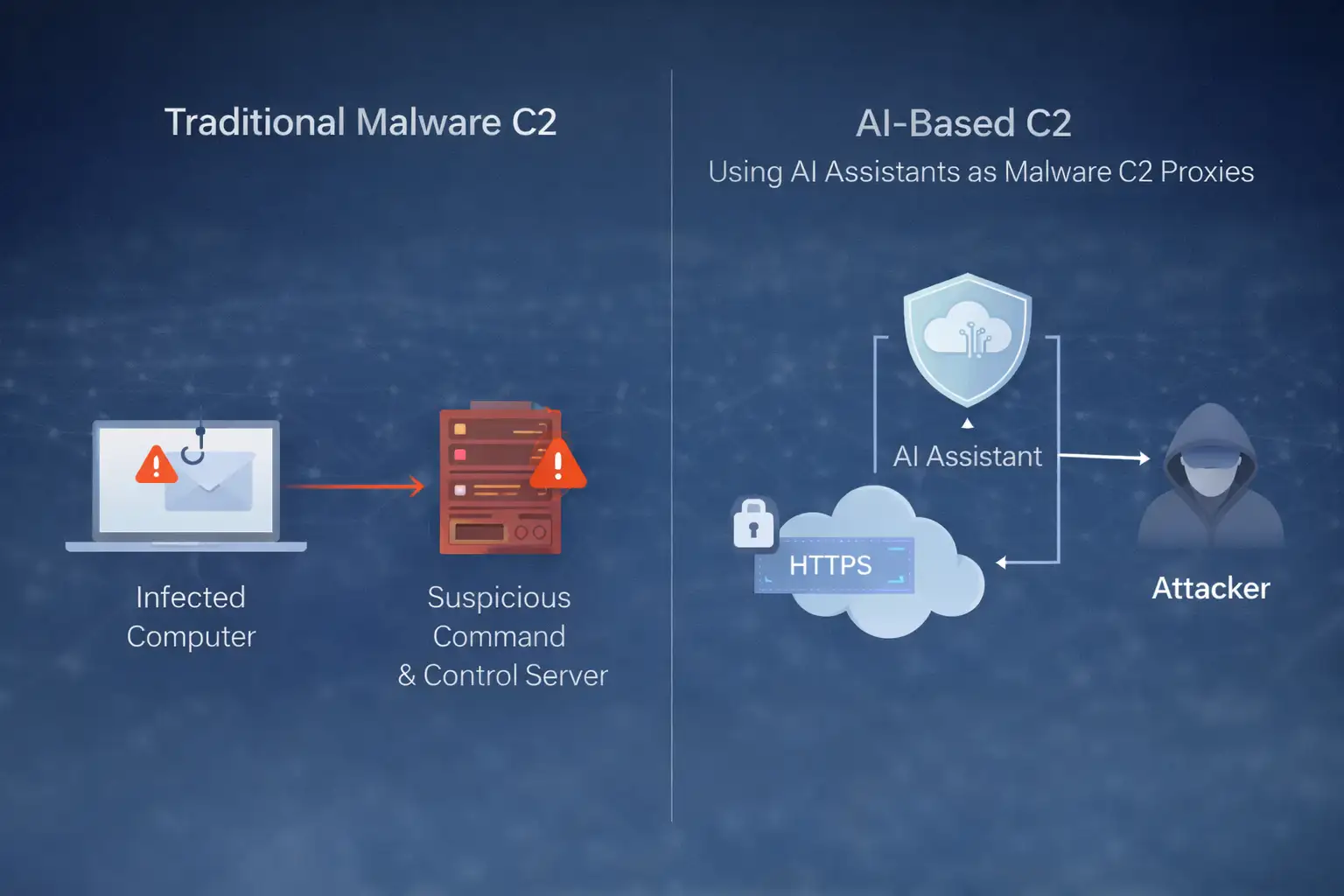

Before vs. After: Why This Is Different

Let’s compare two scenarios.

Before AI-Based C2

An infected computer starts talking to a domain registered two days ago. Security tools detect unusual DNS requests. Alerts fire. The security team investigates.

It’s obvious something is wrong.

After AI-Based C2

Now the infected computer talks to a major AI platform. A domain the company already trusts. One that thousands of employees use daily.

From a network view, it looks normal. Encrypted. Frequent. Expected.

That’s the subtle shift. Not louder attacks. Quieter ones.

This doesn’t mean AI platforms are insecure. It means attackers look for trusted infrastructure to hide behind.
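The contrast between the two scenarios can be sketched as a toy reputation rule. Everything here is an assumption for illustration: the `.example` domain names are placeholders, the 30-day cutoff is arbitrary, and real products combine many more signals than domain age.

```python
from datetime import date

# Hypothetical allowlist entry; ".example" names are placeholders, not real services.
TRUSTED_DOMAINS = {"assistant.trusted-ai.example"}


def domain_verdict(domain: str, registered_on: date, today: date,
                   min_age_days: int = 30) -> str:
    """Classic reputation check: trust the allowlist, distrust very new domains."""
    if domain in TRUSTED_DOMAINS:
        return "allow"
    age_days = (today - registered_on).days
    return "alert" if age_days < min_age_days else "allow"


today = date(2026, 2, 18)
# A domain registered two days ago trips the rule.
print(domain_verdict("cdn-update-check.example", date(2026, 2, 16), today))  # alert
# Traffic to the allowlisted AI service passes, whatever it carries.
print(domain_verdict("assistant.trusted-ai.example", date(2020, 1, 1), today))  # allow
```

The rule itself is not wrong. It just has nothing to say about what happens inside a connection it already trusts.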

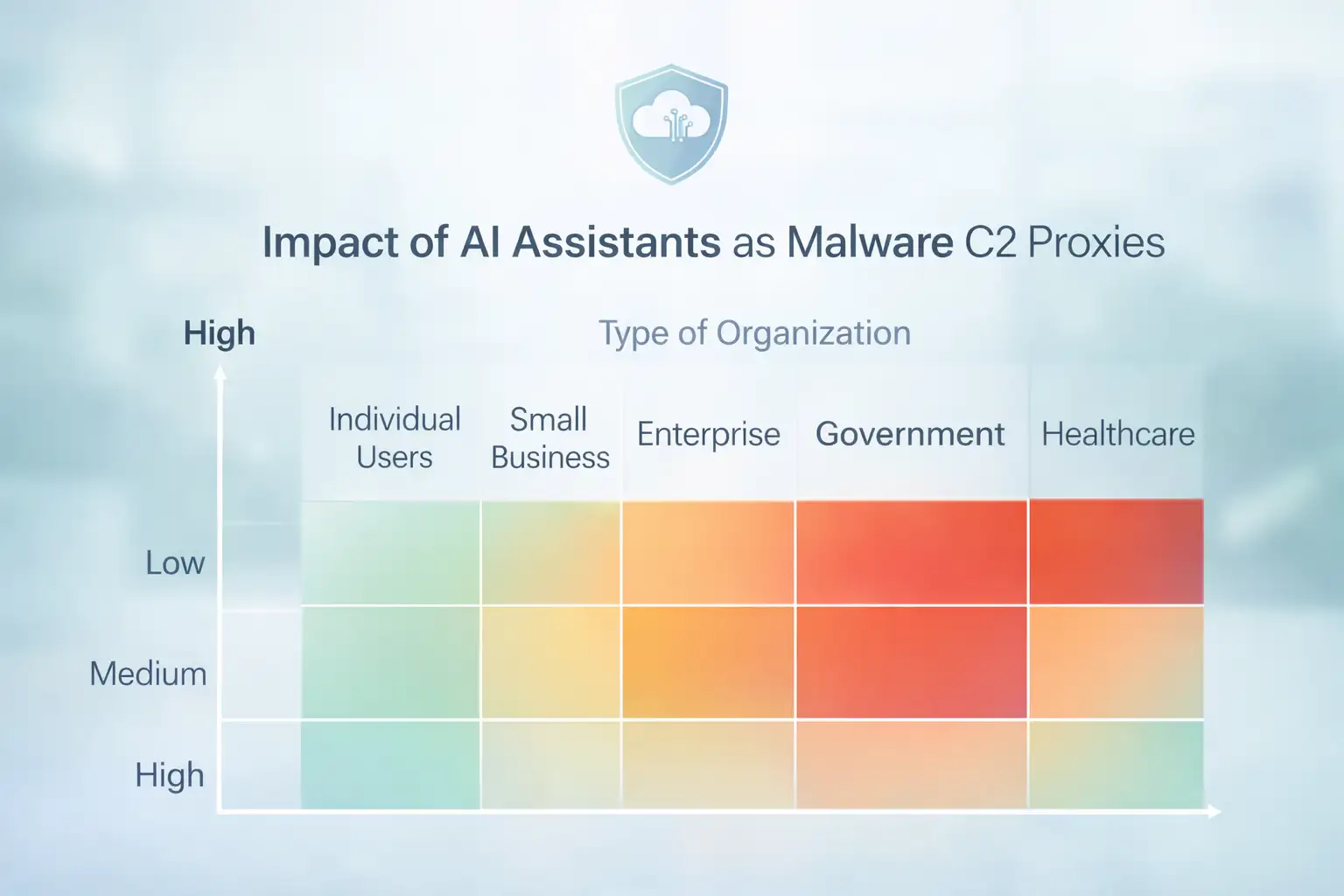

Who Should Actually Care About This?

Let’s be honest. Not every home user needs to panic.

Regular Users

If you’re just chatting with Copilot occasionally, you’re not suddenly at high risk because of this research.

Your biggest risks are still phishing emails and weak passwords.

Businesses

This is where it gets serious.

Enterprises that heavily use AI services create consistent traffic patterns. That makes it easier for attackers to hide malicious communication inside legitimate streams.

If sensitive data is involved, the stakes increase.

Security Teams

For security professionals, this is a mindset shift.

Blocking suspicious domains is no longer enough. You need behavioral detection. You need to notice when something automated is interacting with an AI service in a way humans don’t.

That’s a harder problem.
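One simple behavioral signal is timing. People type prompts at irregular intervals, while a polling loop tends to be steady. The sketch below flags request timing that is suspiciously regular. It assumes you already have per-host timestamps for requests to an AI service, and the thresholds are guesses rather than tuned values.

```python
from statistics import mean, pstdev


def looks_automated(timestamps: list[float], min_events: int = 6,
                    max_cv: float = 0.1) -> bool:
    """Flag request timing that is too regular to be a person typing prompts.

    timestamps: request times in seconds, in any order.
    max_cv: coefficient of variation (stdev / mean) of the gaps between
            requests, below which timing is treated as machine-like.
    """
    if len(timestamps) < min_events:
        return False  # too little data to judge
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    avg = mean(gaps)
    if avg == 0:
        return True  # many requests at the same instant
    return pstdev(gaps) / avg <= max_cv
```

Malware that adds random jitter to its check-ins would slip past this, so treat it as one weak signal to combine with others, not as a detector on its own.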

Benefits and Limitations: Let’s Keep It Balanced

It would be easy to turn this into a fear story. But that would be misleading.

AI tools bring real productivity benefits:

Faster document drafting

Code assistance

Knowledge summarization

Workflow automation

Those advantages don’t disappear because researchers demonstrated AI assistants as malware C2 proxies in a lab.

There are also some limitations, though:

Malicious and legitimate AI traffic can be hard to tell apart.

Encrypted channels reduce visibility into what is being sent.

If access controls are not set up correctly, scripts can automate API access.

At the time of writing, there is no confirmed global campaign abusing Copilot or Grok in this exact way. The research shows potential, not active widespread exploitation.

That distinction matters.

Common Misunderstandings

Let’s clear up a few things.

First, this does not mean Copilot or Grok were hacked. No verified evidence supports that claim.

Second, turning off AI tools entirely is not a silver bullet. Attackers adapt. If AI channels are blocked, they will use something else.

Third, encryption is not the villain. HTTPS protects users. The challenge is identifying abnormal behavior inside allowed services.

What Should You Do Right Now?

If you’re an individual user, keep doing the basics well.

Keep your system updated.

Avoid downloading suspicious files.

Be cautious with browser extensions and unknown integrations.

If you’re running a business, think deeper.

Monitor unusual API usage patterns.

Log AI interactions where possible.

Restrict automated scripts from freely accessing AI APIs.

Use endpoint detection tools that analyze behavior, not just domains.

And talk to your AI vendors. Ask what logging options are available. Transparency is key as AI adoption grows.
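As a starting point for the monitoring steps above, here is a small sketch that flags processes you would not expect to be talking to an AI service. It assumes your endpoint tooling can export connection records with a host, process name, and destination domain. The record shape, the domain, and the process list are all hypothetical and would come from your own inventory.

```python
from dataclasses import dataclass

# Illustrative values only; a real deployment would use its own inventory.
AI_DOMAINS = {"assistant.trusted-ai.example"}
EXPECTED_PROCESSES = {"msedge.exe", "chrome.exe", "winword.exe"}


@dataclass(frozen=True)
class Connection:
    host: str      # machine that made the connection
    process: str   # executable name that opened it
    domain: str    # destination domain


def unexpected_ai_clients(connections: list[Connection]) -> list[Connection]:
    """Return connections to AI domains made by processes outside the expected set."""
    return [
        conn for conn in connections
        if conn.domain in AI_DOMAINS
        and conn.process.lower() not in EXPECTED_PROCESSES
    ]


log = [
    Connection("ws-017", "chrome.exe", "assistant.trusted-ai.example"),
    Connection("ws-042", "updater_helper.exe", "assistant.trusted-ai.example"),
    Connection("ws-042", "updater_helper.exe", "intranet.corp.example"),
]
for conn in unexpected_ai_clients(log):
    print(conn.host, conn.process)  # ws-042 updater_helper.exe
```

An allowlist like this will produce false positives whenever a new legitimate tool starts using AI features, so it works best as a prompt for review rather than an automatic block.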

A simple network diagram comparing traditional command and control with AI-relayed communication can help security teams visualize this risk.

Frequently Asked Questions

Can AI assistants really control malware?

Not directly. They are not built for that. However, researchers have shown that attackers can structure communication so that AI systems unintentionally act as relays.

Is there a CVE for this issue?

As of now, no specific CVE is publicly linked to Copilot or Grok regarding this technique.

Are companies banning AI tools?

There is no evidence of widespread bans. Some organizations are reviewing policies and monitoring strategies.

Has this been seen in real attacks?

No official confirmation exists of large-scale real-world campaigns using AI assistants as malware C2 proxies so far. The concept is based on controlled research demonstrations.

Final Thoughts: The Real Lesson Here

If you ask me what this research really means, I’d say this.

Technology evolves. Attackers evolve with it.

The idea of AI assistants as malware C2 proxies is not a reason to fear AI. It’s a reminder that any widely trusted system can become part of an attacker’s toolkit.

The real shift is in mindset. We can’t rely only on blocking suspicious domains anymore. We have to understand behavior. Context. Patterns.

AI is not the enemy. Blind trust is.

And as AI tools become even more integrated into daily life, this conversation is only going to grow.

If your organization relies on AI-powered tools, now is the time to review your monitoring strategy. Share this analysis with your security team and evaluate whether your detection systems are ready for this emerging threat model.

