AI Penetration Testing Explained: How Smart Attacks Find Real Risks

Hoplon InfoSec

11 Jan, 2026

Can AI penetration testing really find security holes that human testers miss?

The short answer is yes, but only when it is used the right way and for the right reasons. NIST, OWASP, and ongoing industry research all indicate that AI penetration testing improves speed, coverage, and pattern recognition.

However, it still needs human judgment to check and understand the results. No official source says that AI can completely replace human penetration testers, so you should be careful with any claims like that.

A quick summary

AI penetration testing uses machine learning and automation to mimic cyberattacks, find vulnerabilities, and help businesses figure out what their real security risks are. It doesn't hack systems on its own, and it doesn't take the place of skilled workers. Instead, it works like a quick, tireless helper that helps testers see more, test more thoroughly, and respond more quickly.

What exactly is AI penetration testing?

AI penetration testing means using AI to support and improve traditional penetration testing. In short, it uses machine learning models, behavioral analysis, and automated decision systems to find security flaws.

Traditional penetration testing depends heavily on skilled people. A tester examines applications, networks, and systems by hand to find logic flaws, vulnerabilities, and misconfigurations. AI penetration testing adds another layer. It can quickly scan large attack surfaces, learn from past attacks, and change its testing paths based on how the target system responds.

Based on what I've seen working with security teams, the biggest change isn't automation; it's intelligence. AI-driven tools can adapt in real time instead of running static scripts. If one path doesn't work, the system tries a different one. That is closer to how real attackers act.

Why AI penetration testing is important right now

Cyber environments are no longer easy to navigate. Cloud workloads change every day. APIs come and go. Development teams ship code several times a day. Manual testing alone has trouble keeping up.

AI penetration testing helps fill this gap by running continuously. With the right controls, it can run during development, staging, and production. This is important because many breaches exploit known problems that were never tested properly.

Another reason it matters is how attackers act. Threat actors already use tools that are automated and powered by AI. Defenders who rely only on manual methods are at a disadvantage. AI penetration testing helps level the playing field.

Step by step, how AI penetration testing works

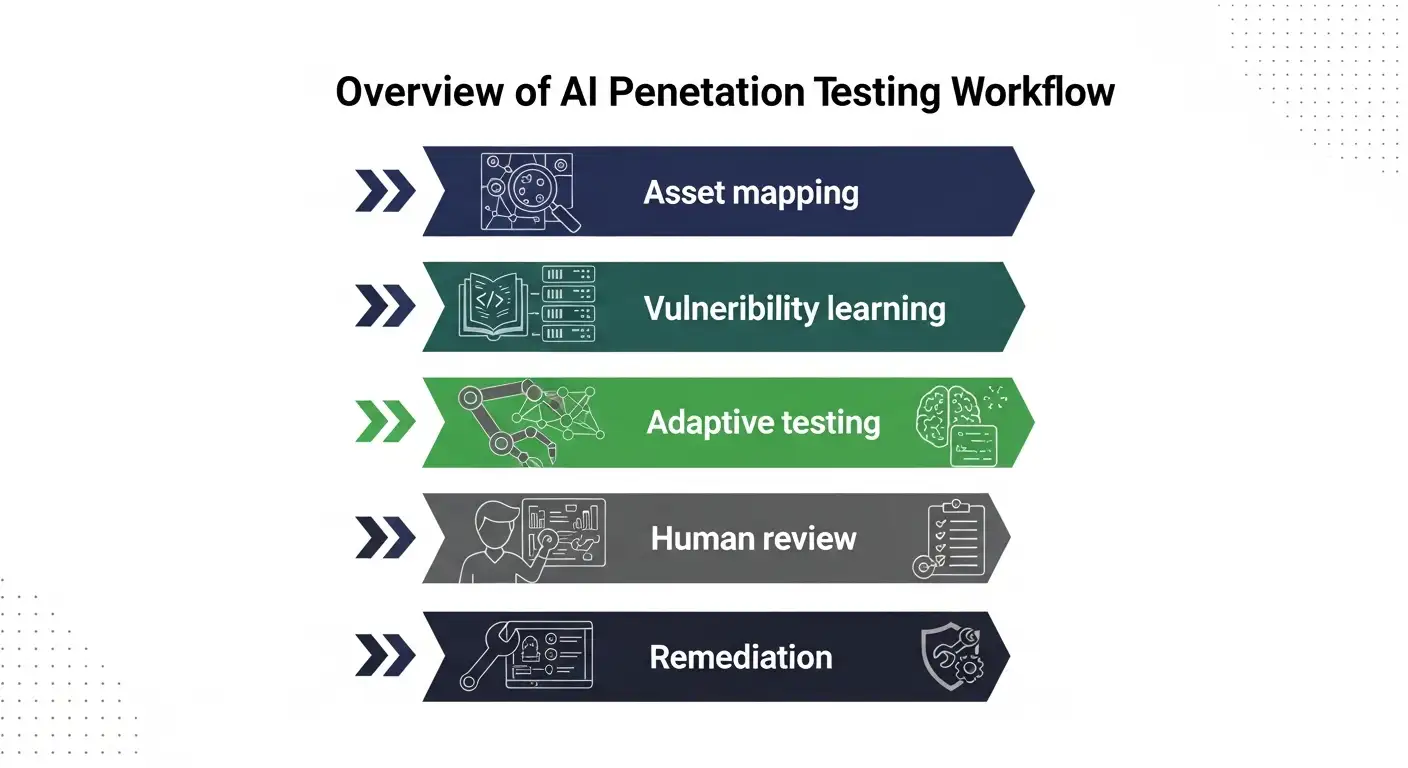

Step 1: Finding and mapping assets

The first step in AI penetration testing systems is to find assets. This includes application endpoints, APIs, IP ranges, cloud services, and domains. Machine learning models help link assets that go together, even when the names don't match.

In real projects, this step often uncovers forgotten systems: old test servers, unused APIs, and shadow IT components. Attackers often use these assets as entry points.
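To make the idea of linking assets with mismatched names more concrete, here is a minimal sketch. It uses plain string similarity from Python's standard library as a stand-in for a trained model, and the hostnames, the `normalize` rule, and the 0.75 threshold are all assumptions made up for illustration.

```python
from difflib import SequenceMatcher

def normalize(hostname):
    """Keep only the letters of the leftmost DNS label, lowercased."""
    label = hostname.lower().split(".")[0]
    return "".join(ch for ch in label if ch.isalpha())

def similarity(a, b):
    """Similarity between two hostnames, from 0.0 (different) to 1.0 (same)."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()

def group_assets(hostnames, threshold=0.75):
    """Greedily place each hostname into the first group it resembles."""
    groups = []
    for host in hostnames:
        for group in groups:
            if similarity(host, group[0]) >= threshold:
                group.append(host)
                break
        else:
            groups.append([host])  # nothing similar yet, start a new group
    return groups

# Hypothetical inventory; none of these names come from a real environment.
assets = [
    "payments-api.example.com",
    "paymentsapi-v2.example.net",
    "old-test01.example.com",
    "blog.example.com",
]
groups = group_assets(assets)
for group in groups:
    print(group)
```

A real platform would likely combine many more signals (certificates, IP ownership, response fingerprints), but the grouping step follows the same shape.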

Step 2: Smartly finding vulnerabilities

AI penetration testing doesn't just look at known vulnerability databases. It also looks for patterns. For instance, it might see that some error messages look like logic flaws that have already been used to hack into systems.

This doesn't mean it invents new security holes out of thin air. It learns from public vulnerability reports, known attack methods, and observed behavior.

There are no verified sources that back up the claim that an AI tool can find zero-day vulnerabilities on its own.
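As a rough illustration of the pattern matching on error messages described in this step, the sketch below flags responses that resemble known information leaks. It is a rule-based stand-in, not a trained model, and the signature names and regular expressions are hypothetical examples rather than any tool's real rule set.

```python
import re

# Hypothetical signatures for illustration; a real tool would learn or curate many more.
SIGNATURES = {
    "database error leaked": re.compile(
        r"sql syntax|unterminated quoted string", re.IGNORECASE
    ),
    "stack trace exposed": re.compile(
        r"traceback \(most recent call last\)", re.IGNORECASE
    ),
    "filesystem path disclosed": re.compile(r"/var/www/|/home/\w+/"),
}

def classify_response(body):
    """Return the labels of every signature that matches the response body."""
    return [label for label, pattern in SIGNATURES.items() if pattern.search(body)]

# Made-up response bodies, as a tester might see them in a lab.
samples = [
    "You have an error in your SQL syntax near 'x'",
    "Welcome back",
]
for body in samples:
    print(repr(body), "->", classify_response(body))
```

Each match is only a hint that something deserves a closer look. A human still has to confirm whether the flagged response points to an exploitable flaw.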

Step 3: Simulation of adaptive exploitation

This is where AI penetration testing feels different. The system attempts exploitation in a controlled way and adapts based on what it learns. If a payload fails, it changes the parameters.

It's like a chess engine that doesn't just follow one script but tries different moves. This adaptive behavior makes coverage better, but it still needs strict safety limits.
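Here is a small sketch of that adaptive loop, assuming an epsilon-greedy selection rule (one simple reinforcement learning technique; actual products may use something quite different). The strategies are abstract labels, the target is a simulation with made-up success rates, and `max_attempts` plays the role of the safety limit.

```python
import random

def success_rate(record):
    """Observed success rate; untried strategies get an optimistic 1.0."""
    if record["attempts"] == 0:
        return 1.0
    return record["successes"] / record["attempts"]

def choose_strategy(stats, epsilon, rng):
    """Epsilon-greedy: usually pick the best-looking strategy, sometimes explore."""
    if rng.random() < epsilon:
        return rng.choice(list(stats))
    return max(stats, key=lambda name: success_rate(stats[name]))

def run_adaptive_test(target, strategies, max_attempts=50, epsilon=0.2, seed=0):
    """Try strategies against `target`, never exceeding the attempt budget."""
    rng = random.Random(seed)
    stats = {name: {"attempts": 0, "successes": 0} for name in strategies}
    for _ in range(max_attempts):  # hard safety limit on total attempts
        name = choose_strategy(stats, epsilon, rng)
        succeeded = target(name, rng)
        stats[name]["attempts"] += 1
        stats[name]["successes"] += int(succeeded)
    return stats

def simulated_target(strategy, rng):
    """Stand-in for a lab system: each strategy has a made-up success rate."""
    rates = {"strategy_a": 0.05, "strategy_b": 0.60, "strategy_c": 0.10}
    return rng.random() < rates[strategy]

results = run_adaptive_test(
    simulated_target, ["strategy_a", "strategy_b", "strategy_c"]
)
for name, record in results.items():
    print(name, record)
```

The point of the design is that failed attempts shift effort toward what works, while the fixed budget keeps the loop from hammering the target indefinitely.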

Step 4: Scoring and ranking risks

Risk scoring models are often part of AI penetration testing platforms. These models weigh how easy a flaw is to exploit, how exposed the affected asset is, how it affects the business, and how often similar flaws have led to breaches in the past.

This helps teams stay focused on what really matters. A bug in an internal system that doesn't pose a lot of risk shouldn't take attention away from a flaw in a public API that does.
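The scoring idea can be sketched as a simple weighted sum. The four factors come from the description above, but the weights, the 0 to 1 input scale, and the two example findings are assumptions I picked for illustration; real platforms use their own models.

```python
from dataclasses import dataclass

# Hypothetical weights; they sum to 1.0 so the score lands between 0 and 100.
WEIGHTS = {
    "exploitability": 0.35,
    "exposure": 0.25,
    "business_impact": 0.30,
    "breach_history": 0.10,
}

@dataclass
class Finding:
    name: str
    exploitability: float   # 0.0 (hard to exploit) to 1.0 (trivial)
    exposure: float         # 0.0 (isolated) to 1.0 (public internet)
    business_impact: float  # 0.0 (negligible) to 1.0 (critical)
    breach_history: float   # 0.0 (never seen in breaches) to 1.0 (common)

def risk_score(finding):
    """Weighted sum of the four factors, scaled to 0-100."""
    total = sum(weight * getattr(finding, factor) for factor, weight in WEIGHTS.items())
    return round(100 * total, 1)

findings = [
    Finding("internal tool bug", 0.3, 0.1, 0.2, 0.1),
    Finding("public API auth flaw", 0.8, 1.0, 0.9, 0.6),
]
ranked = sorted(findings, key=risk_score, reverse=True)
for finding in ranked:
    print(finding.name, risk_score(finding))
```

With these made-up numbers, the public API flaw ranks well above the internal bug, which is the prioritization the section describes.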

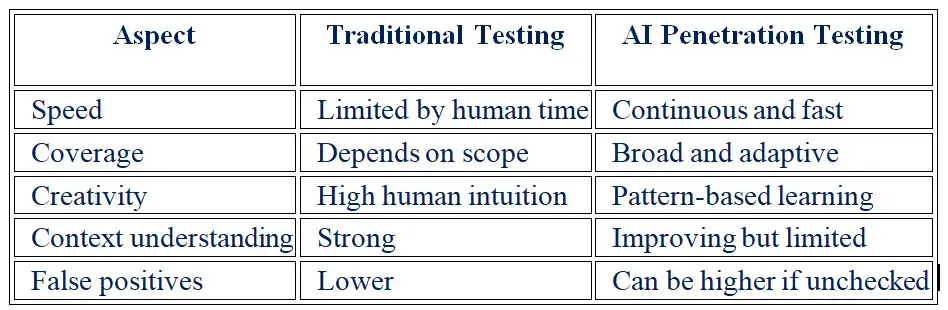

AI penetration testing versus conventional penetration testing

Combining both methods works best in real life. AI penetration testing can handle a lot of work and do it over and over again. People are in charge of judgment and complex logic.

Example from the real world

A fintech company I worked with ran manual penetration tests every three months. The results were good, but breaches kept happening. The problem was timing. New features were released between test cycles, so they went untested for months.

After the company added AI penetration testing to its development pipeline, the system found an API authentication flaw within hours of the feature going live. A human tester reviewed the finding and confirmed the problem.

The fix took one day. Without AI testing, the flaw could have survived for months.

This isn't magic. It's all about timing and coverage.

Common myths about AI testing for security

Myth 1: AI penetration testing takes the place of people.

No verified authority, including NIST and OWASP, supports this. AI helps. People make the decisions.

Myth 2: AI tools can hack systems by themselves.

AI penetration testing operates under strict rules. Any activity outside the authorized scope is unethical and illegal.

Myth 3: AI always finds more weak spots.

It sometimes finds more noise. Validation is very important.

The technical basis for AI penetration testing

AI penetration testing uses several different technologies:

• Supervised and unsupervised machine learning

• Reinforcement learning for choosing an attack path

• Natural language processing for analyzing logs and responses

• Behavioral analytics to find strange responses

These seem complicated, but the idea is simple. Learn from data. Adapt your actions. Get better results. There is no official proof that these models are always correct. Training data quality and bias matter a great deal.
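To show what "behavioral analytics to find strange responses" can look like in its simplest form, here is a sketch that flags outliers by z-score. The response sizes are invented, and a z-score check is just one basic statistical technique that I am using as a stand-in for whatever models a given tool actually ships.

```python
from statistics import mean, stdev

def find_anomalies(samples, threshold=2.0):
    """Return (label, value) pairs more than `threshold` standard deviations from the mean."""
    values = [value for _, value in samples]
    if len(values) < 3:
        return []  # too little data to say what "normal" looks like
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []  # every response looked identical
    return [(label, value) for label, value in samples if abs(value - mu) / sigma > threshold]

# Made-up response sizes in bytes for ten requests to the same endpoint.
response_sizes = [
    ("req-1", 510), ("req-2", 512), ("req-3", 515), ("req-4", 509), ("req-5", 511),
    ("req-6", 513), ("req-7", 512), ("req-8", 510), ("req-9", 514), ("req-10", 4096),
]
anomalies = find_anomalies(response_sizes)
print(anomalies)
```

One caveat worth knowing: with small samples, a single outlier inflates the standard deviation, so the threshold has to stay modest. An unusually large response is not proof of a flaw, only a reason for a tester to look closer.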

Things you should know about risks and limits

AI penetration testing brings up new problems.

First, there are false positives. Automated systems might flag theoretical problems that can't actually be exploited. Without careful validation, this wastes time.

Second, there are ethical and operational limits. Misconfigured tools can cause outages. Responsible use requires rate limiting, scope control, and supervision.

Third, there is data privacy. AI systems may process sensitive information. Organizations must make sure they comply with applicable regulations.

Security researchers have documented these concerns, but the exact level of risk depends on how the tools are implemented.
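Scope control and rate limiting are easy to picture in code. The sketch below is one minimal way to enforce both before any request leaves the tool. The `ScopeGuard` class, the CIDR range, and the request rate are hypothetical and would come from the signed rules of engagement in a real test.

```python
import ipaddress
import time

class ScopeGuard:
    """Allow a request only if the target is in scope and the rate limit permits it."""

    def __init__(self, allowed_cidrs, max_requests_per_second, clock=time.monotonic):
        self.networks = [ipaddress.ip_network(cidr) for cidr in allowed_cidrs]
        self.min_interval = 1.0 / max_requests_per_second
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.last_request = None

    def in_scope(self, ip):
        """True if the address belongs to one of the authorized networks."""
        address = ipaddress.ip_address(ip)
        return any(address in network for network in self.networks)

    def allow(self, ip):
        """Check scope first, then enforce a minimum gap between requests."""
        if not self.in_scope(ip):
            return False
        now = self.clock()
        if self.last_request is not None and now - self.last_request < self.min_interval:
            return False
        self.last_request = now
        return True

# Hypothetical engagement: one internal /24, at most 5 requests per second.
guard = ScopeGuard(["10.0.0.0/24"], max_requests_per_second=5)
print(guard.allow("10.0.0.5"))     # in scope, first request
print(guard.allow("192.168.1.1"))  # out of scope, always refused
```

Putting the check in one place means every module of the tool has to pass through it, which is simpler to audit than scattered checks.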

How businesses should safely use AI penetration testing

1. Always make sure the scope and permission are clear.

2. Use both AI penetration testing and manual validation together.

3. Don't blame people; use the results to make things better.

4. Write down what you found and what you did to fix it.

5. Test again after making changes.

This method is in line with the best practices suggested by OWASP and enterprise security frameworks.
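The validate, document, and retest steps in the list above can be sketched as a tiny finding tracker. The status names and allowed transitions are my own assumptions, not part of any OWASP document or specific tool.

```python
from dataclasses import dataclass, field

# Hypothetical lifecycle: an AI-reported finding must be validated by a person
# before it can be fixed, and a fix must be retested before the finding closes.
ALLOWED_TRANSITIONS = {
    "reported": {"validated", "rejected"},
    "validated": {"fixed"},
    "fixed": {"retested", "validated"},  # back to validated if the retest fails
    "retested": set(),
    "rejected": set(),
}

@dataclass
class TrackedFinding:
    title: str
    status: str = "reported"
    history: list = field(default_factory=list)

    def advance(self, new_status, note):
        """Move to a new status and record who did what, refusing skipped steps."""
        if new_status not in ALLOWED_TRANSITIONS[self.status]:
            raise ValueError(f"cannot move from {self.status} to {new_status}")
        self.history.append((self.status, new_status, note))
        self.status = new_status

finding = TrackedFinding("API authentication flaw")
finding.advance("validated", "confirmed manually by a tester")
finding.advance("fixed", "patch deployed")
finding.advance("retested", "flaw no longer reproducible")
print(finding.status, len(finding.history))
```

Because `advance` refuses to skip steps, a finding cannot be marked fixed without a human validation entry in its history.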

AI penetration testing and compliance

AI penetration testing can support compliance efforts such as PCI DSS, SOC 2, and ISO 27001. But no standard currently says that AI-only testing is enough.

Auditors still want reports that have been reviewed by people. This is an important distinction that marketing claims often miss.

What the future holds for AI penetration testing

AI penetration testing will probably become more explainable in the next few years. Systems will show why a risk exists instead of just giving black-box scores.

There is also more discussion of regulation. There are no global rules for AI penetration testing as of January 2026, but AI governance frameworks are evolving.

You should carefully check any claim that the government requires AI testing.

Questions that people ask a lot

Is it legal to do AI penetration testing?

Yes, but only with clear permission. It is against the law to do it without permission.

Is it possible for small businesses to use AI penetration testing?

Yes. Many platforms are built for limited budgets, but they still need human oversight.

Does AI penetration testing find flaws that are not already known?

There is no proof that it reliably finds real zero-day flaws.

How often should AI penetration testing be done?

It works best when it runs continuously or alongside deployment cycles.

Last thoughts

AI penetration testing is not a fad, but it's also not magic. When used correctly, it helps security teams spot risks sooner and act more quickly. If you use it without thinking, it makes noise and gives you false confidence.

Balance is what really matters. Let AI do what it does best. Let people do what they do best. Together, they make systems stronger, though not unbreakable.
