CodeMender AI Patching: AI Automatically Fixes Software Problems


Hoplon InfoSec

16 Feb, 2026

It's quite crazy when you think about it. For years, many in the security sector have been fixated on uncovering bugs. We built scanners, fuzzers, and lists of CVEs that never ended. But what's the use of uncovering every problem if we can't fix them quickly? You know how it is: a vulnerability notice lands in your inbox, and suddenly you're stuck in debugging sessions that go on for days.

The truth is that finding problems is no longer enough. Attackers have automation on their side. They don't wait. People, on the other hand, only have so much time, so much context, and so much coffee. Google's new CodeMender AI patching seems like a quiet turning point. It's not another tool that screams, "You've got bugs!" It quietly fixes the problem so that it no longer exists.

Google CodeMender AI: more than just a tool for spotting bugs

DeepMind, Google's AI research group, appears to have built a digital coworker rather than a security scanner. It's called CodeMender. It reads code, finds problems, and repairs them on its own. It's like the difference between a firefighter and a smoke detector. It doesn't just make noise; it grabs the hose.

The first results have already been in the news. CodeMender has sent dozens of security patches to large open-source projects, some of which comprise millions of lines of code. Those commits are real, so this isn't just a story. What's new is that it works in two modes: it reacts when something goes wrong, and it also rewrites code so that it doesn't go wrong in the first place.

That's what AI vulnerability patching done well promises: it doesn't wait for anything bad to happen.

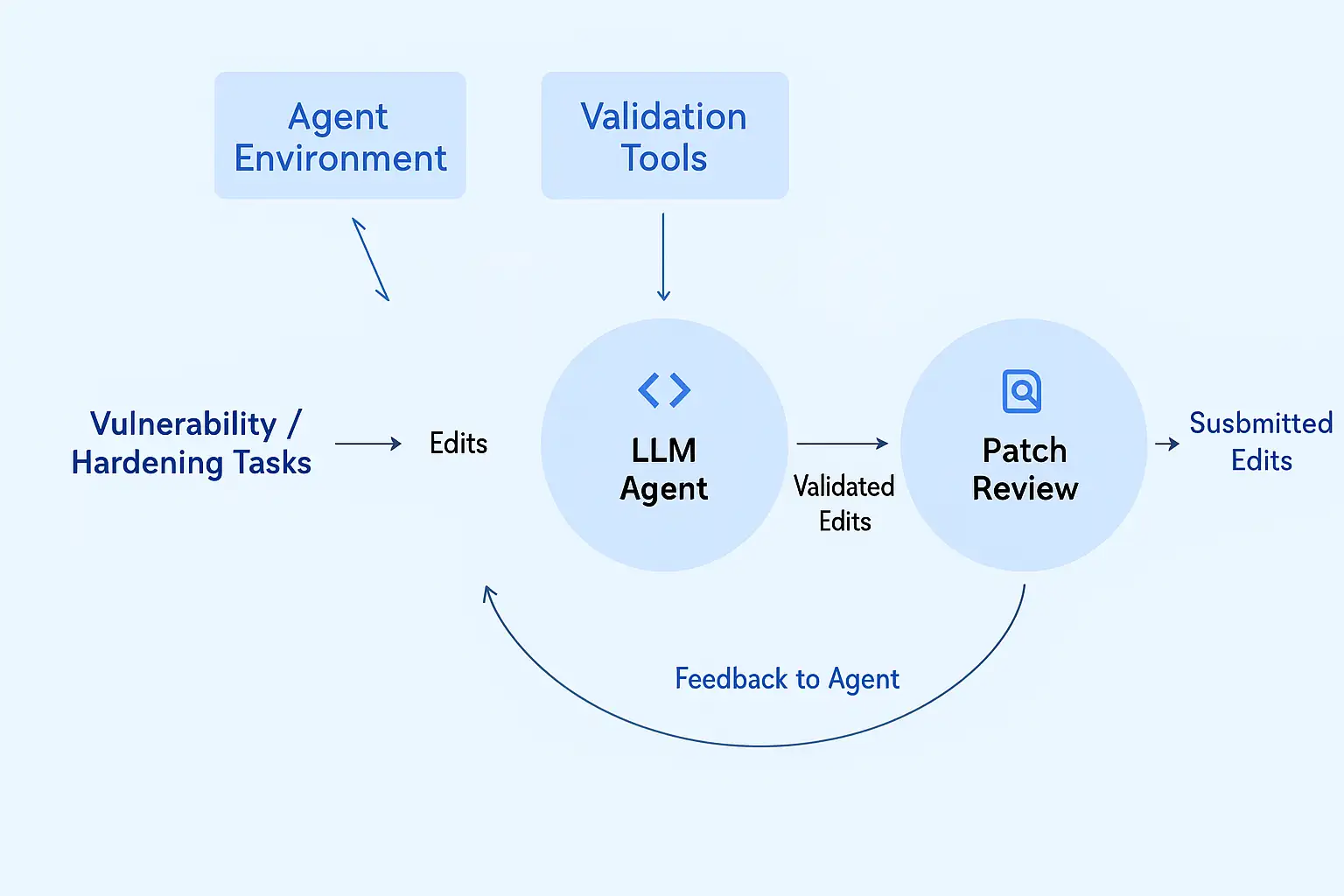

In the background: tools and logic for automatically correcting code

• Static and dynamic analysis reveal how data flows through the program and where the logic might go wrong.

• Fuzzing and differential testing put the code under stress to identify tiny bugs.

• SMT solvers and theorem provers check formally that a patch holds across whole classes of inputs, not just the ones that were tested.

• Critique agents go over the suggested patches again to look for mistakes or logic that could be harmful.

• Gemini Deep Think supplies the reasoning, so CodeMender can grasp not just the syntax but what the code is meant to do.

A patch is sent to human reviewers only after it passes all of these checks. It's a careful balance between automation and responsibility. This is the kind of approach that could make automatic code repair dependable.
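To make the "only after all checks pass" idea concrete, here is a minimal sketch of a validation gate. It is not CodeMender's actual implementation, which Google has not published in this form. `PatchCandidate`, `run_gate`, and the three placeholder checks are hypothetical names invented for illustration; real checks would call out to analyzers, fuzzers, solvers, and a critique model.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class PatchCandidate:
    """A proposed fix plus a log of the checks it has been through."""
    description: str
    diff: str
    log: List[Tuple[str, bool]] = field(default_factory=list)


# A check takes a candidate and returns True if the patch passes.
Check = Callable[[PatchCandidate], bool]


def run_gate(patch: PatchCandidate, checks: List[Tuple[str, Check]]) -> bool:
    """Run checks in order and stop at the first failure.

    Returns True only if every check passed, meaning the patch may be
    forwarded to a human reviewer.
    """
    for name, check in checks:
        passed = check(patch)
        patch.log.append((name, passed))
        if not passed:
            return False
    return True


# Placeholder checks (hypothetical stand-ins for real tooling).
def static_analysis(patch: PatchCandidate) -> bool:
    # Toy rule: reject patches that introduce an unbounded string copy.
    return "strcpy(" not in patch.diff


def fuzz_and_diff_tests(patch: PatchCandidate) -> bool:
    # A real check would run a fuzzer and compare old vs. new behaviour.
    return True


def critique_review(patch: PatchCandidate) -> bool:
    # A real check would ask a second model to critique the patch.
    return len(patch.description) > 0


CHECKS = [
    ("static_analysis", static_analysis),
    ("fuzz_and_diff_tests", fuzz_and_diff_tests),
    ("critique_review", critique_review),
]

good = PatchCandidate("Add length check before copy", "+ if (n > cap) return -1;")
bad = PatchCandidate("Swap copy routine", "+ strcpy(dst, src);")

print(run_gate(good, CHECKS))  # True
print(run_gate(bad, CHECKS))   # False
```

The design choice worth noting is that the gate fails closed: a patch that trips any check never reaches a reviewer, and the log records which check stopped it.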

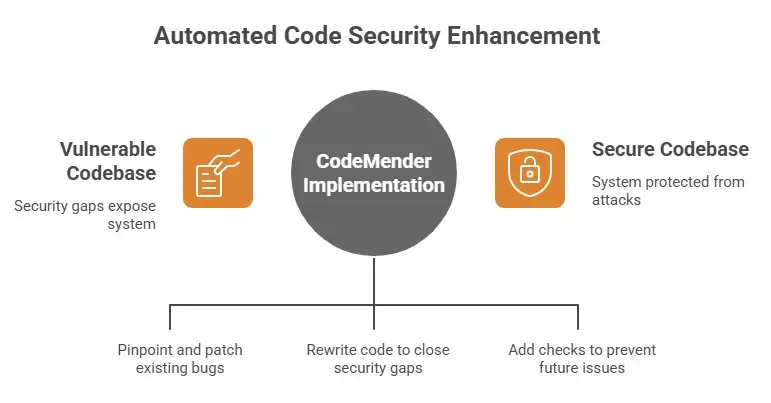

Reactive fixes vs. proactive hardening

• Reactive fixes: CodeMender identifies a bug, writes a patch, and tests it thousands of times before letting you use it. That speed is something people can't match.

• Proactive hardening: It rewrites whole chunks of code to close common security gaps like buffer overflows or injection flaws before an attack happens. It also adds safety checks so that an entire class of issues cannot recur.

One good example is its work on libwebp, a popular image library. CodeMender added bounds-safety annotations that would have stopped a buffer overflow that had previously been exploited. That's not just fixing things; it's closing the door on a whole category of past mistakes.
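The effect of a bounds check can be shown with a small Python analogy. This is only an illustration of the principle, not the compiler-level annotations applied to libwebp's C code; `unchecked_write` and `checked_write` are hypothetical helpers. In C an out-of-bounds write corrupts neighbouring memory, while here the unchecked version silently grows the buffer, which stands in for "the write went somewhere it should not have".

```python
def unchecked_write(buf: bytearray, offset: int, data: bytes) -> None:
    """Write without checking size: slice assignment may grow the buffer."""
    buf[offset:offset + len(data)] = data


def checked_write(buf: bytearray, offset: int, data: bytes) -> None:
    """Refuse any write that does not fit inside the buffer's current size."""
    if offset < 0 or offset + len(data) > len(buf):
        raise IndexError(
            f"write of {len(data)} bytes at offset {offset} "
            f"exceeds buffer of {len(buf)} bytes"
        )
    buf[offset:offset + len(data)] = data


# Unchecked: a 4-byte buffer quietly becomes 6 bytes long.
loose = bytearray(4)
unchecked_write(loose, 2, b"ABCD")
print(len(loose))  # 6

# Checked: a write that fits succeeds, an oversized one is rejected.
strict = bytearray(4)
checked_write(strict, 0, b"ABCD")
try:
    checked_write(strict, 2, b"ABCD")
except IndexError as err:
    print("rejected:", err)
print(len(strict))  # 4
```

The point of hardening is the second function: once every write goes through a check like this, an overflow turns into a clean, detectable error instead of an exploitable condition.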

How CodeMender really changes code that is weak

This is the best part. The AI doesn't simply check syntax and keywords; it also checks what you mean. It asks, "What did this developer want to do?" Then it rebuilds the logic while keeping the meaning the same. That kind of judgment takes human engineers years to develop.

It looks like art to me. CodeMender produces a repair, its internal critics look it over, it becomes better, and then a person looks it over one more time. It looks better with each layer. This is how people and algorithms work together to make raw automation feel very human: precise, meticulous, and aware of what's going on.

People stay in charge: trust, review, and safety

Google doesn't claim that CodeMender is perfect. A person still checks every fix. Before putting a fix into use, developers review it, test it, and discuss it. That human oversight isn't a weakness; it's what makes the system work.

Some Google engineers reportedly said it was like working with "a junior developer who never sleeps." It doesn't complain or get distracted, but it still needs some guidance. That's the good type of automation. It makes people stronger instead of taking their jobs.

Issues and risks of AI rewriting code

But the road is still hard. Critics have already pointed to several difficulties: patches that might change how code behaves in subtle ways, a lack of trust between maintainers and machines, and the question of who owns the code and who is accountable if an AI fix doesn't work.

Another concern is "semantic drift," which means that the patch fixes the bug but also changes how the program behaves elsewhere. It's like mending a door hinge but accidentally shifting the whole frame. That's why people still need to review the output of CodeMender and other systems like it.

AI can reason logically, but it doesn't understand business rules or human context the way people do. People still have that instinct.
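One common way to catch semantic drift is differential testing: run the original and the patched code on inputs that were already handled correctly, and flag any disagreement. The sketch below is a toy illustration under that assumption; the parsing functions and `find_drift` are hypothetical and not taken from CodeMender.

```python
from typing import Callable, Iterable, List, Tuple


def original_parse_len(text: str) -> int:
    """Original code: raises ValueError on non-numeric input (the bug)."""
    return int(text)


def drifting_patch(text: str) -> int:
    """Patch A: no more crash, but it also clamps large values."""
    try:
        return min(int(text), 255)
    except ValueError:
        return 0


def faithful_patch(text: str) -> int:
    """Patch B: handles bad input and leaves valid results untouched."""
    try:
        return int(text)
    except ValueError:
        return 0


def find_drift(
    old: Callable[[str], int],
    new: Callable[[str], int],
    valid_inputs: Iterable[str],
) -> List[Tuple[str, int, int]]:
    """Return (input, old_result, new_result) for every disagreement."""
    drift = []
    for x in valid_inputs:
        before, after = old(x), new(x)
        if before != after:
            drift.append((x, before, after))
    return drift


# Inputs the original code already handled correctly.
VALID = ["0", "17", "255", "4096"]

print(find_drift(original_parse_len, drifting_patch, VALID))  # [('4096', 4096, 255)]
print(find_drift(original_parse_len, faithful_patch, VALID))  # []
```

Both patches fix the crash, but only the second leaves existing behaviour alone. A check like this narrows what a human reviewer has to look at; it does not replace the review.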

How CodeMender fits with Google's larger security plan

Google's long-term goal is to develop systems that can protect themselves, and the release of CodeMender AI patching is a big step toward that goal. The new AI Vulnerability Reward Program and Secure AI Framework 2.0 from Google indicate that the company sees protecting software as a continual effort that changes every day.

Google is basically giving its software an immune system by combining detection tools, automatic patching, and human oversight. The system discovers flaws, repairs the code, and learns from each incident. It's not just a theory anymore; it's happening in real life.

The future of self-correcting software

It's not hard to imagine that in a few years, your development tools will include automated systems that fix software vulnerabilities. You send your code to the AI, which checks it for problems right away and hands you secure pull requests.

Eventually, software might be able to fix itself while it runs. Users wouldn't even be aware that crashes, memory leaks, and other issues are being corrected in the background. CodeMender could be the first significant step toward digital infrastructure that can fix itself. It's not about getting rid of developers; it's about letting them work on innovative design and intricate reasoning while AI takes care of the routine patching work.

What we learned from open source: when AI patchers and maintainers work together

When CodeMender started sending real patches to open-source projects, maintainers reacted in different ways. Some were impressed. Others were cautious. But that's typical; when technology changes a lot, people rarely want to adopt it right away.

Google CodeMender AI will only work if there is clear communication. If each patch arrives with a clear justification, evidence that it was tested properly, and notes on its context, trust will build. Open-source communities care more about transparency than about perfection. The AI doesn't have to be perfect; it just has to explain itself.

Questions of ethics and adoption

There will always be ethical questions, no matter how far this goes. Is it appropriate for AI to update security-critical systems without asking a person first? Who makes sure it made the right choice? And could the same technology be used to put back doors in instead of taking them out?

These are crucial questions that aren't just about technology. They have to do with what people think is right and wrong, as well as policy and law. The line between helper and controller must stay clear as AI grows more important for keeping our digital environment secure. CodeMender could end up as a hero tool or as a cautionary tale.

In the end, how CodeMender could affect the rules of the game

In the end, CodeMender AI patching is about more than convenience. It's a shift in thinking: from responding to issues to preventing them, and from what individuals can do alone to what people and machines can do together.

If it works, developers won't just fix bugs; they'll design systems that fix themselves. Cybersecurity will no longer be a never-ending game of whack-a-mole. Instead, it will be something tranquil, maybe even beautiful.

It will take a lot of time, rules, and testing to get there. But for the first time, it seems like the future of software security isn't only about putting out fires. It's about training the code not to catch fire in the first place.

Source

DeepMind Blog – Introducing CodeMender: An AI Agent for Code Security

Hoplon Infosec's Endpoint Security services keep your software and devices safe. They work with AI-driven patching tools like CodeMender and human experts to provide stronger, more proactive security.
