What You Must Know About 18 Hacked npm Packages With 2B Weekly Downloads

Hoplon InfoSec

09 Sep, 2025


The developer community got a rude awakening when a long-trusted maintainer was phished and attackers pushed malicious code into a group of widely used libraries. The breach did more than break builds: it quietly tried to steal money by altering code that routinely ends up in browser bundles and developer tools. The attack shows that even small utilities can cause real-world losses once they are compromised, and that code we all take for granted in our applications can suddenly behave like malware. In forum threads, people are summing it up with a blunt, alarming phrase: “hacked npm packages.”

Behind the headlines, the reaction was a mix of anger and cold technical assessment. Can you still trust a global package registry? Which checks failed, and which ones kept the damage from being worse? Those are the questions teams are asking as they rush to comb through repositories and CI logs.

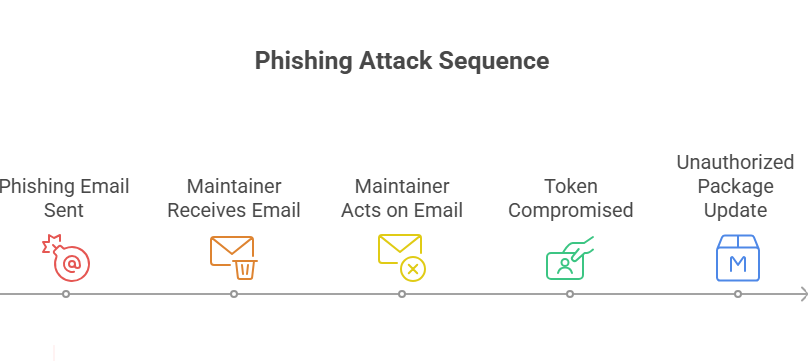

What really happened: the timeline of the compromise

On September 8, 2025, security teams began noticing a supply chain problem: a trusted npm maintainer account had been compromised, and modified versions of popular packages had been published. According to researchers and vendors, the attackers used a convincing phishing message to obtain credentials and a publishing token. They then published malicious releases containing code that hijacks cryptocurrency transactions in the browser and steals developer secrets in build contexts.

The event unfolded very quickly. Researchers who analyzed the packages found that the injected payloads were narrowly targeted: they were built to run in client-side bundles and watch for wallet signatures and transaction flows. Vendors and platforms identified the attack vector and issued emergency guidance to their customers. npm removed the infected versions once the problem was found, but they had been available long enough that wide scans and fixes were still required.

Which packages were affected and why they matter

Public write-ups and vendor advisories identified a group of foundational libraries used across build tools and runtime stacks. The modified packages included well-known utilities for terminal formatting, color, and debug output in JavaScript environments. Researchers found that 18 packages were affected, including chalk, debug, and related modules such as ansi-styles, strip-ansi, and supports-color.

People rarely think about these components when they think about security. They are not headline features; they are dependency plumbing. That is exactly what makes the compromise so dangerous: the change flows down dependency trees into thousands or millions of applications, and often no one inspects the exact code that ends up in a production bundle.

How the attackers got in: phishing and token theft explained

Investigations show that the phishing message impersonated npm support and came from a lookalike domain, which lent it both urgency and apparent legitimacy. The email asked the maintainer to reauthenticate or hand over a session token. With that token, the attackers could publish new versions of the packages. This is social engineering that exploits trust in a process people assume is safe.

This isn’t a new playbook; attackers have simply refined it. They spend time on reconnaissance and craft emails that are hard to spot. When maintainers are rushed, or the message looks exactly like a genuine support prompt, mistakes happen, and the attacker wins when that mistake yields a publishing token or session cookie.

What the malicious code did: targeting wallets and web apps

Security companies say the injected code focused mainly on Web3 wallet interactions. In the browser, it looked for wallet addresses and signatures and tried to redirect transactions to attacker-controlled addresses. In developer environments, the payload also tried to steal tokens and build secrets and to take other steps that could enable lateral movement. The combination was designed both to steal money quickly and to gain longer-term access to systems.

The payloads were small and carefully crafted, which helped them evade some signature-based scanners at first. When malicious code lives inside a legitimate dependency, it inherits that legitimacy. This is why supply chain compromises are more dangerous than classic malware delivered as an email attachment.

Why this matters: the reach of common dependencies

Because these packages are mostly pulled in as transitive dependencies, they are downloaded billions of times every week. Other libraries and frameworks depend on them, so they end up in your project even if you never installed them directly. A single compromised utility can therefore reach an enormous range of programs, and that combined weekly download count is what made remediation so large and so urgent.

You might assume that only “big” packages are dangerous. The opposite is true: small, ubiquitous utilities amplify risk precisely because everyone uses them. The dependency graph is like a spider web: when one strand breaks, the vibrations travel far beyond the point of damage.

Who is most at risk: users, projects, and businesses

Solo developers and small teams that rely on automatic updates are at risk because they often accept new versions without careful review. Large companies with heavy automation and continuous deployment can also be hit at scale if they don’t gate transitive updates. If your frontend code interacts with cryptocurrency wallets or Web3 primitives, treat this as a high-risk situation: if wallet interactions are redirected or signed transactions are intercepted, those projects can lose money immediately.

Developers who keep tokens in local environments or rely on broadly scoped credentials are at even greater risk. A single leaked token from a developer laptop or CI system is enough for attackers to start pivoting. That is why credential hygiene matters for individuals and businesses alike.

Tools and signs to check whether your project is affected

Start with your lockfile. Find the exact version strings published during the incident window and check them against your lockfile (package-lock.json, yarn.lock, or pnpm-lock.yaml). Use dependency scanners, vendor advisories, and public lists of indicators of compromise (IoCs) to find flagged versions. Many security companies have published indicators and scanning signatures that make this quick.
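
For npm projects, this check is easy to script. The following is a minimal sketch, assuming a `package-lock.json` with lockfileVersion 2 or 3, which records every installed package under a `packages` map keyed by install path. The two entries in `FLAGGED` are versions widely reported as malicious in this incident and are included only as an example; replace them with the complete list from a current advisory. yarn.lock and pnpm-lock.yaml use different formats and would need their own parsers.

```python
import json
from pathlib import Path

# Example entries only: versions widely reported as malicious in this incident.
# Replace with the full, current list from a vendor or npm advisory.
FLAGGED: dict[str, set[str]] = {
    "chalk": {"5.6.1"},
    "debug": {"4.4.2"},
}


def find_flagged(lock: dict, flagged: dict[str, set[str]]) -> list[tuple[str, str]]:
    """Return (install path, version) for each flagged package in a parsed
    package-lock.json that uses lockfileVersion 2 or 3."""
    hits = []
    for path, meta in lock.get("packages", {}).items():
        if not path:
            continue  # the "" key describes the root project itself
        # Keys are install paths such as "node_modules/a/node_modules/debug";
        # the package name is everything after the last "node_modules/".
        name = path.rsplit("node_modules/", 1)[-1]
        version = meta.get("version")
        if version in flagged.get(name, set()):
            hits.append((path, version))
    return hits


def scan_lockfile(lock_path: str = "package-lock.json") -> list[tuple[str, str]]:
    """Load a lockfile from disk and check it against FLAGGED."""
    lock = json.loads(Path(lock_path).read_text(encoding="utf-8"))
    return find_flagged(lock, FLAGGED)
```

Calling `scan_lockfile()` from the project root returns an empty list when none of the listed versions are installed. Nested paths such as `node_modules/foo/node_modules/debug` are checked too, because a compromised version usually arrives through another dependency rather than directly.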

Beyond checking versions, scan your built bundles. Wallet-hijacking code often leaves telltale strings behind or makes unusual network calls at runtime. If your frontend signs or prepares blockchain transactions, run an isolated end-to-end test with a throwaway wallet to confirm that transactions go where you intend.
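
A plain string search over the build output is a reasonable first pass. This sketch walks a build directory (for example `dist/`, an assumption about your project layout) and reports which JavaScript files contain any of the indicator strings you pass in. It takes the indicators as a parameter on purpose: use the IoCs published in vendor advisories rather than guessing at them. Minification can rename identifiers, so a clean result is evidence, not proof.

```python
from pathlib import Path


def scan_bundles(dist_dir: str, indicators: list[str],
                 suffixes: tuple[str, ...] = (".js", ".mjs", ".cjs")) -> dict[str, list[str]]:
    """Map each built JavaScript file under dist_dir to the indicators it contains."""
    findings: dict[str, list[str]] = {}
    for path in sorted(Path(dist_dir).rglob("*")):
        # Only look at regular files with a JavaScript extension.
        if not path.is_file() or path.suffix not in suffixes:
            continue
        # errors="replace" keeps odd bytes in a bundle from aborting the scan.
        text = path.read_text(encoding="utf-8", errors="replace")
        hits = [ioc for ioc in indicators if ioc in text]
        if hits:
            findings[str(path)] = hits
    return findings
```

A typical call is `scan_bundles("dist", iocs)`, where `iocs` is the list copied from an advisory; an empty dictionary means no file matched.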

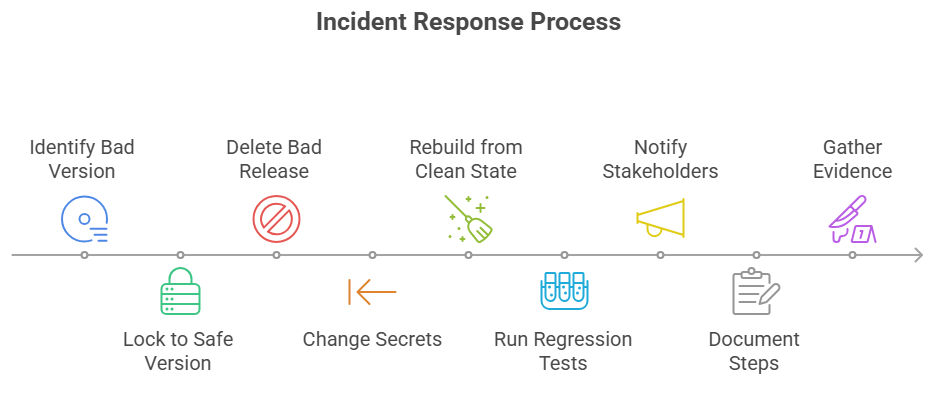

Quick steps to fix the problem: a short incident-response checklist

If you find a malicious version in your dependency tree, pin your project to a safe version and purge the malicious release from CI caches and artifact stores. Rotate any secrets that may have been present in builds or on developer machines. Rebuild from a known clean state and run regression tests to confirm that everything behaves as expected. Vendors and hosting platforms have also published detailed containment playbooks.
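
With npm 8.3 or later, one way to force a safe version everywhere in the tree, including transitive copies, is the `overrides` field in `package.json`. This sketch adds such entries programmatically. `pins` maps package names to the known-good versions you have confirmed from an advisory; the values are yours to supply and are not suggested here.

```python
import json
from pathlib import Path


def add_overrides(manifest: dict, pins: dict[str, str]) -> dict:
    """Return a copy of a package.json dict whose npm "overrides" field
    forces each pinned package to the given version throughout the tree."""
    updated = dict(manifest)                        # leave the caller's dict untouched
    overrides = dict(updated.get("overrides", {}))  # keep any existing overrides
    overrides.update(pins)
    updated["overrides"] = overrides
    return updated


def pin_in_file(manifest_path: str, pins: dict[str, str]) -> None:
    """Rewrite package.json on disk with the overrides applied."""
    path = Path(manifest_path)
    manifest = json.loads(path.read_text(encoding="utf-8"))
    path.write_text(
        json.dumps(add_overrides(manifest, pins), indent=2, ensure_ascii=False) + "\n",
        encoding="utf-8",
    )
```

After writing the file, run `npm install` to regenerate the lockfile and `npm ls <package>` to confirm that only the pinned version remains. If a pinned package is also a direct dependency, npm expects the override to agree with that dependency's version spec, so update both.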

Notify internal stakeholders such as security and product teams, and record each remediation step for a post-incident review. Gathering evidence matters: note when the malicious version entered your builds and which systems may have accessed the exposed credentials, so you can plan next steps.
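
If your CI keeps a record of what each build resolved, the exposure window can be computed instead of reconstructed from memory. This sketch assumes a hypothetical record format (a build `id`, a `time`, and a `deps` map of package name to resolved version); adapt it to whatever your CI system or artifact store actually keeps.

```python
from datetime import datetime
from typing import Optional


def exposure_report(builds: list[dict], package: str,
                    bad_versions: set[str]) -> Optional[dict]:
    """Summarize which builds shipped a flagged version of `package`.

    Each build record is assumed (hypothetically) to look like
    {"id": str, "time": datetime, "deps": {package_name: resolved_version}}.
    Returns None when no build included a flagged version.
    """
    # Keep only affected builds, ordered from earliest to latest.
    hits = sorted(
        (b for b in builds if b["deps"].get(package) in bad_versions),
        key=lambda b: b["time"],
    )
    if not hits:
        return None
    first: datetime = hits[0]["time"]
    last: datetime = hits[-1]["time"]
    return {"builds": [b["id"] for b in hits], "first": first, "last": last}
```

The `first` and `last` timestamps bound the period whose deployments, logs, and credentials deserve the closest look.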

Lockfiles, pinning, and audits are the best ways to keep your dependencies clean

To avoid surprises, use lockfiles and reproducible builds. In production builds, prefer pinned versions so that new transitive updates don’t flow automatically into deployed code. For critical services, add a dependency approval step so that a person reviews changes to important dependency trees before they go live. That friction slows attackers down and gives you time to notice unusual version bumps.
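
A small check can keep version ranges out of a critical service's `package.json`. This sketch flags any direct dependency whose spec is not an exact SemVer version, so `^4.4.1`, `~1.2.3`, `*`, and `latest` all fail. Pinning direct dependencies does not pin transitive ones; that is the lockfile's job, which is why CI should install with `npm ci` (which installs exactly what the lockfile records) rather than `npm install`. Setting `save-exact=true` in `.npmrc` makes npm record exact versions by default.

```python
import re

# Exact SemVer such as "4.4.1" or "1.0.0-beta.2"; ranges like "^4.4.1",
# "~4.4.1", "*", "latest", or ">=4" do not match.
EXACT = re.compile(r"^\d+\.\d+\.\d+(?:-[0-9A-Za-z.-]+)?(?:\+[0-9A-Za-z.-]+)?$")


def unpinned(manifest: dict,
             sections: tuple[str, ...] = ("dependencies", "devDependencies",
                                          "optionalDependencies")) -> list[str]:
    """List "section: name@spec" for every dependency not pinned to an exact version."""
    problems = []
    for section in sections:
        for name, spec in manifest.get(section, {}).items():
            if not EXACT.match(spec):
                problems.append(f"{section}: {name}@{spec}")
    return problems
```

Run against a parsed `package.json` in CI, a non-empty result can fail the build until someone either pins the dependency or approves the exception.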

Run automated dependency audits regularly and supplement them with periodic manual reviews. Automation quickly finds known-bad versions, but a human reviewer can catch context-specific problems, such as a small utility suddenly making an extra network call that changes how the program behaves.
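
To give the human reviewer something concrete, a pull-request check can print exactly which installed versions moved. This sketch compares two parsed `package-lock.json` files (lockfileVersion 2 or 3) and lists added, removed, and changed packages by install path. How you fetch the old and new lockfiles, for instance from the base and head branches, is left to your pipeline.

```python
def lock_versions(lock: dict) -> dict[str, str]:
    """Map install path -> version from a package-lock.json (v2/v3) dict."""
    return {
        path: meta["version"]
        for path, meta in lock.get("packages", {}).items()
        if path and "version" in meta  # skip the root "" entry and links
    }


def diff_locks(old: dict, new: dict) -> dict[str, list[str]]:
    """Summarize version movement between two lockfiles for human review."""
    before, after = lock_versions(old), lock_versions(new)
    return {
        "added": sorted(f"{p}@{after[p]}" for p in after.keys() - before.keys()),
        "removed": sorted(f"{p}@{before[p]}" for p in before.keys() - after.keys()),
        "changed": sorted(
            f"{p}: {before[p]} -> {after[p]}"
            for p in before.keys() & after.keys()
            if before[p] != after[p]
        ),
    }
```

A patch-level bump to a deep utility that nobody asked for is exactly the kind of line this output surfaces for a reviewer to question.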

Two-factor authentication, tokens, and least privilege: keeping maintainer accounts safe

Maintainers should treat publishing tokens like keys to a vault. Use hardware-backed two-factor authentication wherever possible; security keys resist phishing because they will not authenticate to a lookalike domain. Rotate tokens often, and never reuse the same token across services. Don’t paste session tokens into web forms unless you are certain where the request came from and have checked the domain by hand.
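
Checking a domain by hand is easy to get wrong, because a URL that merely contains “npmjs” proves nothing. This sketch accepts a URL only if it uses HTTPS and its host is exactly a trusted domain or a subdomain of one, so lookalikes such as `npmjs.help` or `npmjs.com.evil.example` fail. The `TRUSTED_DOMAINS` tuple is an assumption to adjust for the registry you actually use.

```python
from urllib.parse import urlsplit

# Domains you expect npm account prompts to come from (assumption: adjust
# for your registry). Lookalikes such as "npmjs.help" must not pass.
TRUSTED_DOMAINS = ("npmjs.com", "npmjs.org")


def is_trusted_url(url: str, trusted: tuple[str, ...] = TRUSTED_DOMAINS) -> bool:
    """True only for https URLs whose host is a trusted domain or a subdomain of one."""
    parts = urlsplit(url)
    # .hostname drops any "user@" prefix and lowercases; strip a trailing dot.
    host = (parts.hostname or "").rstrip(".")
    if parts.scheme != "https" or not host:
        return False
    return any(host == d or host.endswith("." + d) for d in trusted)
```

Note the `www.npmjs.com@evil.example` trick: everything before `@` is user info, so the real host is `evil.example`, and the check correctly rejects it.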

Move publishing rights to organization-controlled accounts with narrowly scoped permissions. For CI workflows, use short-lived tokens and restrict which machines or IP ranges can publish. Where possible, enable publish-time checks so that a second person must approve significant changes.
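
Restricting which machines can publish can also be enforced inside the pipeline, as a gate that runs before the publish step. This sketch uses Python's standard `ipaddress` module to check a runner's address against a hypothetical allowlist of CIDR ranges (the ranges shown are private and documentation examples, not recommendations). Restrictions attached to the token on the registry side are stronger where available, because an attacker holding a stolen token never runs your pipeline's checks.

```python
import ipaddress

# Hypothetical allowlist of CI egress ranges permitted to publish.
ALLOWED_PUBLISH_RANGES = ["10.20.0.0/16", "203.0.113.0/24"]


def may_publish(runner_ip: str, allowed: list[str] = ALLOWED_PUBLISH_RANGES) -> bool:
    """True if runner_ip falls inside one of the allowed CIDR ranges.

    An IPv6 address never matches an IPv4 range (and vice versa), so
    mixed-version input simply returns False rather than raising.
    """
    ip = ipaddress.ip_address(runner_ip)
    return any(ip in ipaddress.ip_network(cidr) for cidr in allowed)
```

In a workflow, the publish job would call `may_publish()` with the runner's egress address and exit non-zero when it returns False.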

CI/CD and build supply chain protections: safe pipelines

Harden your CI by caching trusted artifacts and inspecting build outputs for unexpected network calls and signatures. Signing artifacts and verifying those signatures before deployment adds a strong integrity check. Keep secrets in build environments to a minimum and use short-lived credentials, so that an attacker who gains brief access gets little of value.
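
Full signing needs dedicated tooling (for example Sigstore, or npm's provenance attestations, which `npm audit signatures` can check), but the underlying integrity idea is simple to sketch. npm lockfiles record each tarball's hash as a Subresource Integrity (SRI) string of the form `sha512-<base64 digest>`; this sketch computes and verifies such strings for any artifact before deployment. A matching hash proves the file is unchanged since the hash was recorded, not who produced it, which is what a signature adds.

```python
import base64
import hashlib
from pathlib import Path

# Algorithms defined for SRI; weaker entries such as "sha1-..." are ignored.
SRI_ALGORITHMS = ("sha256", "sha384", "sha512")


def sri_digest(data: bytes, algorithm: str = "sha512") -> str:
    """Return an SRI string such as "sha512-<base64 digest>" for data."""
    digest = hashlib.new(algorithm, data).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def verify_artifact(path: str, expected: str) -> bool:
    """True if the file matches any supported hash in an SRI string.

    SRI strings may list several space-separated hashes; entries using an
    algorithm outside SRI_ALGORITHMS are skipped, so a sha1-only entry
    returns False and should be reviewed by hand.
    """
    data = Path(path).read_bytes()
    for entry in expected.split():
        algorithm = entry.split("-", 1)[0]
        if algorithm in SRI_ALGORITHMS and sri_digest(data, algorithm) == entry:
            return True
    return False
```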

Treat CI runners as production hosts. If runners have easy access to credentials, a single compromised build can leak secrets widely. Limit who and what can read secrets, and review build logs for anomalies such as unexpected outbound connections during builds.
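
Reviewing logs by eye does not scale, so a pipeline step can flag outbound connections to hosts outside an allowlist. This sketch assumes a hypothetical egress-proxy log with lines such as `CONNECT registry.npmjs.org:443`; both that log format and `ALLOWED_HOSTS` are placeholders for your own setup.

```python
import re

# Hypothetical egress-proxy log line, e.g.
# "2025-09-08T14:03:11Z CONNECT registry.npmjs.org:443"
CONNECT_LINE = re.compile(r"\bCONNECT\s+([A-Za-z0-9.-]+):(\d+)")

# Hypothetical allowlist of hosts a Node.js build is expected to reach.
ALLOWED_HOSTS = {"registry.npmjs.org", "github.com", "objects.githubusercontent.com"}


def unexpected_connections(log_text: str, allowed: set[str] = ALLOWED_HOSTS) -> list[str]:
    """Return sorted, de-duplicated "host:port" pairs not on the allowlist."""
    seen = set()
    for match in CONNECT_LINE.finditer(log_text):
        host, port = match.group(1).lower(), match.group(2)
        if host not in allowed:
            seen.add(f"{host}:{port}")
    return sorted(seen)
```

The allowlist matches hosts exactly rather than whole subdomain trees, which is stricter but makes every permitted destination explicit and easy to audit.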

When to tell users and stakeholders: openness and honesty

If the compromise could affect customers, publish a plain advisory that explains the problem, lists the affected versions, and outlines the remediation steps. Coordinate with the registry, major vendors, and security researchers so that the message is accurate and reaches as many people as possible.

Give downstream projects timelines and indicators of compromise so they can check their own environments. Clear, honest communication reduces confusion and helps the ecosystem move smoothly from panic to remediation.

Lessons for the open-source community: trust, mentorship, and funding

Open source works because people donate their time and resources, but that also means a handful of maintainers control critical pieces of infrastructure. This incident is a reminder that maintainers need funding and practical support. Companies should pay for security reviews, give maintainers access to safer publishing models, and help newer maintainers learn secure practices.

Contributors can help by reviewing changes, sponsoring maintainers, and building tools that make secure practices easier for everyone. When people work together, the odds that a single successful phishing email causes widespread damage go down.

What to do right now: practical next steps and takeaways

Check your repositories and CI artifacts for the flagged versions, and remove any hacked npm packages you find or pin them to known-good versions. Rotate tokens and secrets that were present in builds, then rebuild from trusted states. Add a short checklist to your onboarding and release playbooks so that teams are less likely to accept risky transitive updates in the future.

If you maintain packages, harden your publishing process and ask for help when you need it. If you consume packages, treat dependencies as risks rather than mere conveniences. When the next supply chain shock hits, the best defense is a set of small, consistent habits, such as version pinning and reproducible builds, together with a community culture that supports maintainers instead of leaving them on their own.

The npm package breach shows how risky supply chain attacks can be. Hoplon InfoSec’s Web Application Security Testing helps identify hidden flaws in your apps and dependencies, keeping your software supply chain secure.

