ISO 42001: Master Responsible and Trustworthy AI for Your Business

Hoplon InfoSec

11 Oct, 2025

What does ISO 42001 mean?

A quiet revolution is under way in boardrooms around the world. Cybersecurity and data protection used to dominate the agenda; today the conversation has shifted to how to use AI responsibly. That is where the ISO 42001 AI management service comes in.

ISO 42001 (formally ISO/IEC 42001) is a management system standard that enterprises can use to make sure they use AI in a responsible, transparent, and well-documented way. It doesn't tell you which technology to use. Instead, it shows you how to govern that technology so that it is safe, ethical, and useful. The same management principles that made ISO 27001 a leading global standard for information security are now being applied to AI.

The standard sets out requirements for continual improvement, leadership commitment, policies, and lifecycle management. It is not only for big tech: the ISO 42001 AI management solution lets you link innovation with accountability in any field, including healthcare, retail, and finance.

Why ISO 42001 Is Important Now

AI is no longer confined to research labs. It helps recruit people, approve loans, and even produce marketing material. But as capabilities grow, so do concerns. Customers and regulators are both raising serious questions about safety, bias, and transparency.

The ISO 42001 AI management solution gives businesses the tools they need to answer those questions with confidence. It helps you prove that your AI is not just powerful, but also managed, checked, and in line with human values. This kind of proof is no longer optional in a world where one mistake can break trust. It also gives you an advantage over your rivals.

Basic Ideas and Layout

The structure of ISO 42001 is simple to follow. Like other management standards, it is built on leadership, planning, support, operation, and improvement. The distinction is in what it looks at. It doesn't only look at security controls; it also looks at things like governance, fairness, openness, and risk across the life of the system.

When a business signs up for the ISO 42001 AI management service, it commits to understanding its AI systems end to end, from how they are built to how they are used and eventually retired. That lifecycle view makes sure that oversight doesn't stop when a model is released. It continues, adapting as the technology does.

Scope and Applicability

You might think this standard only applies to huge IT corporations, but that's not the case. ISO 42001 was designed to scale. The same principles apply whether you are building a chatbot or using predictive analytics for logistics.

The flexibility of the ISO 42001 AI management service is its best feature. You can apply it first to the parts of your business that actually build or use AI, and then extend it as you go. Many companies start with one project or department, learn from it, and then expand to other parts of the organization.

Key Clauses and Controls

Like other ISO management standards, ISO 42001 includes specific clauses and controls. These help you figure out what to do in practice, such as setting policies, monitoring performance, and assessing risks.

Some of the most important parts deal with fairness, transparency, and accountability. They ask you to think about who your system affects, how decisions are made, and what you'll do if something goes wrong. Following these requirements isn't about making things more complicated. It's about making things traceable: being able to show, not just tell, how your AI works.

Managing Risk

The risk posed by an AI system changes as it moves through its life cycle. Something that seems safe now may not be safe tomorrow. The ISO 42001 AI management service treats risk as an ongoing process, not something to be assessed once.

For example, you might have a model that works fine in testing, but six months later it behaves differently because users have changed how they use it. This is where lifecycle risk management becomes critical. With continuous monitoring, businesses can detect these changes early, retrain models responsibly, and keep small problems from becoming big ones.
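One way to picture the continuous monitoring described above is a simple drift check that compares how an input looked at launch with how it looks today. The sketch below uses the Population Stability Index, a common rule-of-thumb metric; ISO 42001 does not prescribe this or any other metric, and the function names and the 0.2 threshold are illustrative assumptions.

```python
import math
from collections import Counter

# Assumption: an illustrative alert threshold, not a value from the standard.
DRIFT_THRESHOLD = 0.2


def population_stability_index(baseline, current, bins=10):
    """Compare two samples of one numeric feature; larger means more drift."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bucket_shares(values):
        # Clamp out-of-range values into the first or last bucket.
        counts = Counter(
            min(max(int((v - lo) / width), 0), bins - 1) for v in values
        )
        # A small floor avoids log(0) when a bucket is empty.
        return [max(counts.get(b, 0) / len(values), 1e-6) for b in range(bins)]

    base, curr = bucket_shares(baseline), bucket_shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, curr))


def needs_review(baseline, current, threshold=DRIFT_THRESHOLD):
    """Flag the feature for human review when drift exceeds the threshold."""
    return population_stability_index(baseline, current) > threshold
```

A check like this would typically run on a schedule for each important model input, with flagged features routed to whoever owns the model for a retraining decision.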

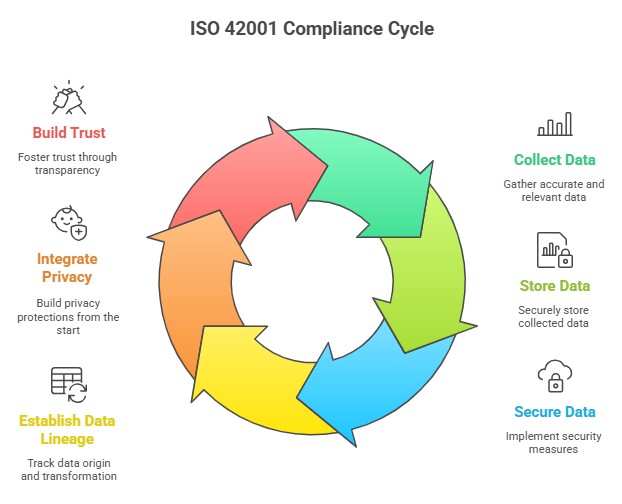

Managing Data, Privacy, and Security

AI is built on data, so if that data is flawed, everything built on it is flawed too. ISO 42001 places a strong emphasis on how companies collect, store, and secure data. It encourages explicit data lineage, which means knowing exactly where your data came from and how it was produced.

You need to think about privacy from the beginning if you want to set up an ISO 42001 AI management service properly. It's not enough to protect client data; you also have to be open about how the company handles it. When privacy protections are built in from the outset, trust and compliance follow naturally.
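To make data lineage concrete, here is a minimal sketch of a lineage record that travels with a dataset. The class and field names are hypothetical, chosen for illustration; the standard does not define a specific record format.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class LineageRecord:
    """Hypothetical record of where a dataset came from and what changed it."""
    dataset_name: str
    source: str            # e.g. the originating system or vendor
    collected_by: str      # the accountable team or person
    content_sha256: str = ""
    transformations: list[str] = field(default_factory=list)

    def add_step(self, description: str) -> None:
        """Append a processing step so the history stays in order."""
        self.transformations.append(description)


def fingerprint(raw_bytes: bytes) -> str:
    """Hash the dataset contents so later copies can be verified."""
    return hashlib.sha256(raw_bytes).hexdigest()


record = LineageRecord(
    dataset_name="loan_applications",
    source="internal CRM export",
    collected_by="data engineering",
    content_sha256=fingerprint(b"example file contents"),
)
record.add_step("removed direct identifiers")
record.add_step("dropped rows with missing income")
```

Storing the hash alongside the processing steps lets an auditor confirm that the dataset used in training is the one the record describes.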

Fairness, Transparency, and Explainability

To be honest, not every AI decision is easy to explain. But today trust depends on candor. The standard expects companies to document what a model does, why it was chosen, and how to challenge it if it doesn't perform as intended.

One of the companies I worked with had a recommendation system that favored particular locations for no evident reason. They ran a fairness review following ISO 42001 guidance and found a non-obvious bias in their training data. Fixing it made outcomes more equitable and customers happier. This framework helps you find your blind spots before they become news.
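A fairness review of this kind often starts with a very simple question: how often does each group receive the favorable outcome? The sketch below is an illustration only, not the method used in the example above; the group labels and the data are made up, and the standard does not mandate a particular fairness metric.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, was_recommended) pairs."""
    shown = defaultdict(int)
    total = defaultdict(int)
    for group, was_recommended in records:
        total[group] += 1
        shown[group] += int(was_recommended)
    return {group: shown[group] / total[group] for group in total}


def disparity_ratio(rates):
    """Lowest rate divided by highest; 1.0 means all groups are treated alike."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Made-up data: recommendations shown to users in two regions.
sample = (
    [("north", True)] * 8 + [("north", False)] * 2
    + [("south", True)] * 4 + [("south", False)] * 6
)
rates = selection_rates(sample)
ratio = disparity_ratio(rates)
```

A low ratio does not prove bias on its own, but it tells reviewers where to look first in the training data.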

Roles, Duties, and Management

When everyone knows who is in charge and who owns what, AI governance works best. The ISO 42001 AI management service specifies that everyone, not just developers, should know what their role is. This includes executives, managers, and data owners.

In practice, this means setting up a governance board or committee that meets regularly. It reviews impact assessments, approves deployments, and makes sure that deadlines don't override ethical considerations. This structure ensures that responsibility and innovation go hand in hand.

Managing Vendors and Third Parties

Few companies build everything themselves these days. They get their APIs, models, and data from vendors. But that makes you dependent on them, which carries risk.

The ISO 42001 AI management service accounts for this. It tells companies to extend governance beyond their own walls. That means reviewing how suppliers operate, making sure that contracts protect you, and making sure that vendors follow the same rules of conduct. After all, your system is only as strong as the weakest link in your supply chain.

Plan for Action

Following the standard can seem daunting, but the most successful companies take it one step at a time. Start small. Choose one system that carries real risk and use it as a pilot. Document your processes, look for gaps, and close them.

When that pilot is complete, look beyond it. For day-to-day use, establish templates for impact assessments, a checklist for data governance, and tools for monitoring. Over time, your ISO 42001 framework will become a part of your company's DNA. It won't be an extra task; it will just be a normal part of how you build and use AI.

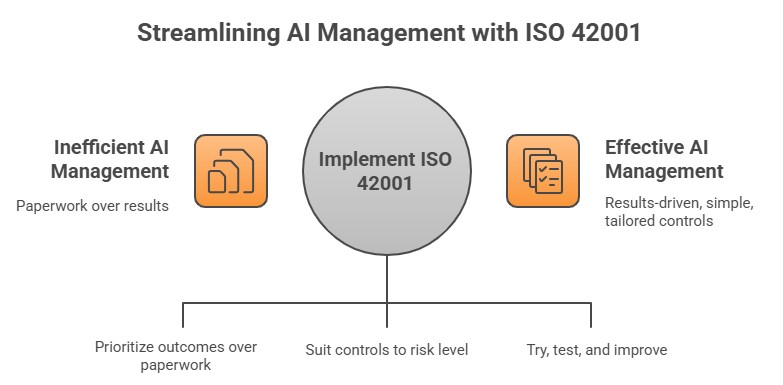

Things You Shouldn't Do

People often make the mistake of treating the standard as a record-keeping exercise. Filling out documents doesn't mean you are in control. You need to apply its principles to real decisions. Another pitfall is over-engineering the system with too many checklists and reviews, which stifles new ideas.

A good ISO 42001 AI management solution puts results ahead of paperwork. You don't want one-size-fits-all controls; you want controls proportionate to the level of risk. Keep it simple, test it, and improve it as you go.
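Risk-proportionate controls can be as lightweight as a lookup table that says which checks each risk tier requires. The tiers and control names below are hypothetical examples, not a list taken from ISO 42001.

```python
# Assumption: three illustrative tiers; a real program would define its own.
CONTROLS_BY_TIER = {
    "low": ["model inventory entry"],
    "medium": ["model inventory entry", "impact assessment"],
    "high": [
        "model inventory entry",
        "impact assessment",
        "governance board sign-off",
        "continuous monitoring",
    ],
}


def missing_controls(risk_tier, completed):
    """Return the required controls that have not been completed yet."""
    required = CONTROLS_BY_TIER[risk_tier]
    return [control for control in required if control not in completed]


# A high-risk system with only the first two controls in place.
gaps = missing_controls(
    "high", {"model inventory entry", "impact assessment"}
)
```

Keeping the table small makes it easy to see at a glance that a low-risk internal tool is not being held to the same process as a high-risk system.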

Tools and Services Support

A growing number of tools and services help organizations adopt ISO 42001. These include risk assessment tools and governance dashboards that simplify reporting. Many consulting companies also offer training programs and readiness assessments for staff.

What matters most is integration. Tools are there to help you do your work, not to take it over. Automation can collect evidence, flag anomalies, and make audits easier, but good governance still relies on human judgment.

How to Get Certified and Audited

You can pursue certification when your system is ready. The steps are straightforward but thorough. Auditors will look for evidence of continual improvement, risk assessments, and leadership involvement. They'll want to see that the policies you created genuinely change how your teams build and use AI.

You don't have to be perfect to get certified under the ISO 42001 AI management service. It's about demonstrating control and consistency. Companies that do it typically find that the discipline pays off in ways that go beyond the audit. It creates shared accountability, which makes every project better.

Benefits for Your Business and the Future

ISO 42001 is more than a compliance exercise; it delivers real benefits. It shows investors, customers, and partners that you take your responsibilities seriously. It smooths procurement, especially when large clients want evidence of good governance. And it keeps people focused by making roles and performance goals explicit.

Trust is the most valuable asset in a market where reputations can change in a day. Businesses can turn responsibility into trust and trust into long-term value by using the ISO 42001 AI management service.

Final Thoughts

AI is affecting more than just businesses; it's also influencing how people trust each other. People want to believe that the technology that shapes their lives is fair, safe, and understandable. ISO 42001 shows you how to build that confidence step by step.

If your business is still deciding whether to adopt the ISO 42001 AI management solution, start small, but start now. The earlier you build responsible governance into your process, the easier it is to scale. You will come to see that compliance is not the end goal; it is the foundation for lasting innovation.

Hoplon InfoSec helps organizations implement and audit ISO 42001 through its ISO Certification and AI Management System service. Its experts design risk models, AI lifecycle plans, and governance frameworks to ensure responsible AI use and a strong competitive edge.

