Microsoft Stops Vanilla Tempest Fake Teams Attack

Hoplon InfoSec

16 Oct, 2025

You want to join a meeting on Microsoft Teams, but you land on a page that looks exactly like the official Teams download page. You click what you think is the official app, install it, and hand a hacker a persistent key to your computer. Microsoft's security teams recently stopped that exact nightmare, and the fix wasn't a big code change or a new patch.

It came down to paperwork and trust: revoking the certificates behind the attackers' digital signatures. Welcome to a world where certificates matter as much as code.

The campaign that seemed like a normal download

Attackers have long known that people trust what looks familiar. A polished installer, a recognizable logo, and a slick download page all lower people's guard. The group tracked as Vanilla Tempest exploited this by building fake Microsoft Teams installers that looked completely genuine.

They hosted these fake installers on sites engineered to rank well in search results and promoted them through malvertising that steered victims into the trap. The malware inside, known as the Oyster backdoor, gave attackers persistent access once a user installed it. Victims were caught because they believed what they saw.

The method isn't new, but it works. SEO poisoning and targeted ads pushed the malicious download pages up the search results, so many people found them before the official Microsoft download URL. Once the backdoor was in place, attackers could move laterally, harvest credentials, and in some cases stage ransomware attacks. The campaign was especially worrying for defenders because it combined social engineering, search manipulation, and signed installers.

Why code signing made the attack worse

Code signing is meant to prove who published a file and that it hasn't been altered since. Operating systems and security tools treat a file with a valid signature differently from an unsigned one. That is why attackers work hard to obtain real certificates or compromise signing systems: signed malware triggers fewer warnings and gets less scrutiny from both users and some security products.

In the Vanilla Tempest wave, the attackers signed their fake Teams installers with legitimately issued certificates. Those signatures made the installers less likely to be flagged and convinced victims they were installing the real client. For defenders, a signed malicious binary is bad news because it shows that trust in the software distribution system is being abused.

Microsoft's answer: revoke, disrupt, and protect

Microsoft didn't rely on detection rules alone; it went after the certificates, the source of the misplaced trust. The company identified hundreds of certificates used in the campaign and revoked them. That stripped the signed malicious files of their trusted status in Windows and across the Microsoft security ecosystem. The attackers' chain of trust quickly fell apart, making it harder for the fake installers to spread and for victims to be fooled. Microsoft also updated Microsoft Defender detections and telemetry to catch the Oyster backdoor and related payloads.

Revocation isn't glamorous. It is a behind-the-scenes administrative process that involves gathering evidence, working with certificate authorities, and updating blocklists. Done right, though, it removes one of the attacker's key advantages: a genuine signature. Users feel the effect right away. Files that used to carry an official seal now trigger security warnings or fail validation entirely.
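
To see why revocation works without touching the malware itself, consider a deliberately simplified trust decision. The Python sketch below assumes a hypothetical `SignedFile` record and a set of revoked certificate thumbprints; the names, the short thumbprint value, and the file name are illustrative only, and real Windows validation (Authenticode signature checks plus certificate chain and revocation checks) is far more involved.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SignedFile:
    """Hypothetical summary of a file's signature, as an endpoint agent might record it."""
    name: str
    signer_thumbprint: Optional[str]  # None means the file is unsigned
    chain_valid: bool                 # True if the certificate chain builds to a trusted root


def is_trusted(f: SignedFile, revoked: set[str]) -> bool:
    """Trust a file only if it is signed, its chain is valid, and its signer is not revoked."""
    if f.signer_thumbprint is None or not f.chain_valid:
        return False
    # Revocation changes the answer without any change to the file itself.
    return f.signer_thumbprint not in revoked


# Illustrative values only; real SHA-1 thumbprints are 40 hex characters.
installer = SignedFile("fake_teams_setup.exe", "AB12CD34", chain_valid=True)
print(is_trusted(installer, revoked=set()))         # True
print(is_trusted(installer, revoked={"AB12CD34"}))  # False
```

The file's bytes and signature are identical in both calls; only the revocation list differs, which is exactly the lever Microsoft pulled.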

What defenders learned and what organizations should do

This event offers several practical lessons. First, don't rely on a single trust signal: a signed installer is not guaranteed to be safe. Second, real user behavior matters. People will click on search results and ads, so make official download channels easy to find and teach employees to check URLs and use official vendor portals.

On the technical side, organizations should watch endpoints and telemetry for unexpected signed binaries. Use application allowlisting where possible, and configure endpoint detection to flag installations that originate from unfamiliar domains or arrive through unusual installation paths.
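
As a rough illustration of flagging installers from unexpected domains, the following Python sketch checks a download URL's host against an allowlist. The `OFFICIAL_DOMAINS` set, the `is_official_source` helper, and the example URLs are assumptions for illustration; a production control would also need to follow redirects and handle internationalized (punycode) lookalike domains.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: replace with the vendor domains your organization actually approves.
OFFICIAL_DOMAINS = frozenset({"microsoft.com"})


def is_official_source(url: str, allowed: frozenset[str] = OFFICIAL_DOMAINS) -> bool:
    """True only if the URL's real host is an allowed domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").rstrip(".")  # .hostname is already lowercased
    return any(host == domain or host.endswith("." + domain) for domain in allowed)


for url in (
    "https://www.microsoft.com/en-us/microsoft-teams/download-app",
    "https://teams-microsoft.com/setup.exe",                # lookalike domain
    "https://microsoft.com@evil.example/setup.exe",          # real host is evil.example
    "https://microsoft.com.download-now.example/setup.exe",  # allowed name used as a prefix
):
    print("ok  " if is_official_source(url) else "FLAG", url)
```

Matching on the parsed hostname, rather than searching the URL string for "microsoft.com", is the key design choice: it rejects lookalikes such as `teams-microsoft.com` and tricks like `microsoft.com@evil.example`, where the actual host is `evil.example`.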

Use code integrity tools to check signatures and set up alerts when executables in your environment are signed with new or rarely seen certificates. These steps help find abuse more quickly.
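
One simple way to surface new or rarely seen certificates is to compare signer thumbprints from endpoint telemetry against a reviewed baseline and rank the unknown ones by how many machines they appear on. The Python sketch below assumes a hypothetical event format of `(host, signer_thumbprint)` pairs; the `unreviewed_signers` helper, host names, and thumbprint labels are placeholders.

```python
from collections import defaultdict
from typing import Iterable


def unreviewed_signers(
    events: Iterable[tuple[str, str]], baseline: set[str]
) -> list[tuple[str, int]]:
    """Return (thumbprint, host_count) for signers not in the reviewed baseline, rarest first.

    `events` is assumed to be (host, signer_thumbprint) pairs collected from endpoint telemetry.
    """
    hosts_by_signer: dict[str, set[str]] = defaultdict(set)
    for host, thumbprint in events:
        hosts_by_signer[thumbprint].add(host)

    unknown = [(t, len(hosts)) for t, hosts in hosts_by_signer.items() if t not in baseline]
    # Rarest first; ties are broken by thumbprint so the output order is stable.
    return sorted(unknown, key=lambda item: (item[1], item[0]))


telemetry = [
    ("pc-01", "VENDOR_A"), ("pc-02", "VENDOR_A"), ("pc-03", "VENDOR_A"),
    ("pc-02", "UNSEEN_1"),
    ("pc-04", "UNSEEN_2"), ("pc-05", "UNSEEN_2"),
]
print(unreviewed_signers(telemetry, baseline={"VENDOR_A"}))
# [('UNSEEN_1', 1), ('UNSEEN_2', 2)]
```

Sorting rarest-first reflects the idea in the text: a certificate that has appeared on only one or two endpoints is a better lead than one already deployed fleet-wide, though anything outside the baseline still deserves review.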

The human side: how one click can cause a lot of problems

I once sat down with an administrator at a hospital that had been hit by a similar social engineering chain. She described how a staff member downloaded what they thought was a patch; within days, systems slowed down, backups started failing, and everyone was scrambling to contain the damage. Her story shows how one small mistake, often made during routine work, can lead to mission-critical failures.

The Vanilla Tempest campaign followed the same pattern: a search result that looked trustworthy, a user trying to do their job, and an installer that looked legitimate. When attackers are this convincing and blend in this well, they don't need zero-days or exotic exploits. That's why trust mechanisms like certificates must be paired with user training and clear operational rules.

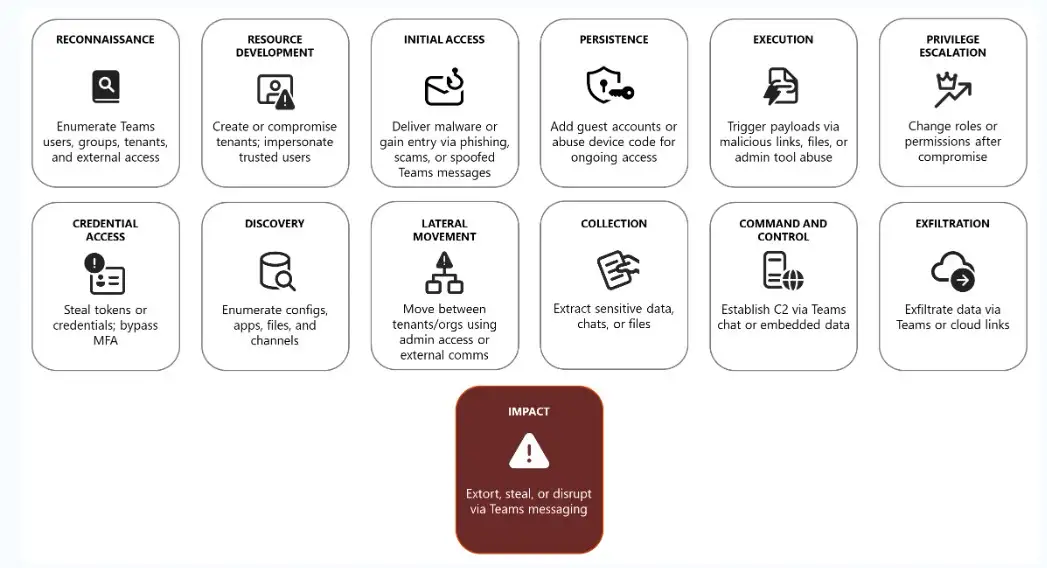

Attack Chain

Source: Microsoft

Attackers follow a proven sequence of steps to compromise organizations through Microsoft Teams. First, they quietly research who works at a company and who belongs to which team. Next, they create fake or impersonated accounts so they appear legitimate. Then they send phishing links, fake Teams files, or messages carrying malware.

Once inside, they make sure they can't easily be kicked out by adding guest accounts, registering devices, or abusing device codes to reconnect. They use infected files or administrative tools to run malicious code and try to escalate privileges for more control.

With deeper access, they steal credentials, explore internal apps and file shares, and move laterally between machines. They often use Teams itself for command and control, to pass along stolen data, and finally to exfiltrate valuable data through cloud links. The result can be stolen sensitive information, extortion threats, and disrupted collaboration workflows.

Will attackers adapt? What's next?

When defenders close one door, attackers look for another. Revoking certificates raises the attackers' costs, but it doesn't eliminate the threat. They may switch to new certificates, abuse stolen or compromised code-signing processes, or lean more heavily on social engineering and supply chain manipulation.

Defenders should expect an ongoing game of cat and mouse. What's different now is that the security community has demonstrated a workable playbook: identify the abused trust signals and remove them. At the same time, platform vendors are reminded of their role in policing signing authorities, and security teams must keep watching the weak spots that remain.

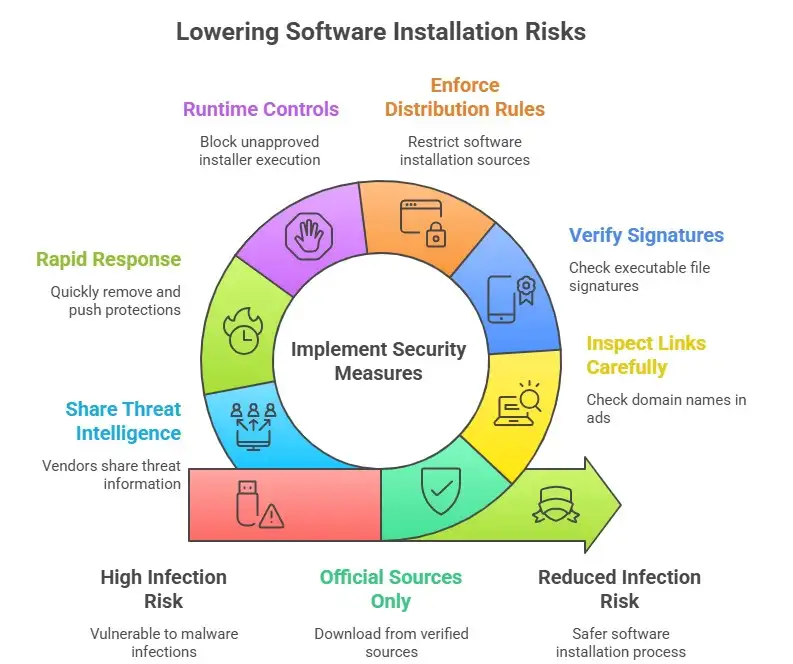

How to tell a fake installer from a real one and stay safe

A few simple habits can lower your risk significantly.

First, always get software from the vendor's official website or a verified app store. Rather than searching for download pages, use bookmarked or directly typed addresses.

Second, hover over links, especially in ads, and look closely at the domain names before clicking.

Third, if you're unsure, check the signature: right-click the executable, select Properties, and look at the signer on the Digital Signatures tab. Don't run the file if the signer or the certificate chain looks unusual.

For businesses, enforce software distribution policies. Allow users to install software only from trusted sources, and add runtime controls that block unapproved installers from running. These simple but effective steps could have prevented many of the infections in the Vanilla Tempest campaign.
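
Hash-based allowlisting is one way to enforce "install only from trusted sources" at runtime, and it has the useful property of not depending on signatures at all. The Python sketch below is illustrative only: the `sha256_of` and `may_run` helpers and the temporary demo files are hypothetical, and Windows environments would typically rely on built-in controls such as Windows Defender Application Control (WDAC) or AppLocker instead.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large installers don't have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def may_run(path: Path, approved: set[str]) -> bool:
    """Default-deny check: only installers whose exact SHA-256 digest was approved may run."""
    return sha256_of(path) in approved


# Demo with throwaway files standing in for an approved and an unknown installer.
with tempfile.TemporaryDirectory() as tmp:
    approved_installer = Path(tmp) / "approved_setup.exe"
    approved_installer.write_bytes(b"installer bytes reviewed by IT")
    unknown_installer = Path(tmp) / "downloaded_setup.exe"
    unknown_installer.write_bytes(b"installer bytes from an unknown site")

    approved = {sha256_of(approved_installer)}
    print(may_run(approved_installer, approved))  # True
    print(may_run(unknown_installer, approved))   # False
```

The trade-off is maintenance: every legitimate update changes the hash, so the approved list has to be refreshed whenever software is updated.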

The big picture: trust, ecosystem responsibility, and quick action

This event is also a reminder that keeping users safe is a shared responsibility. Certificate authorities, platform vendors, search engines, and advertisers all have a part to play.

Microsoft's revocations show that platform owners can and should act when trust mechanisms are weaponized. Certificate authorities, for their part, need to get better at detecting fraud so they don't issue certificates to impostors in the first place.

Speed matters. The longer it takes a vendor to detect the abuse, revoke certificates, and push protections, the more time attackers have to profit. The industry needs clear playbooks for these situations and better channels for vendors and operators to share threat intelligence. That collective approach reduces exposure and helps stop campaigns before they grow too large.

A short list of things teams need to do today

• Enforce application allowlisting on critical systems.

• Monitor for new or rarely seen code-signing certificates.

• Tell users clearly where official downloads are available.

• Teach employees to check URLs and never download software from ads.

• Keep endpoint detection current for installer activity from non-standard domains.

These steps are practical, require no special tools, and make it harder for attackers to use the most common techniques seen in campaigns like the one run by the Vanilla Tempest group.

Final thought

In modern cybersecurity, the lines of battle are sometimes less about better exploits and more about trust. Attackers want to use the things we rely on every day, like signed code, search results, and names we trust, against us. The recent disruption of this campaign shows that when defenders act quickly to get rid of false trust signals, the balance of power changes. That's great news. It also reminds us that trust is something we should protect, keep an eye on, and sometimes take away.

Remember this: a certificate does not mean you are safe. Check the sources, lock down the installation paths, and be very careful with signed files. That's how to stay one step ahead of a threat that looks like the software you use every day.

The Vanilla Tempest attack is a reminder that even trusted software can be turned against users. Hoplon Infosec’s Endpoint Security service helps prevent that by detecting malicious installers, blocking fake updates, and monitoring abnormal activity across devices in real time. With strong endpoint protection, organizations can stop threats like these before they spread.

