EY Data Leak: 4TB SQL Server Backup Exposed on Azure

Hoplon InfoSec

30 Oct, 2025

It’s not every day you come across a corporate data misstep so big it shakes faith in the cloud. Yet that is exactly what happened when the Ernst & Young (EY) data leak emerged: a 4‑terabyte SQL Server backup file sat exposed on Microsoft Azure, accessible to anyone who could hit the URL. For a firm that audits giant corporations, advises on deals, and handles critical financial data, this was akin to leaving the keys and the vault door open at the same time.

In this article, I’ll walk you through what the EY data leak means, how it happened, what it reveals about cloud risk, and what lessons every organization should learn. This story is more than a cautionary tale; it’s a chance to reflect on how even top-tier outfits can trip over simple misconfigurations and how you can avoid the same fate.

What Happened: The Exposure Unveiled

In late October 2025, a cybersecurity research team from Neo Security discovered an enormous file on Azure: a backup (.BAK) of an SQL Server database, reportedly 4 terabytes in size and unencrypted, publicly accessible on the internet.

The file name and the “magic bytes” read from the first 1,000 bytes of the file confirmed it was an SQL Server backup. That is dangerous, because such backups can hold far more than tables and indexes: credentials, session tokens, API keys, and service account passwords stored in the database come along with everything else. One researcher said the discovery was “like finding the master blueprint and the physical keys to a vault.”
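To make the "magic bytes" idea concrete, here is a minimal Python sketch of how a file type can be guessed from its first bytes. The two signatures in the table are assumptions based on commonly reported SQL Server backup headers, not details confirmed by Neo Security, and the function names are hypothetical.

```python
from typing import Optional

# Leading signatures often reported for SQL Server backup files.
# Both entries are illustrative assumptions; confirm them against backups you control.
KNOWN_SIGNATURES = {
    b"TAPE": "SQL Server backup (Microsoft Tape Format header)",
    b"MSSQLBAK": "SQL Server compressed backup",
}


def identify_backup(header: bytes) -> Optional[str]:
    """Return a description if the header starts with a known signature, else None."""
    for magic, description in KNOWN_SIGNATURES.items():
        if header.startswith(magic):
            return description
    return None


def read_header(path: str, size: int = 1000) -> bytes:
    """Read only the first `size` bytes, so a multi-terabyte file is never loaded whole."""
    with open(path, "rb") as handle:
        return handle.read(size)
```

The point of the sketch is that identification needs only a tiny read. Nobody has to download 4 TB to learn what a file is.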

Because the backup sat exposed, it was very likely picked up by the automated scanners and crawlers that continuously sniff out unsecured buckets and containers. In this context, the EY data leak was not a typical breach via ransomware or malicious intrusion; it was a misconfiguration exposure.

Why This Matters: The Stakes Involved

When you hear “EY data leak,” your mind might wander to financial statements, audit files, or client data. That’s not far off. EY, as one of the “Big Four” accounting and advisory firms, handles sensitive finance, mergers & acquisitions, audit records, and strategic corporate secrets. The exposure of a 4 TB SQL Server backup means some or many of those secrets could have been inside.

In practice, this means:

· Credentials for corporate systems, cloud services, and APIs could be in that backup.

· Client data belonging to major corporations or governments could be exposed.

· Intellectual property, audit methodologies, and internal tooling could be readable.

That level of exposure undermines trust and raises questions about how mature cloud security practices are even at large firms.

How It Happened: Misconfiguration + Scale = Risk

The investigation by Neo Security lays out how this kind of exposure happened despite all the controls you would expect at EY. Let’s unpack:

1. Cloud misconfiguration

The file apparently sat in an Azure Blob Storage container or a similar publicly reachable location, configured so that it answered unauthenticated requests. The researcher’s initial hit was a HEAD request:

HEAD https://…/file.bak

Content-Length: 4000000000000 (about 4 TB)

That massive size raised alarms.

Misconfigurations like “storage account allows public read” or an overly permissive, long‑lived shared access signature (SAS) are classic root causes.
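For readers who have not used HEAD requests, the following Python sketch shows the general idea: the server returns headers only, so the size of an object can be read from Content-Length without downloading it. This is an illustration using the standard library, not the researchers' tooling, and the helper names are hypothetical.

```python
import urllib.request
from typing import Mapping, Optional


def parse_content_length(headers: Mapping[str, str]) -> Optional[int]:
    """Return Content-Length as an integer, or None if missing or malformed."""
    raw = headers.get("Content-Length")
    if raw is None or not raw.strip().isdigit():
        return None
    return int(raw.strip())


def human_size(num_bytes: int) -> str:
    """Format a byte count using decimal units (1 TB = 10**12 bytes)."""
    units = ["B", "KB", "MB", "GB", "TB", "PB"]
    size = float(num_bytes)
    for unit in units:
        if size < 1000 or unit == units[-1]:
            return f"{size:.1f} {unit}"
        size /= 1000
    return f"{size:.1f} {units[-1]}"  # not reached; keeps the return type explicit


def probe(url: str, timeout: float = 10.0) -> str:
    """Send a HEAD request (no body is transferred) and summarize the response.

    urllib raises HTTPError for 4xx/5xx, so a private object fails here
    instead of returning a size.
    """
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        length = parse_content_length(response.headers)
        size = human_size(length) if length is not None else "unknown size"
        return f"{response.status} {size}"
```

Run only against storage you own, `probe()` on a publicly readable 4 TB object would come back as `200 4.0 TB`, which is exactly the kind of answer that should never be available to an anonymous caller.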

2. Unencrypted data

The BAK file was unencrypted. That means anyone able to download it could restore it or extract secrets from it. Encrypting the backup itself, with the key held separately from the file, might have prevented or mitigated this. Because that protection was missing, the EY data leak was far more severe than it needed to be.

3. Lack of asset visibility

If a 4 TB backup can sit publicly for hours or days without detection, it suggests visibility gaps. The firm either did not know that such a large asset was exposed or did not act fast enough once it did. In Neo Security’s words: “You cannot defend what you do not know you own.”

4. Scale adds complexity

Backing up large databases to the cloud is normal. But as scale grows, so do the risks. With 4 TB of data, the exposure window and the blast radius both expand. The EY data leak illustrates how even well‑resourced teams can slip.

Timeline & Discovery

· The EY data leak was first publicly reported on 29 October 2025.

· Researchers from Neo Security were doing “attack surface mapping” rather than loud scans. They stumbled on the file through a passive observation of a HEAD request returning a huge file.

· The exact duration of exposure is unknown; it might have been minutes, hours, or days. That unknown window is itself a major risk.

· After discovery, responsible disclosure presumably triggered remediation efforts, though public details of time‑to‑remediate are scant.

What the EY Data Leak Shows Us About Cloud Risk

This incident, while dramatic, is not isolated. But its scale and the brand involved make it especially instructive.

The cloud is not automatically safe.

Moving to Azure, AWS, or Google Cloud does not mean “set it and forget it.” You still need configuration hygiene, access controls, monitoring, asset inventory, and proactive audits. EY was using Azure and yet exposed a 4 TB backup publicly.

Automated scanning by attackers is real.

The researchers pointed out that automated scanners constantly sweep the internet, find open buckets, exposed backups, and misconfigured endpoints, and download them if possible. The EY data leak underscores that exposure often means compromise.

Visibility and governance matter.

Large organizations often have legacy systems, multiple teams, rapid deployments, and Terraform/CI‑CD pipelines. This creates blind spots. With something as large as a 4 TB backup, you need auto‑discovery of what is exposed and fast remediation pipelines.

Backup data needs protection, too.

Often, we focus on production systems, live data, and network intrusion. We neglect backups. But backups are high value: they contain full data sets, credentials, and internal components. The EY data leak reminds us that a backup is just as sensitive as the live system, and in some cases, more.

What Could Have Been Inside That 4 TB Backup

While EY has not publicly detailed exactly what the backup included, research reports indicate likely contents:

· API keys, session tokens, and service‑account passwords that applications rely on.

· User credentials and cached authentication tokens.

· Possibly audit logs, internal tooling data, and client engagement records.

· Very sensitive, possibly regulated data for clients of EY.

If one thinks of a database backup as a digital “time capsule” of everything the application held, then the sheer volume (4 TB) hints at many years of data, possibly from many systems rolled into one.

Immediate Impact for EY & Stakeholders

For EY, the reputational hit is significant. A data advisory/audit firm is expected to have rock‑solid controls. The fact that a 4 TB backup was publicly exposed raises questions from clients, regulators, and partners.

For clients and third parties whose data may be in that backup, the concern is: was their sensitive information at risk? Was it accessed? Who downloaded it? What downstream impact could occur from credential reuse attacks, insider threats, or supply‑chain risk?

For the wider industry, the EY data leak is a loud wake‑up call: if EY can do this, any organization can.

Lessons Learned & Best Practices

Now let’s shift from “what happened” to “what you should do” if you’re responsible for cloud infrastructure, backups, and data protection.

Asset Discovery and Attack Surface Management

You need continuous discovery of what is visible externally. Tools that show “what do attackers see” help. In the case of the EY data leak, the exposure was found during passive mapping. Don’t rely only on internal audits; assume external scanners are looking at your assets.

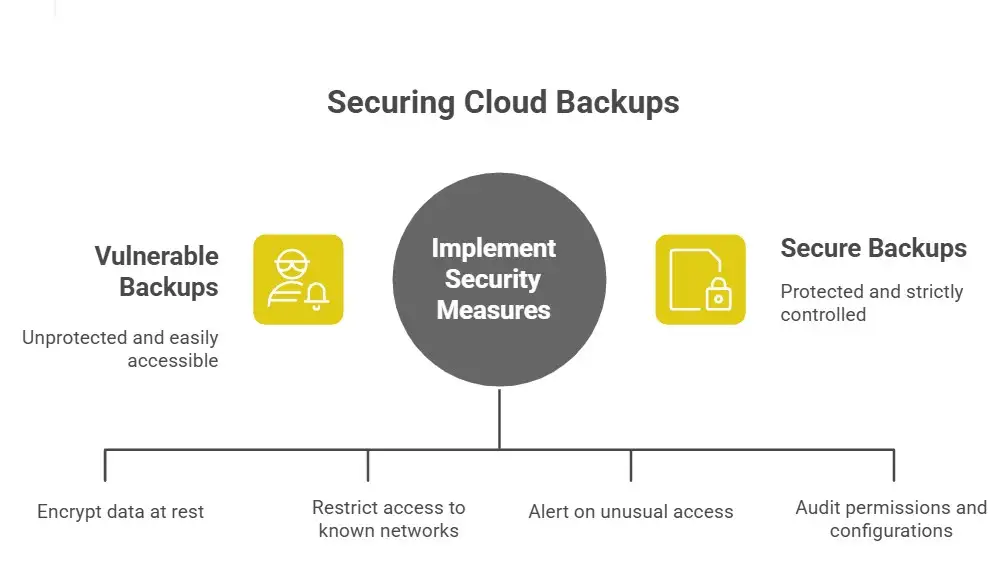

Backup Encryption, Access Controls, and Safe Storage

Backups should be encrypted at rest and in transit. Access to storage buckets or containers should be restricted to known networks or identities. Make sure SAS tokens, public URLs, and container permissions are locked down. A misconfigured container is a threat.
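As one way to check that SAS links are locked down, the sketch below inspects the query parameters of a blob URL and flags loose settings. It assumes the standard SAS parameter names (`se` for expiry, `sp` for permissions, `spr` for protocol, `sip` for IP range, `sig` for the signature). The 24‑hour limit, the example host name, and the function name are hypothetical choices for illustration.

```python
from datetime import datetime, timedelta, timezone
from typing import List
from urllib.parse import parse_qs, urlsplit

MAX_LIFETIME = timedelta(hours=24)   # hypothetical policy limit
WRITE_PERMISSIONS = set("acwd")      # add, create, write, delete


def audit_sas_url(url: str, now: datetime) -> List[str]:
    """Return findings for a blob URL that may carry a SAS token.

    `now` must be timezone-aware (UTC) so it can be compared with the expiry.
    """
    params = {key: values[0] for key, values in parse_qs(urlsplit(url).query).items()}
    if "sig" not in params:
        return ["no SAS signature: access depends entirely on container settings"]

    findings = []
    expiry = params.get("se")
    if expiry is None:
        findings.append("no expiry in URL: check the stored access policy")
    else:
        expires_at = datetime.fromisoformat(expiry.replace("Z", "+00:00"))
        if expires_at.tzinfo is None:
            expires_at = expires_at.replace(tzinfo=timezone.utc)
        if expires_at - now > MAX_LIFETIME:
            findings.append("expiry exceeds the allowed lifetime")
    if WRITE_PERMISSIONS & set(params.get("sp", "")):
        findings.append("grants more than read access")
    if params.get("spr", "https,http") != "https":
        findings.append("does not require HTTPS")
    if "sip" not in params:
        findings.append("no IP restriction")
    return findings


if __name__ == "__main__":
    # example.blob.core.windows.net is a placeholder host, not a real account.
    sample = ("https://example.blob.core.windows.net/backups/file.bak"
              "?sp=rwd&se=2026-10-30T00:00:00Z&sig=abc")
    for finding in audit_sas_url(sample, datetime(2025, 10, 30, tzinfo=timezone.utc)):
        print(finding)
```

A check like this only reads the URL. It cannot tell whether the container itself allows anonymous access, so it complements a review of container permissions and does not replace one.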

Least Privilege and Segmentation

Ensure that backups are stored in dedicated accounts or containers separate from the main environment, with strict permissions. If an identity is compromised, it should not allow full access to massive backup stores.

Monitor Unusual Access Patterns

Downloading a 4 TB file is unusual. Alert on large data egress, odd HEAD requests, and very large blobs that are publicly reachable. Set thresholds and alarms so that when something too large is accessible, you know quickly.
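The thresholds described above can be sketched in a few lines of Python. The record fields, the 50 GB limit, and the alert wording are all hypothetical; real storage access logs have their own schema, so treat this as the shape of the logic, not a drop‑in monitor.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class AccessRecord:
    client: str          # source IP or identity
    method: str          # "HEAD", "GET", ...
    blob: str            # object path
    bytes_sent: int      # response body size
    authenticated: bool  # False for anonymous requests


EGRESS_LIMIT = 50 * 10**9  # hypothetical limit: 50 GB per client per window


def find_alerts(records: Iterable[AccessRecord], egress_limit: int = EGRESS_LIMIT) -> List[str]:
    """Flag anonymous reads and clients whose total download exceeds the limit."""
    alerts = []
    totals = defaultdict(int)
    for record in records:
        totals[record.client] += record.bytes_sent
        if not record.authenticated and record.method in ("HEAD", "GET"):
            alerts.append(f"anonymous {record.method} on {record.blob} from {record.client}")
    for client, total in sorted(totals.items()):
        if total > egress_limit:
            alerts.append(f"{client} exceeded egress limit with {total} bytes")
    return alerts
```

Treating any anonymous request against a backup container as an alert is deliberate here: for a backup store, no legitimate caller should be unauthenticated.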

Regular Cloud‑Configuration Reviews

Cloud environments evolve fast. Remove unused subsystems, audit storage account permissions, and check that public access is disabled unless explicitly required. Pair these reviews with encryption, retention policies, and governance frameworks. The EY data leak shows that even big firms slip up.

Treat Backups as Sensitive Data Too

We often treat backups as “just a copy” and store them for disaster recovery. But treat them as active attack surfaces. They contain full data sets and are often ignored by protection tools. The EY data leak exposes that oversight.

My Take: Why This Feels Worse Than Many Breaches

Here’s why the EY data leak resonated with me: It wasn’t a clever hacker exploit or advanced persistent threat. It was a configuration gaffe. A 4 TB file sitting in the open. For me, that says the ecosystem is fractured: we have advanced cloud infrastructure, but the tools, processes, and accountability are still catching up.

It’s like building a super‑highway and leaving an open gate in the fencing. Anyone can drive in. The technology is there, the principle is sound, but the final guardrail is missing. And when the guardrail fails, even giants like EY can stumble.

What to Watch Going Forward

For organizations reading this, pay attention to these signals:

· If you’re migrating large datasets or backups to the cloud (Azure, AWS, GCP), ask: what are the access controls on the storage? Are backups encrypted, and is access granted only through scoped, short‑lived tokens?

· Monitor whether any large files or containers are publicly addressable. Automated queries for “HEAD request returns 200 and blob size > x bytes” will help catch exposures.

· Recognize that regulatory bodies will examine how you handle cloud backups, not just live data. Don’t treat backups as second‑class assets.

· Assume external automation is scanning your posture. That means exposure windows must be minimized; even minutes count.

· Use “backup” as a trigger for review: when you take a backup, run a checklist on access, encryption, retention, location, and people permissions.
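The backup checklist in the last point (access, encryption, retention, location, and people permissions) can be expressed as a small review function. Everything below is a sketch under stated assumptions: the record fields, the allowed regions, and the numeric limits are hypothetical placeholders to be replaced with your own policy.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BackupRecord:
    name: str
    public_access: bool
    encrypted: bool
    retention_days: int
    region: str
    readers: List[str] = field(default_factory=list)


# Hypothetical policy values; substitute your organization's own.
ALLOWED_REGIONS = {"westeurope", "northeurope"}
MAX_RETENTION_DAYS = 90
MAX_READERS = 3


def review_backup(backup: BackupRecord) -> List[str]:
    """Return the checklist items this backup fails; an empty list means it passes."""
    failures = []
    if backup.public_access:
        failures.append("access: publicly reachable")
    if not backup.encrypted:
        failures.append("encryption: backup is not encrypted")
    if backup.retention_days > MAX_RETENTION_DAYS:
        failures.append("retention: kept longer than policy allows")
    if backup.region not in ALLOWED_REGIONS:
        failures.append("location: stored outside approved regions")
    if len(backup.readers) > MAX_READERS:
        failures.append("people: too many identities can read it")
    return failures
```

Running a function like this at the moment a backup is written, and again on a schedule, turns the checklist from a document into a gate.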

Takeaway and Action

The EY data leak is a stark reminder that even the most capable firms can fall victim to basic configuration errors in the cloud. The 4 TB SQL Server backup sitting publicly exposed on Azure isn’t just a statistic; it’s a wake-up call for all of us. If EY can slip, any organization can.

Frequently Asked Questions (FAQ) About the EY Data Leak

1. What is the EY data leak?

The EY data leak refers to a 4-terabyte SQL Server backup file from Ernst & Young that was publicly accessible on Microsoft Azure. This exposure allowed anyone with the link or access to the cloud storage to potentially view sensitive data. It was not a hack but a misconfiguration issue, making it a severe risk for both EY and its clients.

2. How was the EY data leak discovered?

Cybersecurity researchers from Neo Security discovered the exposed backup during passive scanning. The file was identified as an SQL Server backup due to its structure and content. Its massive size and public accessibility immediately raised alarms, highlighting a misconfiguration on EY’s cloud storage.

3. What type of data could have been exposed?

The backup likely contained highly sensitive information, such as:

· Credentials and session tokens

· API keys and service account passwords

· Audit logs and client engagement records

· Internal corporate tools and methodologies

While EY has not disclosed the exact contents, the sheer size of the backup suggests a broad range of sensitive material.

4. Was the EY data leak caused by a hack?

No, the EY data leak was caused by a misconfiguration in cloud storage, not a malicious intrusion. The backup file was publicly accessible due to insufficient access restrictions, and its lack of encryption left the contents readable by anyone who stumbled upon it.

5. How long was the data exposed?

The exact duration of exposure is unknown. The backup could have been publicly accessible for minutes, hours, or even days. This uncertainty increases the risk of the data being accessed or downloaded by unauthorized individuals.
