Google Vertex AI Vulnerability: IAM Risk & Privilege Escalation

Hoplon InfoSec

19 Jan, 2026

Can a low-privileged Google Cloud user really gain access to powerful service agent roles inside Vertex AI, and if so, why does it matter today?

As of January 18, 2026, security researchers are discussing a reported Google Vertex AI vulnerability that may allow certain low-privileged users to escalate permissions through Identity and Access Management (IAM) behavior. While no official CVE has been confirmed and Google has not labeled this as an active exploit, the risk discussion itself is important. Vertex AI is used by thousands of organizations to train, deploy, and manage machine learning models that touch sensitive data, production pipelines, and business-critical systems. Even a small gap in access control can ripple into something much larger.

According to reporting from CybersecurityNews, the concern centers on how Vertex AI service agent roles are assigned and inherited in certain cloud environments. This is not about a dramatic zero-day attack. It is about something quieter and often more dangerous: configuration-driven privilege expansion.

Why this story feels familiar to cloud security teams

Cloud security incidents rarely start with malware anymore. They start with a role. A permission. A checkbox that seemed harmless at the time.

I have seen teams lock down virtual machines tightly, only to leave service accounts wide open because they felt abstract. Vertex AI lives right in that gray zone. It is an AI platform, but it is also deeply tied to storage, networking, logging, and deployment services. That blend increases the cloud IAM attack surface in subtle ways.

The reported Google Vertex AI vulnerability fits this pattern. It is less about breaking in and more about being allowed to walk further than expected once inside.

Understanding Vertex AI service agent roles in simple terms

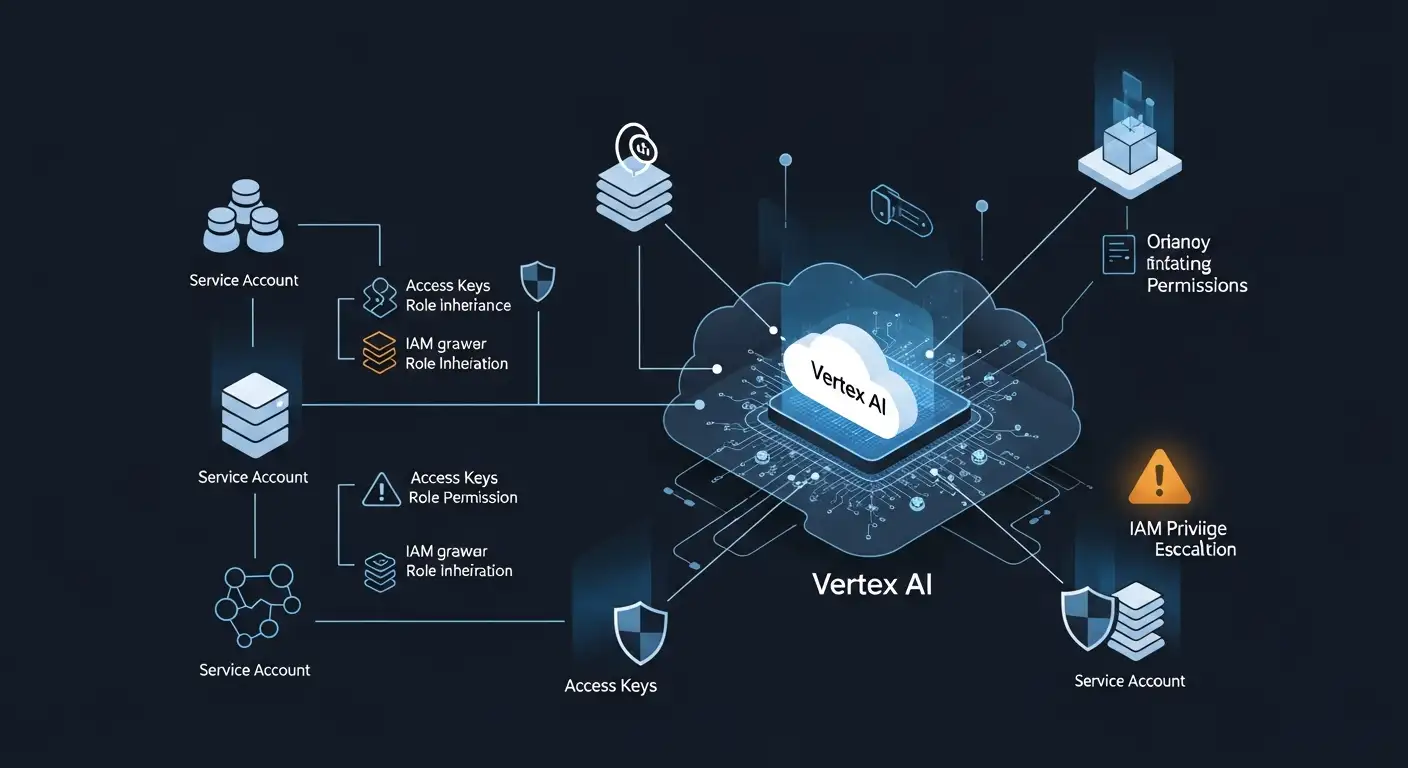

Vertex AI uses service agents to perform actions on behalf of users and projects. These agents are special Google-managed service accounts. They need permissions to spin up training jobs, read datasets, deploy models, and interact with other Google Cloud services.

In a perfect world, each service agent would have the least privilege needed. In reality, permissions often expand over time. Teams add roles to solve one deployment issue, then forget to roll them back. Over months, the role grows.

The issue described in this Google Vertex AI vulnerability discussion is that certain low-privileged users may be able to influence or trigger the creation or binding of these service agent roles in ways that exceed their intended scope. This does not automatically mean data theft. It means the door opens wider than expected.

Where IAM misconfiguration becomes a real risk

Google Cloud IAM is powerful, but it is also complex. Allow policies can be set at the organization, folder, or project level, and every resource inherits the policies of its ancestors. A role granted high up can quietly cascade downward.
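To make the cascade concrete, here is a minimal sketch of additive inheritance. The hierarchy, principals, and bindings are all hypothetical, and the model covers only the union of allow policies; it ignores deny policies and conditions.

```python
from collections import defaultdict

# Hypothetical resource hierarchy: child -> parent (None marks the root).
PARENTS = {
    "organizations/example-org": None,
    "folders/ml-platform": "organizations/example-org",
    "projects/vertex-sandbox": "folders/ml-platform",
}

# Hypothetical role bindings set directly on each resource.
DIRECT_BINDINGS = {
    "organizations/example-org": {
        "group:data-eng@example.com": {"roles/storage.objectViewer"},
    },
    "folders/ml-platform": {
        "user:junior@example.com": {"roles/aiplatform.user"},
    },
    "projects/vertex-sandbox": {
        "user:junior@example.com": {"roles/logging.viewer"},
    },
}


def effective_bindings(resource):
    """Union the bindings set on a resource with those of all its ancestors."""
    merged = defaultdict(set)
    node = resource
    while node is not None:
        for member, roles in DIRECT_BINDINGS.get(node, {}).items():
            merged[member] |= roles
        node = PARENTS[node]
    return dict(merged)
```

Calling `effective_bindings("projects/vertex-sandbox")` in this toy model shows the project picking up the folder-level and organization-level grants even though nobody set them on the project itself.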

Security teams often assume that low-privileged users are safe by default. That assumption breaks when service agents enter the picture. Service agents often carry broader permissions because they need to operate across services.

In the context of the Google Vertex AI vulnerability, researchers point out that permission chaining can occur. A user with limited rights may still be able to trigger workflows that result in service agent actions. Those actions may include access to storage buckets, model artifacts, or logs that contain sensitive information.

This is not confirmed exploitation. It is a realistic threat model.
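The permission chaining described above can be reasoned about with a small threat-model sketch. Every identity, workflow name, and permission set here is a hypothetical illustration, not a description of how Vertex AI resolves access internally.

```python
# Toy threat model; every identity, workflow, and permission set is hypothetical.
DIRECT_PERMISSIONS = {
    "user:junior@example.com": {"aiplatform.customJobs.create"},
}

# Workflows a principal may start, and the identity each workflow runs as.
CAN_TRIGGER = {
    "user:junior@example.com": {"training-pipeline"},
}
RUNS_AS = {
    "training-pipeline": "serviceAccount:vertex-agent@example.iam.gserviceaccount.com",
}

# Permissions the service identity has accumulated over time.
AGENT_PERMISSIONS = {
    "serviceAccount:vertex-agent@example.iam.gserviceaccount.com": {
        "storage.objects.get",
        "bigquery.tables.getData",
        "logging.logEntries.list",
    },
}


def indirect_permissions(principal):
    """Permissions the principal can exercise only through a triggered workflow."""
    direct = DIRECT_PERMISSIONS.get(principal, set())
    reachable = set()
    for workflow in CAN_TRIGGER.get(principal, set()):
        agent = RUNS_AS[workflow]
        reachable |= AGENT_PERMISSIONS.get(agent, set())
    return reachable - direct
```

A non-empty result for a supposedly low-privileged principal is the gap worth reviewing: the user never holds those permissions, yet can cause them to be used.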

Is this a vulnerability or a design risk?

This is where the conversation gets nuanced.

There is currently no public CVE tied to this Google Vertex AI vulnerability. Google has not issued an emergency advisory. That matters. It suggests this may fall into the category of misconfiguration risk rather than a software flaw.

However, misconfiguration is not harmless. Many of the largest cloud breaches in recent years were caused by permission errors, not bugs.

Security researchers often say misconfiguration is more dangerous than zero-days. Zero-days are rare and noisy. Misconfigurations are common and quiet.

Why AI platforms amplify IAM mistakes

AI platforms like Vertex AI introduce new workflows. Training jobs, pipelines, model registries, endpoints, and experiment tracking all need access to resources.

Each new workflow increases the number of permissions required. Each permission increases the blast radius if abused.

In this Google Vertex AI vulnerability discussion, the concern is not just about accessing Vertex AI. It is about what Vertex AI can access on your behalf. Storage. BigQuery datasets. Logs. Sometimes, even network resources.

That is why AI platform security risks deserve their own attention.

Real-world scenario that feels uncomfortably plausible

Imagine a mid-sized SaaS company. A junior engineer is given limited access to experiment with Vertex AI models. They can submit training jobs, but not manage IAM.

Over time, the team adds permissions so experiments stop failing. A storage role here. A logging role there. The service agent grows more powerful.

Now imagine that the engineer discovers a way to trigger a pipeline that deploys a model using a service agent with broader permissions. No exploit code. No malware. Just workflow abuse.

This is the type of scenario security teams worry about when discussing the Google Vertex AI vulnerability.

What we know and what remains unverified

It is important to be precise.

There is no public evidence of active exploitation in the wild. There is no confirmed CVE. Google has not acknowledged a security flaw.

The reporting highlights a potential privilege escalation pathway under certain IAM configurations. That means risk depends heavily on how an organization configures its environment.

In cybersecurity, uncertainty matters. This issue sits in the gray space between vulnerability and warning sign. That does not make it irrelevant. It makes it urgent to review.

Impact if abused at scale

If abused, the impact could range from minor to severe.

At the low end, attackers could access model metadata or logs. At the high end, they could manipulate training data, deploy altered models, or access sensitive datasets connected to AI pipelines.

For regulated industries like healthcare or finance, even limited data exposure can trigger compliance issues. That is why the Google Vertex AI vulnerability conversation is resonating across sectors.

Why least privilege keeps failing in cloud environments

Least privilege is easy to say and hard to maintain.

Teams move fast. Deadlines loom. Permissions get added temporarily and stay forever. Documentation lags behind reality.

Vertex AI adds another layer. MLOps teams often operate separately from cloud security teams. That separation creates blind spots.

This Google Vertex AI vulnerability discussion highlights those organizational gaps as much as technical ones.

How Google Cloud IAM inheritance complicates things

IAM inheritance is powerful, but it is also dangerous.

A role assigned at the project level may unintentionally grant access to new services introduced later. Vertex AI has evolved rapidly. Permissions that were safe two years ago may not be safe today.

Service agent permissions are particularly tricky because they are often created automatically. Security teams may not notice them unless actively monitoring.

That is a key lesson behind the Google Vertex AI vulnerability concern.
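One way to notice automatically created service agent bindings is to compare two snapshots of a project's allow policy. The sketch below assumes the policy is available as a dict in the JSON shape that `gcloud projects get-iam-policy --format=json` returns. The `gcp-sa-` domain check is a heuristic assumption that matches many, but not all, Google-managed service agents.

```python
def service_agent_bindings(policy):
    """Return (member, role) pairs whose member looks like a service agent."""
    pairs = set()
    for binding in policy.get("bindings", []):
        for member in binding.get("members", []):
            # Heuristic (assumption): many Google-managed service agents use a
            # "gcp-sa-<service>" email domain. Not every service agent does.
            domain = member.rpartition("@")[2]
            if member.startswith("serviceAccount:") and domain.startswith("gcp-sa-"):
                pairs.add((member, binding["role"]))
    return pairs


def new_service_agent_bindings(old_policy, new_policy):
    """Bindings present in the newer snapshot but absent from the older one."""
    return service_agent_bindings(new_policy) - service_agent_bindings(old_policy)
```

Running this on a schedule against stored snapshots gives security teams a short list of new service agent grants to review, rather than relying on someone spotting them by chance.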

Mitigation strategies that actually help

Even without a confirmed vulnerability, there are clear steps organizations should take.

First, audit the Vertex AI service agent roles. Identify what permissions they actually need.

Second, review who can trigger pipelines, deployments, and training jobs. These actions often indirectly invoke service agent privileges.

Third, apply IAM Conditions where possible. Time-based and resource-based conditions on role bindings reduce abuse potential.
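As an illustration of a time-based restriction, the sketch below builds a conditional role binding in the shape used by Google Cloud allow policies, with a CEL expression on `request.time`. The function name, the condition title and description, and the values in the usage note are hypothetical.

```python
from datetime import datetime, timezone


def time_bound_binding(role, member, expires_at):
    """Build an allow-policy binding that stops applying after `expires_at`.

    `expires_at` is expected to be a timezone-aware datetime.
    """
    stamp = expires_at.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return {
        "role": role,
        "members": [member],
        "condition": {
            "title": "temporary-access",
            "description": "Expires automatically; review before renewing.",
            # CEL expression that IAM evaluates on each request.
            "expression": f'request.time < timestamp("{stamp}")',
        },
    }
```

For example, `time_bound_binding("roles/aiplatform.user", "user:junior@example.com", datetime(2026, 3, 1, tzinfo=timezone.utc))` yields a binding that lapses on its own. Note that a policy containing conditional bindings must be written with policy version 3.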

These steps reduce exposure regardless of whether the Google Vertex AI vulnerability becomes a confirmed issue.
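The first two steps can be approximated with a single pass over a project's allow policy. This sketch assumes the policy is a dict in the JSON shape returned by `gcloud projects get-iam-policy PROJECT_ID --format=json`. The `JOB_TRIGGER_ROLES` set is an illustrative and incomplete assumption; custom roles can also carry job creation permissions, so treat the output as a starting point.

```python
# Illustrative assumption: predefined roles that commonly allow submitting
# Vertex AI jobs. Custom roles are not covered by this list.
JOB_TRIGGER_ROLES = {
    "roles/aiplatform.user",
    "roles/aiplatform.admin",
    "roles/editor",
    "roles/owner",
}


def is_vertex_service_agent(member):
    """True if the member's email domain matches the Vertex AI service agent pattern."""
    domain = member.rpartition("@")[2]
    return member.startswith("serviceAccount:") and domain.startswith("gcp-sa-aiplatform")


def audit_policy(policy):
    """Summarize roles held by Vertex AI service agents and members able to start jobs."""
    agent_roles = {}
    job_triggerers = set()
    for binding in policy.get("bindings", []):
        role = binding["role"]
        for member in binding.get("members", []):
            if is_vertex_service_agent(member):
                agent_roles.setdefault(member, set()).add(role)
            elif role in JOB_TRIGGER_ROLES:
                job_triggerers.add(member)
    return agent_roles, job_triggerers
```

Any role in `agent_roles` beyond what the service agent needs for its documented duties, combined with a long `job_triggerers` list, marks the combination most worth tightening.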

What Google says about securing Vertex AI

Google Cloud documentation emphasizes least privilege and regular IAM audits. It also recommends monitoring service account activity and using Cloud Asset Inventory to track changes.

Google has not labeled this issue a vulnerability. That distinction matters. It suggests the platform itself is functioning as designed, but a design can still be misused.

Security is a shared responsibility. That theme runs quietly through every discussion of the Google Vertex AI vulnerability.

Why this matters for MLOps teams

MLOps teams focus on model performance. Security teams focus on access control. When those worlds do not overlap, problems grow.

This Google Vertex AI vulnerability conversation should push organizations to bring MLOps and security closer together. Shared reviews. Shared audits. Shared accountability.

Is Vertex AI still secure?

Yes, when configured correctly.

The presence of potential privilege escalation pathways does not mean Vertex AI is unsafe. It means it is powerful. Powerful systems require disciplined management.

That nuance is often lost in headlines, but it is essential when evaluating the Google Vertex AI vulnerability claims.

Frequently Asked Questions

Is Google Vertex AI secure for enterprise use?

Yes. When best practices are followed, Vertex AI remains a secure enterprise-grade platform.

Can IAM misconfiguration cause privilege escalation?

Yes. This is one of the most common cloud security risks across providers.

What are service agents and service agent roles in Google Cloud?

Service agents are Google-managed service accounts that perform actions on behalf of services like Vertex AI. Service agent roles are the IAM roles granted to those accounts.

How can organizations reduce IAM abuse risk?

Regular audits, least privilege enforcement, and monitoring service account activity help significantly.

Hoplon Insight Box

Security Recommendation from Hoplon

Organizations using Vertex AI should treat service agents as first-class identities. Monitor them. Audit them. Review their permissions regularly. Misconfiguration is not a failure of tools. It is a failure of process.

The Google Vertex AI vulnerability discussion is not about panic. It is about awareness. Low privilege does not always mean low risk. AI platforms expand what cloud identities can do. That expansion demands stronger discipline, clearer ownership, and regular reviews.
