Copilot Studio Phishing Alert: New CoPhish Attack Exposed

Hoplon InfoSec

27 Oct, 2025

Imagine logging into a familiar service, one you trust, on a domain you recognize, and handing over access to your email, files, or calendar without realizing it. That’s exactly what’s happening with the latest attack vector targeting Copilot Studio: a technique dubbed CoPhish. In this article we’ll unpack how this attack works, why the threat is acute, and what organisations and users can do to secure their environments against token theft and malicious agents.

When companies adopt tools like Copilot Studio to boost productivity and automation, they often assume these platforms are safe by virtue of the vendor brand. But as the security researchers at Datadog Security Labs recently discovered, that very assumption can be weaponised.

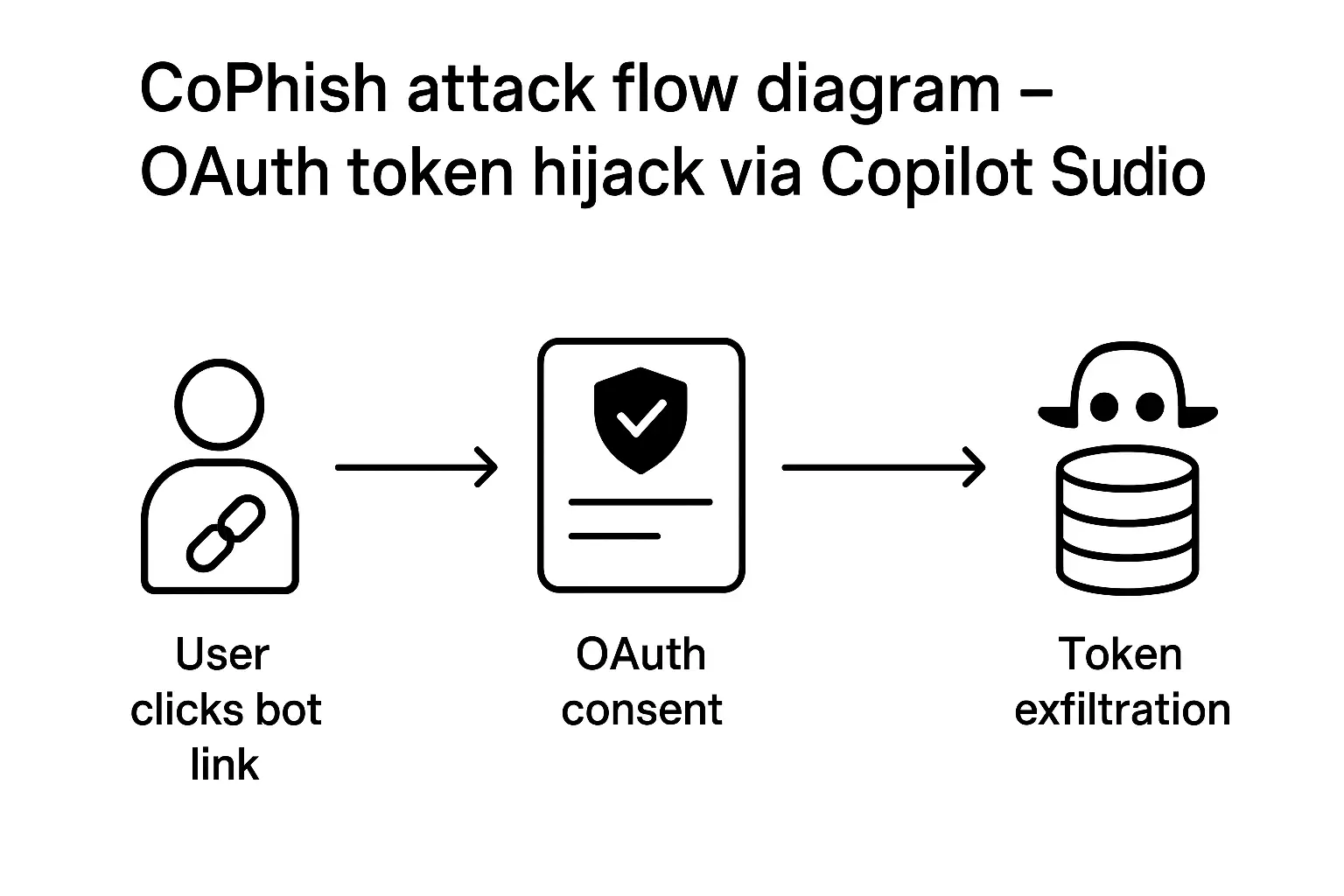

In the so-called CoPhish attack, threat actors exploit Copilot Studio’s low-code agent framework to carry out OAuth consent phishing, which leads to token theft and data exfiltration. In other words: trust in Copilot Studio becomes the attacker’s advantage.

Why does this matter? Because OAuth tokens are like keys to a castle. Once they are stolen, attackers can act as the user: reading emails, accessing files, sending messages on the user’s behalf, or establishing persistence. That puts organisations’ identity systems, cloud data and trust frameworks at risk. Below we’ll walk through how Copilot Studio becomes the conduit for OAuth token theft, what this means for enterprise security, and what you can do to defend your estate.

How the CoPhish Attack Works in Copilot Studio

What is OAuth consent phishing?

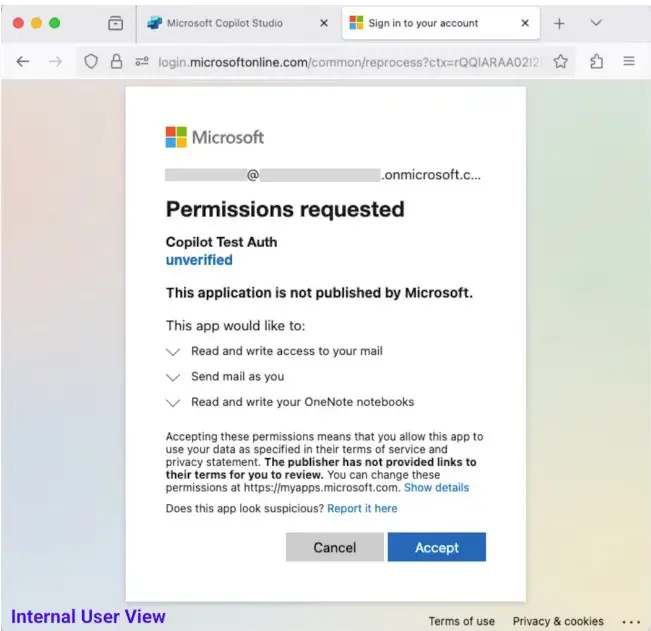

At its heart, OAuth consent phishing is a trick where the attacker gets you to grant permissions to an app whose purpose you don’t fully understand. Under the model of Microsoft Entra ID or similar identity systems, a malicious app asks “Do you consent to share X, Y, Z permissions?” If you say yes, the identity provider issues an access token to that app. The token gives the attacker the same rights the app holds, so if it asked for Mail.ReadWrite, the attacker can read and modify your mail.
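To make the link between consented scopes and what a stolen token can do more concrete, here is a minimal Python sketch that decodes the payload of a JWT-style access token and lists its delegated scopes. It assumes the token carries a space-separated `scp` claim, as delegated Entra ID access tokens typically do. The helper names and the demo token are hypothetical, and the signature is deliberately not verified, so treat this as a triage aid rather than a validation routine.

```python
import base64
import json


def _b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring any stripped padding."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def token_scopes(token: str) -> list[str]:
    """Return the scopes in a JWT's 'scp' claim (no signature check)."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("not a three-part JWT")
    claims = json.loads(_b64url_decode(parts[1]))
    # Assumption: 'scp' is a single space-separated string of scope names.
    return claims.get("scp", "").split()


def make_demo_token(scopes: list[str]) -> str:
    """Build an unsigned, fabricated token purely for demonstration."""
    def enc(obj: dict) -> str:
        raw = json.dumps(obj).encode()
        return base64.urlsafe_b64encode(raw).decode().rstrip("=")

    return ".".join([enc({"alg": "none"}), enc({"scp": " ".join(scopes)}), "sig"])
```

Calling `token_scopes(make_demo_token(["Mail.ReadWrite", "Notes.ReadWrite"]))` returns the two scope names, which is the list of actions an attacker holding that token could perform.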

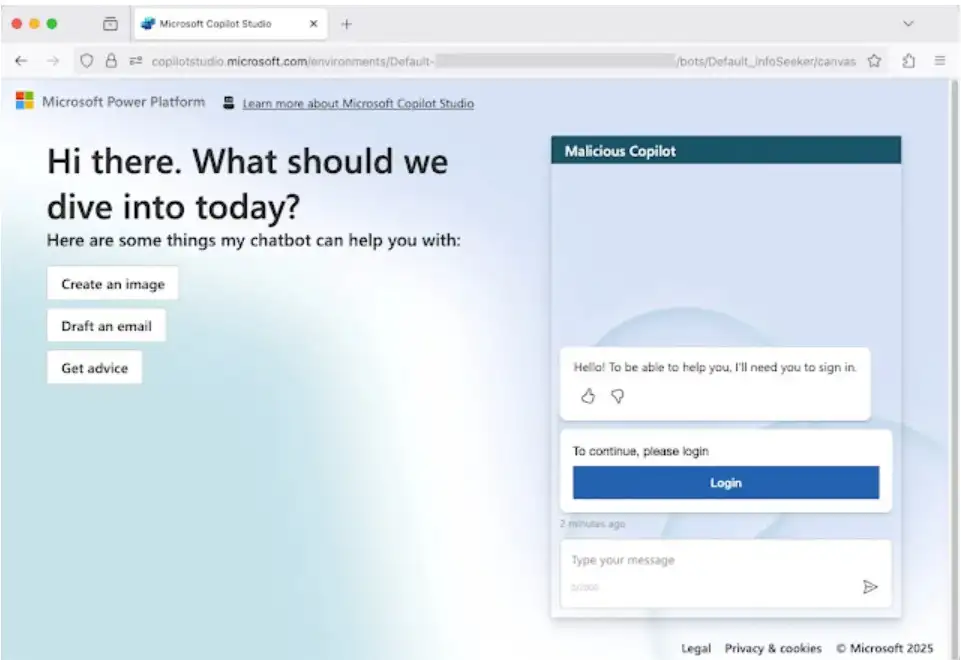

The role of Copilot Studio agents

Copilot Studio allows the creation of chatbots (or “agents”) hosted on a bona fide Microsoft domain (e.g., copilotstudio.microsoft.com), so the user interface feels legitimate. The key attack vector: an attacker sets up a malicious agent and configures its “Login” topic so that the user’s consent goes to a malicious OAuth application, after which the agent quietly exfiltrates the resulting token.

Step-by-step: How CoPhish plays out

1. The attacker builds an agent in Copilot Studio (in their own tenant or a compromised one) and enables the demo website, which gives a link like:

https://copilotstudio.microsoft.com/environments/Default-{tenant-id}/bots/Default_{bot-name}/canvas

Because it sits on Microsoft’s domain, the link appears trustworthy.

2. They configure the agent’s sign-in topic: when the user clicks “Login”, the agent calls an OAuth application they control. This application requests scopes (permissions) that appear innocuous or allowed under current policy. For an administrator target, even broad scopes like Files.ReadWrite.All may be requested.

3. The user (targeted via email, Teams message, or other channel) clicks the link, clicks “Login”, consents, and the flow completes via token.botframework.com (a legitimate step in Copilot Studio). Behind the scenes an HTTP request embedded in the system topic sends the User.AccessToken to attacker-controlled infrastructure. Because the token was sent from Microsoft infrastructure, the traffic may look benign in network logs.

4. With the token in hand, the attacker now has the user’s permissions. They can access emails, files, and calendars, create new apps, and so on, depending on the consent granted. The user is none the wiser and continues to chat with the agent.
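Because the demo-website link in step 1 follows a recognisable shape, defenders can look for it in inbound email or Teams messages. The Python sketch below extracts such links and marks those whose environment does not contain your own tenant ID. The function name and the "environment embeds the tenant ID" heuristic are assumptions based only on the link format shown above; real environment IDs may differ, so treat a match as a prompt for review rather than a verdict.

```python
import re

# Pattern derived from the demo-website link format shown in step 1.
AGENT_LINK = re.compile(
    r"https://copilotstudio\.microsoft\.com/environments/"
    r"(?P<environment>[^/\s]+)/bots/(?P<bot>[^/\s]+)/canvas",
    re.IGNORECASE,
)


def find_agent_links(text: str, own_tenant_id: str) -> list[dict]:
    """Extract Copilot Studio demo links and mark those outside our tenant."""
    findings = []
    for match in AGENT_LINK.finditer(text):
        environment = match.group("environment")
        findings.append({
            "url": match.group(0),
            "environment": environment,
            "bot": match.group("bot"),
            # Assumption: our own default environment ID embeds our tenant ID.
            "external": own_tenant_id.lower() not in environment.lower(),
        })
    return findings
```

A mail-filter rule or SOC notebook could call `find_agent_links(message_body, tenant_id)` and route any result with `external` set to `True` for manual inspection.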

Why this is especially dangerous

· The domain (copilotstudio.microsoft.com) is trusted, which reduces suspicion from users.

· Because the token exfiltration happens on Microsoft’s infrastructure, it may bypass usual network-monitoring filters.

· Even with Microsoft’s updated default consent policy (July 2025), which blocks many broad scopes for normal users, adversaries can still target internal users and especially administrators, who can consent to apps outside those blocks.

· The low-code nature of Copilot Studio means many organisations may not have strong governance over agent creation and topic modification, making the platform ripe for abuse.

Real-World Example: CoPhish in Action

Let’s say a mid-sized enterprise uses Microsoft 365 and has started pilots of Copilot Studio for internal automation. A threat actor sets up an agent titled “ExpenseHelperBot” and sends a message to a finance user: “Login here to reconcile your travel expenses.” The link is hosted on copilotstudio.microsoft.com, so the user clicks it, sees a familiar Microsoft-hosted chat page with a “Login” button, and consents.

Behind the scenes the malicious OAuth app requests Notes.ReadWrite and Mail.ReadWrite, and the user approves. The token is immediately exfiltrated to the attacker’s server. The attacker can now read and alter the user’s mail, harvest attachments, and work towards escalating privileges; if a send permission such as Mail.Send was also granted, they can send phishing emails as the user. The victim has no idea.

In another scenario, the attacker targets an Application Administrator. They send a link to a Copilot Studio agent titled “AdminConsoleHelper”. The admin clicks and consents to an external unverified application requesting Application.ReadWrite.All.

Since Application Administrators can consent to apps outside the default user consent policy, the attacker now obtains full read/write privileges over applications, which lets them maintain persistence for longer. These scenarios match the publicly reported “Scenario 1” and “Scenario 2” in the Datadog analysis.

The takeaway: even well-managed tenants can be vulnerable, especially via AI/automation tools that aren’t strictly locked down.

Why This Happens: Root Causes

Several underlying gaps enable this kind of attack:

1. Low-code/agent platforms lack governance: Copilot Studio allows agent creation, editing of system topics (including “sign-in”), and sharing externally. Without strong controls, this becomes a blind spot.

2. OAuth consent policies still have gaps: Microsoft’s default “microsoft-user-default-recommended” consent policy (July 2025) blocks many broad permissions for standard users, but administrators remain exempt and internal apps may still request allowed scopes.

3. Trusted domains reduce suspicion: Using a Microsoft-branded domain for the agent link makes the phishing vector more convincing. Users are less likely to hesitate when they see “copilotstudio.microsoft.com”.

4. Token exfiltration hidden inside legitimate flows: Because the agent triggers the HTTP request from Microsoft infrastructure, security monitoring tools may not flag it as suspicious.

5. Admin-consent remains a high-risk vector: Admins can approve external/unverified apps with broad permissions. Attackers exploiting that can take full control.

By understanding these root causes, we can craft better mitigations.

Mitigation & Security Best Practices for Copilot Studio

If your organisation uses Copilot Studio, the time to act is now. Here are recommended steps to secure your environment:

1. Enforce a strong application-consent policy

Don’t rely solely on Microsoft’s defaults. Customize a policy that blocks or requires review for any app requesting high-risk scopes like Mail.ReadWrite, Files.ReadWrite.All, Sites.ReadWrite.All, Application.ReadWrite.All. Track consent logs for unusual activity.
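As an illustration of the review logic described above, the Python sketch below triages a consent request by comparing its requested scopes with the high-risk list named in this section. It is not an Entra ID consent policy; enforcement belongs in the tenant’s own consent settings. The function name, the `publisher_verified` flag, and the three outcomes are hypothetical choices made for this example.

```python
# High-risk scopes named in this section, lower-cased for comparison.
HIGH_RISK_SCOPES = {
    "mail.readwrite",
    "files.readwrite.all",
    "sites.readwrite.all",
    "application.readwrite.all",
}


def review_consent(requested_scopes: list[str], publisher_verified: bool):
    """Return (decision, risky_scopes) for a consent request.

    Hypothetical rule set: risky scopes from an unverified publisher are
    blocked; risky scopes or an unverified publisher alone go to review.
    """
    risky = sorted(s for s in requested_scopes if s.lower() in HIGH_RISK_SCOPES)
    if risky and not publisher_verified:
        return "block", risky
    if risky or not publisher_verified:
        return "review", risky
    return "allow", []
```

For example, `review_consent(["Mail.ReadWrite", "User.Read"], publisher_verified=False)` yields a `"block"` decision together with the single risky scope.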

2. Restrict agent creation and sharing

Limit the number of users who can create agents in Copilot Studio. Prevent wide external sharing of demo websites unless strictly vetted. Ensure that only trusted developers with least privilege can build and share agents. Monitor for unexpected agent creation events (BotCreate, BotComponentUpdate).

3. Monitor OAuth consent events and agent modifications

Use your SIEM or cloud audit logs to watch for events like: “Consent to application”, “BotCreate”, “BotComponentUpdate”, and modifications to sign-in topics in agents. Flag consent requests from unverified apps or unusual scopes.
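A simple way to start is to filter exported audit records for the event names listed above. The Python sketch below assumes the records have already been normalised into dictionaries with an `operation` key; that key and the sample data shape are hypothetical, so map them onto whatever field your SIEM or audit export actually uses.

```python
from collections import Counter

# Event names taken from this section.
WATCHED_OPERATIONS = {"Consent to application", "BotCreate", "BotComponentUpdate"}


def flag_events(records: list[dict]) -> list[dict]:
    """Keep only the records whose operation is on the watch list."""
    # Assumption: each record exposes its event name under 'operation'.
    return [r for r in records if r.get("operation") in WATCHED_OPERATIONS]


def summarize(records: list[dict]) -> Counter:
    """Count watched events per operation, e.g. for a daily report."""
    return Counter(r["operation"] for r in flag_events(records))
```

A sudden rise in the `BotComponentUpdate` count, or any "Consent to application" entry tied to an unfamiliar app, would then be a natural trigger for manual review.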

4. Educate users and administrators

Ensure non-technical users recognise that even trusted domains can host malicious links. Train admins to scrutinise applications they are asked to approve. Simulate phishing scenarios with Copilot-style lures. Combine education with technical controls.

5. Revoke compromised OAuth tokens swiftly

If suspicious consent is detected, immediately revoke the token or block the application. Use conditional access and revoke sessions. Review app registrations to remove any malicious or unused ones.
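For tenants that automate response through Microsoft Graph, the Python sketch below builds two requests: one to revoke a user’s sign-in sessions and one to delete a delegated permission grant. It only constructs the requests; sending them requires an administrator token with suitable Graph permissions, which is left out here. The function names and the placeholder IDs are hypothetical. Note also that revoking sessions invalidates refresh tokens, while an access token that has already been issued typically remains usable until it expires.

```python
import urllib.parse
import urllib.request

GRAPH = "https://graph.microsoft.com/v1.0"


def _request(method: str, path: str, admin_token: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated Graph request."""
    return urllib.request.Request(
        GRAPH + path,
        method=method,
        headers={"Authorization": f"Bearer {admin_token}"},
    )


def revoke_sessions_request(user_id: str, admin_token: str) -> urllib.request.Request:
    """Request that revokes the user's sign-in sessions and refresh tokens."""
    user = urllib.parse.quote(user_id, safe="")
    return _request("POST", f"/users/{user}/revokeSignInSessions", admin_token)


def delete_grant_request(grant_id: str, admin_token: str) -> urllib.request.Request:
    """Request that removes a delegated permission grant (user consent)."""
    grant = urllib.parse.quote(grant_id, safe="")
    return _request("DELETE", f"/oauth2PermissionGrants/{grant}", admin_token)
```

Keeping construction separate from sending makes it easy to log or review exactly what a response playbook is about to do before it runs.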

6. Apply least-privilege access model

Even for productivity tools, ensure agents and apps request minimum privileges. Avoid broad scopes where read/write isn’t strictly necessary. Restrict admin roles and apply multi-factor authentication, conditional access and just-in-time elevation for privileged users.

7. Periodically review platform-specific controls

Stay informed of upcoming changes: Microsoft announced further consent-policy updates in late October 2025 which will narrow the gap for standard users. Ensure your environment is prepared for new defaults and any additional safeguards introduced by Microsoft for Copilot Studio.

Detecting a Malicious Agent or OAuth Consent Phishing

How can you spot when someone may be attempting a CoPhish attack in your organisation? Here are key indicators:

· A Copilot Studio agent has a public demo website link that wasn’t approved or logged by your governance process.

· A user receives an unexpected link to a “Microsoft” service (especially in Teams or email) that asks them to “Login” before using a bot.

· Audit logs show a “Consent to application” event for an external/unverified app, especially one requesting high-risk scopes.

· Changes in the bot’s “sign-in” topic or other system topics (e.g., addition of “HTTP Request” action) in a Copilot agent.

· Network traffic shows token-sized strings being sent to unusual endpoints, although this may be concealed behind Microsoft traffic.

· Unusual application registrations in your tenant associated with Copilot Studio agents, or unexpected reply URLs like token.botframework.com/.auth/web/redirect.

If you encounter these signs, take immediate action to suspend the agent, review the app registration, assess exposed permissions, and revoke tokens.
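One of the indicators above, a system topic that both references the user’s token and calls out over HTTP, lends itself to a simple text heuristic. The Python sketch below scans an exported topic definition for the `User.AccessToken` variable and reports any URL hosts that are not on an allowlist. The export format, the function name, and the allowlist are assumptions made for illustration; the check is purely textual and will miss obfuscated or indirectly constructed URLs.

```python
import re
from urllib.parse import urlparse

URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")


def suspicious_topic_hosts(topic_text: str, allowed_hosts: set[str]) -> list[str]:
    """Return unapproved hosts in a topic that references User.AccessToken."""
    if "user.accesstoken" not in topic_text.lower():
        return []  # The topic never touches the token, so nothing to flag.
    hosts = {urlparse(url).hostname or "" for url in URL_PATTERN.findall(topic_text)}
    # Assumption: allowed_hosts contains lower-case host names.
    return sorted(h for h in hosts if h and h not in allowed_hosts)
```

A non-empty result for a sign-in topic is a reasonable cue to suspend the agent and inspect the topic by hand, in line with the response steps above.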

Why Organisations Must Act Now

The convergence of AI-agent platforms, low-code automation, and identity access is a dangerous frontier. Tools like Copilot Studio were designed for productivity, but productivity without governance becomes risk.

The CoPhish attack shows how effectively threat actors can weaponise familiar domains, trusted workflows, and subtle redirections. For an organisation, the cost isn’t just the compromised token; it’s the downstream activity: compromised mailboxes, data leaks, identity takeover, lateral movement.

One practitioner I spoke with described it this way: "It’s as if you handed the attacker a guest key to your main office but told them it’s only for the coffee machine." The attacker proceeds to walk into executive offices, plant bugs, and exit with sensitive documents. Once the token is in their hands, they already have the door open.

Given the pace of adoption for Copilot-style agents in enterprises, now is the moment to review your governance, identity controls, and user awareness. Waiting until an incident occurs is far costlier than locking down your agent/consent ecosystem now.

Wrap-up

In this era of hybrid cloud, AI agents and identity-centric threats, Copilot Studio OAuth token exfiltration isn’t just a click-bait headline; it’s a wake-up call. The CoPhish attack leverages the trusted interface of Copilot Studio agents, twists OAuth consent into a trap, and quietly steals tokens that give access to sensitive data and systems. But the good news is: the defensive steps are clear.

By enforcing robust application consent policies, restricting agent creation/sharing, monitoring audit events, revoking compromised tokens and educating your people, you can greatly reduce risk.

If you control or help secure a Copilot Studio environment, don’t treat this as a theoretical threat. Assume attackers will try this method. Review your policies, rigorously audit your agents and app registrations, and make sure everyone in the enterprise knows: even benign-looking chatbots can carry dangers. The stakes are real. The time to act is now.

Secure your Copilot Studio estate before the next token is stolen.

FAQs

Q1: What is Copilot Studio and how does it relate to OAuth token theft?

Copilot Studio is Microsoft’s low-code platform for building chatbots (agents) that run on copilotstudio.microsoft.com. Attackers can exploit its “sign-in” topic to embed OAuth flows, tricking users into granting permissions to malicious apps, and then exfiltrating the returned tokens.

Q2: What does CoPhish mean and why is it dangerous in the context of Copilot Studio?

CoPhish refers to the new phishing technique that wraps an OAuth consent attack inside a Copilot Studio agent. Because the domain is Microsoft-branded and the interface looks trustworthy, users are more likely to consent. Once the token is stolen, attackers can act as the user within whatever permissions were granted.

Q3: How can I detect malicious Copilot Studio agents in my organisation?

Look for unapproved demo website links, unexpected agent creation events, unusual “Consent to application” entries in your audit logs, modifications to agent sign-in topics, and OAuth app registrations with high-risk scopes. Monitoring these indicators gives early warning of potential token hijack.

Q4: What are the best practices to prevent OAuth consent phishing in Copilot Studio?

Enforce a strong application-consent policy blocking high-risk permissions, restrict who can create/share agents, monitor agent/audit activity, use least-privilege for apps, require multi-factor authentication especially for admins, and regularly review app-registrations and tokens for compromise.

Q5: If a token is compromised via Copilot Studio, what steps should I take?

Immediately revoke the token or disable the compromised app registration, disable the malicious agent, review what data the token had access to (email, files, etc.), check for lateral movement or unusual activity, enforce password resets or re-authentication if necessary, then review your governance and consent policy to close the gap.
