Mobile App and SDK-Based Security Threats Explained for 2026

Hoplon InfoSec

01 Jan, 2026

Mobile apps are no longer self-contained. Most are assembled from many SDKs that handle analytics, advertising, crash reporting, payments, and user engagement. That convenience comes at a price.

Over the last few years, security threats that originate in mobile apps and their SDKs have grown from a niche concern into a major one. App owners now face a confusing mix of genuine vulnerabilities, false alarms, and outright misinformation.

This article cuts through the noise. It explains what is known, what remains uncertain, and how SDK risks affect real apps, real users, and real businesses.

Understanding Security Risks in Mobile Apps and SDKs

Mobile app and SDK-based security threats are risks that originate in the third-party libraries embedded in your app rather than in your own code. An SDK usually runs with the same permissions as the app that hosts it.

The problem starts with that shared trust model. The app owner remains responsible if an SDK misbehaves, is compromised, or collects more data than it discloses.

Invisibility is what makes this dangerous. Teams rarely review SDK code line by line, especially when it comes from a well-known vendor. Many mobile SDK security risks stay hidden until a breach, an audit, or a regulator brings them to light.

Why SDKs Make Mobile Apps More Vulnerable to Attack

Every SDK expands an app's attack surface. It adds network connections, background services, and data flows that developers may never fully understand.

An analytics SDK might collect device information. An ad SDK might request location access. A push notification SDK might hold a connection open at all times. Each of these behaviors can give attackers a way in.

In several real-world studies, vulnerabilities in embedded SDKs were found not because attackers broke into the app directly, but because the SDK communicated with insecure endpoints or used flawed encryption.
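One quick way to look for insecure endpoints in your own builds is to scan strings extracted from the compiled app for URLs that use plain `http://`. The sketch below assumes you already have those strings (for example, from a decompiler or a strings dump); the function name and sample hosts are hypothetical, and a hit is a lead to investigate rather than proof of a flaw.

```python
import re

# Matches absolute URLs; group 1 is the scheme, group 2 the host.
URL_PATTERN = re.compile(r"\b(https?)://([A-Za-z0-9.\-]+)(?::\d+)?[^\s\"'<>]*")

def find_cleartext_endpoints(strings):
    """Return the sorted, lowercased hosts that are referenced over plain http://."""
    cleartext = set()
    for s in strings:
        for match in URL_PATTERN.finditer(s):
            scheme, host = match.group(1), match.group(2).lower()
            if scheme == "http":
                cleartext.add(host)
    return sorted(cleartext)

# Hypothetical strings pulled from an app binary.
extracted = [
    "https://api.example-sdk.com/v1/config",
    "http://collect.example-ads.net/track?id=1",
    "endpoint=http://LOGS.example-sdk.com:8080/upload",
]
print(find_cleartext_endpoints(extracted))
```

Each flagged host would then be checked against the SDK's documentation and the platform's cleartext-traffic settings.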

The Supply Chain Problem in Mobile Apps

Mobile app supply chain security is one of the biggest concerns in app development today. Developers trust SDK vendors, and users trust developers. Attackers exploit that chain of trust.

A single bad SDK update can compromise thousands of apps overnight. This is not just theory: security teams have documented cases in which SDK updates quietly introduced new tracking logic or insecure settings.

Increasingly, SDK-based mobile security risks are treated as supply chain risks rather than isolated bugs.

The Most Common SDK Security Risks

To understand the risk, you need to know how SDKs fail in practice. These are the patterns that show up most often in mobile app security audits.

Handling Data Insecurely

Many third-party SDK flaws involve how data is stored or transmitted: plain-text logging, weak encryption, or insecure caching.
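For the logging side of this problem, one mitigation in code you control is to mask identifiers before anything is written to a log. This is a minimal sketch using Python's standard `logging` module; the `RedactingFilter` class and its two patterns are illustrative assumptions, not a complete list of sensitive data, and a filter like this cannot fix logging inside a closed-source SDK.

```python
import logging
import re

# Hypothetical patterns for identifiers that should never reach plain-text logs.
REDACTIONS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "<email>"),
    (re.compile(r"\b[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-"
                r"[0-9a-fA-F]{4}-[0-9a-fA-F]{12}\b"), "<device-id>"),
]

class RedactingFilter(logging.Filter):
    """Rewrites each record's final message so matching identifiers are masked."""

    def filter(self, record):
        message = record.getMessage()  # apply %-style args first
        for pattern, replacement in REDACTIONS:
            message = pattern.sub(replacement, message)
        # Store the already-formatted text so handlers do not re-apply args.
        record.msg, record.args = message, ()
        return True  # keep the record, just with redacted content
```

Attaching it to a handler, for example `handler.addFilter(RedactingFilter())`, applies it to every record that handler emits, including records propagated from child loggers.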

Mobile telemetry data exposure often occurs when SDKs collect behavioral data and transmit it without adequate protection. Even data that looks harmless on its own can become sensitive when combined with other sources.

SDK data leaks are especially dangerous under privacy laws, because intent often does not matter: exposure alone can lead to penalties.

Excessive Permissions and Silent Abuse

Another common problem is SDK permission misuse: some SDKs request permissions that go beyond what their stated function requires.

For example, an analytics SDK that collects precise location data with no clear justification is a red flag. The behavior may not be malicious, but it still increases risk.

Over time, insecure mobile app SDKs can normalize over-broad access, which makes genuine abuse harder for users and auditors to spot.

Weak Authentication and Hardcoded Secrets

Security teams keep finding hardcoded API keys, tokens, and endpoints in SDKs. Once attackers extract them, they can abuse backend services or impersonate legitimate apps.
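A simple pattern scan over decompiled SDK sources or extracted strings catches many of these. The rules below are a hedged sketch: the `AIza` and `AKIA` prefixes are widely used heuristics for Google API keys and AWS access key IDs, while the generic rule and the `scan_for_secrets` helper are hypothetical, so expect both false positives and misses.

```python
import re

# Commonly cited key formats plus a generic "name = value" heuristic.
# Illustrative only; real scanners ship far larger rule sets.
SECRET_PATTERNS = {
    "google_api_key": re.compile(r"AIza[0-9A-Za-z_\-]{35}"),
    "aws_access_key_id": re.compile(r"AKIA[0-9A-Z]{16}"),
    "generic_secret": re.compile(
        r"(?i)(?:api[_-]?key|secret|token)\s*[:=]\s*[\"']([^\"']{12,})[\"']"
    ),
}

def scan_for_secrets(text):
    """Return (rule_name, matched_text) pairs for every suspected secret in text."""
    findings = []
    for name, pattern in SECRET_PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append((name, match.group(0)))
    return findings

sample = 'String KEY = "AKIAABCDEFGHIJKLMNOP"; token = "abcdef123456789"'
for rule, hit in scan_for_secrets(sample):
    print(rule, hit)
```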

This is not always the result of carelessness. Sometimes SDK vendors prioritize ease of integration over secure design, and that shortcut helps attackers.

Are Mobile SDK Security Risks Real or Overblown?

This is where things get confusing. Developers often encounter alarming blog posts or viral threads that claim massive SDK exploits without offering any proof.

Some of these are what we call "unverified mobile app security claims": they describe possible behavior without evidence of actual exploitation.

Dismissing everything as fake is just as dangerous. Silent SDK abuse does not always produce public incidents or CVE entries.

The truth lies in between. Some reports of mobile app vulnerabilities are fake and some are real; the hard part is verifying a mobile security threat before acting on it.

How Attackers Exploit SDK Weaknesses in Practice

Attackers rarely need to break encryption or reverse-engineer entire apps. They look for the path of least resistance.

One common tactic is intercepting SDK network traffic. If an SDK sends data over plain HTTP, accepts any certificate, or omits certificate pinning, an attacker positioned on a compromised network may be able to read or alter that traffic.
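Pinning means the client accepts a server only if its certificate matches a value recorded in advance. The sketch below pins the SHA-256 fingerprint of the whole leaf certificate, which is a simplification: mobile platforms usually pin a hash of the public key instead (Android's network security config and OkHttp's `CertificatePinner` both work that way). The helper names are hypothetical, and the code is meant for probing endpoints from a workstation, not as an in-app implementation.

```python
import base64
import hashlib
import socket
import ssl

def cert_pin(der_cert: bytes) -> str:
    """Base64-encoded SHA-256 fingerprint of a DER-encoded certificate."""
    return base64.b64encode(hashlib.sha256(der_cert).digest()).decode("ascii")

def matches_pin(der_cert: bytes, allowed_pins: set) -> bool:
    """True if the certificate's fingerprint is one of the pinned values."""
    return cert_pin(der_cert) in allowed_pins

def fetch_leaf_cert(host: str, port: int = 443) -> bytes:
    """Open a normally validated TLS connection and return the server's leaf certificate."""
    context = ssl.create_default_context()  # keeps chain and hostname checks on
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert(binary_form=True)
```

In use, you would record the pin from a known-good connection and compare later ones, for example `matches_pin(fetch_leaf_cert("api.example-sdk.com"), known_pins)`, where the host is a placeholder. `fetch_leaf_cert` still performs normal chain and hostname validation; pinning is an extra check on top of it, not a replacement.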

Another tactic targets update mechanisms. If an SDK's update process does not verify integrity, attackers can inject modified code into many apps at once.
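The core of an integrity check is small: hash the downloaded artifact and compare the result with a digest obtained through a separate, trusted channel. This is a minimal sketch with hypothetical function names; in a real pipeline you would usually rely on your build tool's own mechanism (Gradle, for example, has a dependency verification feature) and on signatures rather than bare hashes.

```python
import hashlib
import hmac

def sha256_of_chunks(chunks):
    """Hex SHA-256 over an iterable of byte chunks."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()

def sha256_of_file(path, chunk_size=65536):
    """Stream a file through SHA-256 without loading it all into memory."""
    with open(path, "rb") as f:
        return sha256_of_chunks(iter(lambda: f.read(chunk_size), b""))

def digest_matches(actual_hex, expected_hex):
    """Case-insensitive, constant-time comparison of two hex digests."""
    return hmac.compare_digest(actual_hex.lower(), expected_hex.strip().lower())

def verify_artifact(path, expected_hex):
    """True only if the SDK artifact at path matches the pinned digest."""
    return digest_matches(sha256_of_file(path), expected_hex)
```

An update whose digest does not match should be rejected outright rather than logged and installed anyway.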

These techniques turn mobile SDK vulnerabilities from theoretical risks into real attack paths.

Example: A Quiet SDK Privacy Incident

A few years ago, a retail app passed every store review and functional test. Nothing seemed wrong. But during a routine security audit for SDK risks, analysts noticed outbound traffic they could not explain.

The source turned out to be an advertising SDK that was collecting device fingerprints in ways its documentation did not disclose. There was no breach, but the behavior violated privacy rules.

There was no CVE and no public notice, but there was real compliance risk. This is how SDKs can create mobile app compliance risk without making headlines.

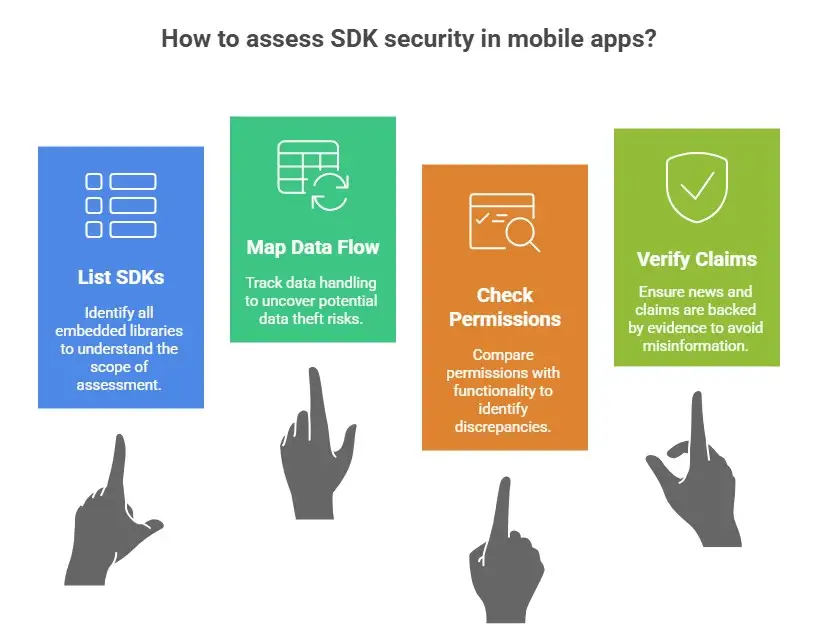

Finding and Confirming SDK-Based Threats, Step by Step

Step 1: Inventory Every SDK

Many teams underestimate how many SDKs their apps contain. List every embedded library, including transitive dependencies pulled in by other libraries.

This inventory is the foundation for assessing how safe the SDKs in your mobile apps are.
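For Android projects built with Gradle, the inventory can be seeded from the dependency tree printed by `./gradlew :app:dependencies --configuration releaseRuntimeClasspath`. The parser below is a sketch that assumes that text format and a module named `:app` (both assumptions to adjust); it records each `group:artifact`, the resolved version (the value after `->` when Gradle substituted one), and whether the library appears only transitively. iOS projects need a different source, such as a CocoaPods `Podfile.lock` or Swift Package Manager's `Package.resolved`.

```python
import re

# group:artifact, optional :declared version, optional "-> resolved" version.
COORD = re.compile(
    r"(?P<group>[\w.\-]+):(?P<artifact>[\w.\-]+)"
    r"(?::(?P<declared>[\w.\-]+))?"
    r"(?:\s*->\s*(?P<resolved>[\w.\-]+))?"
)
BRANCH = re.compile(r"[+\\]--- ")  # "+--- " or "\--- " tree markers

def parse_dependency_tree(text):
    """Map 'group:artifact' to its version and whether it is only transitive."""
    inventory = {}
    for line in text.splitlines():
        branch = BRANCH.search(line)
        if not branch:
            continue  # headers, blank lines, legends
        match = COORD.match(line, branch.end())
        if not match:
            continue  # e.g. "project :core" module entries
        key = f"{match['group']}:{match['artifact']}"
        version = match["resolved"] or match["declared"] or "unknown"
        transitive = branch.start() > 0  # top-level entries start at column 0
        entry = inventory.setdefault(key, {"version": version, "transitive": transitive})
        # A library declared directly anywhere counts as direct.
        entry["transitive"] = entry["transitive"] and transitive
    return inventory

tree = "\n".join([
    "releaseRuntimeClasspath - Runtime classpath",
    "+--- com.squareup.okhttp3:okhttp:4.12.0",
    "|    \\--- com.squareup.okio:okio:3.6.0 -> 3.9.0",
    "+--- project :core",
    "\\--- com.example.ads:ads-sdk -> 2.1.0",
])
for name, info in parse_dependency_tree(tree).items():
    print(name, info)
```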

Step 2: Map Data Flows

Track what data each SDK collects, stores, and transmits. Pay close attention to background services, personal data, and device identifiers.

This step often reveals unexpected paths by which data leaves the app.
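Once traffic has been captured (for example, through an intercepting proxy on a test device) and attributed to individual SDKs, the map itself can be built mechanically. In this sketch the request format, the attribution step, and the `SENSITIVE_FIELDS` list are all assumptions to adapt to your own data.

```python
from collections import defaultdict

# Hypothetical field names treated as personal data or device identifiers.
SENSITIVE_FIELDS = {"email", "phone", "lat", "lon", "advertising_id", "android_id", "idfa", "ip"}

def map_data_flows(requests):
    """Build {sdk: {host: sorted sensitive field names}} from captured requests.

    Each request is a dict with "sdk", "host" and "params" (the fields sent);
    attributing traffic to an SDK is assumed to have happened upstream.
    """
    flows = defaultdict(lambda: defaultdict(set))
    for req in requests:
        sent = {key.lower() for key in req["params"]}
        # Hosts with no sensitive fields still appear, with an empty list.
        flows[req["sdk"]][req["host"]] |= sent & SENSITIVE_FIELDS
    return {
        sdk: {host: sorted(fields) for host, fields in hosts.items()}
        for sdk, hosts in flows.items()
    }

captured = [
    {"sdk": "analytics", "host": "t.example-analytics.com",
     "params": {"event": "open", "advertising_id": "x"}},
    {"sdk": "analytics", "host": "t.example-analytics.com", "params": {"Lat": 1, "lon": 2}},
    {"sdk": "crash", "host": "c.example-crash.io", "params": {"stack": "..."}},
]
print(map_data_flows(captured))
```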

Step 3: Look at Permissions and Behavior

Compare the permissions each SDK requests against its documented functionality. Look for mismatches and access that has no explanation.

SDK tracking and privacy risks often surface during this comparison.
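On Android, the merged manifest produced at build time includes permissions contributed by library manifests, so it is a natural input for this comparison. The sketch below assumes you have that manifest as plain-text XML (the copy inside an APK is binary and must be decoded first) and a hand-maintained set of permissions that your SDKs' documentation justifies; the function names are hypothetical.

```python
import xml.etree.ElementTree as ET

ANDROID_NS = "{http://schemas.android.com/apk/res/android}"
PERMISSION_TAGS = ("uses-permission", "uses-permission-sdk-23")

def declared_permissions(manifest_xml):
    """All android:name values on permission-request elements in the manifest."""
    root = ET.fromstring(manifest_xml)
    return {
        elem.get(ANDROID_NS + "name")
        for tag in PERMISSION_TAGS
        for elem in root.iter(tag)
    }

def unexplained_permissions(manifest_xml, documented):
    """Permissions in the manifest that no SDK documentation accounts for."""
    return sorted(declared_permissions(manifest_xml) - set(documented))

manifest = """<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.shop">
  <uses-permission android:name="android.permission.INTERNET"/>
  <uses-permission android:name="android.permission.ACCESS_FINE_LOCATION"/>
  <application/>
</manifest>"""
print(unexplained_permissions(manifest, {"android.permission.INTERNET"}))
```

Each unexplained permission then needs an owner: either the vendor documents why it is needed, or it is removed.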

Step 4: Use Evidence to Back Up Claims

When you see alarming news online, ask one question: is there verifiable evidence?

If no official source confirms it, record the uncertainty. A statement such as "This appears to be unverified or misleading information, and no official sources confirm its authenticity" is a cue to investigate calmly, not to panic.

Mitigations That Actually Work

Reducing mobile app and SDK security risk is not about banning SDKs. It is about controlling them.

Start by making penetration testing of third-party SDKs part of your release cycle. It uncovers hidden behaviors before attackers do.

Use runtime monitoring to detect abnormal SDK activity, and pair it with regular reviews of vendor documentation and update notes.
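A basic form of runtime monitoring compares observed SDK traffic with a baseline recorded during known-good test runs. This sketch assumes each network event has already been attributed to an SDK; the baseline structure, the 1.5x size tolerance, and the names are hypothetical choices, not recommended thresholds.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class NetworkEvent:
    sdk: str
    host: str
    bytes_sent: int

def find_anomalies(baseline, events, size_tolerance=1.5):
    """Compare observed SDK traffic against a recorded baseline.

    baseline maps an SDK name to {"hosts": set of hosts, "max_bytes": largest
    payload seen}. Returns readable findings; an empty list means nothing stood out.
    """
    findings = []
    for event in events:
        profile = baseline.get(event.sdk)
        if profile is None:
            findings.append(f"{event.sdk}: not in baseline (contacted {event.host})")
            continue
        if event.host not in profile["hosts"]:
            findings.append(f"{event.sdk}: new endpoint {event.host}")
        if event.bytes_sent > profile["max_bytes"] * size_tolerance:
            findings.append(f"{event.sdk}: {event.bytes_sent} bytes to {event.host} exceeds baseline")
    return findings

baseline = {"crash": {"hosts": {"c.example-crash.io"}, "max_bytes": 4000}}
events = [
    NetworkEvent("crash", "c.example-crash.io", 3000),
    NetworkEvent("crash", "x.example-unknown.net", 9000),
    NetworkEvent("ads", "a.example-ads.com", 100),
]
for finding in find_anomalies(baseline, events):
    print(finding)
```

Flagged events are prompts for review: a new endpoint may simply reflect a documented vendor change, which is why this check pairs with reading release notes.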

Above all, treat SDK-based data leakage prevention as an ongoing process, not a one-time task.

Legal and Compliance Pressure You Can't Ignore

Regulators increasingly hold app owners responsible for SDK misuse. Under regimes such as GDPR and CCPA, pointing to a third party as the cause generally does not shield the app owner.

Mobile app supply chain security failures have already led to fines and forced disclosures.

That fact alone makes mobile app and SDK-based security threats a board-level concern, not just a technical one.

Questions and Answers

Is it safe to use third-party SDKs in mobile apps?

Some are safe; others put you at risk. Safety depends on design, configuration, and ongoing monitoring.

Can SDKs leak user information?

Yes. Many reports document SDK data leaks, especially from analytics and advertising libraries.

How do attackers exploit mobile SDKs?

They target weak network security, excessive permissions, and unsafe update mechanisms.

How do you audit SDKs in Android and iOS apps?

Combine static analysis, runtime testing, and network inspection as part of a structured mobile app security audit for SDK risks.

Final Thoughts

Mobile apps today are parts of ecosystems, not standalone products. Whether you realize it or not, every SDK you add to your project becomes part of your security story.

Not every mobile app or SDK-based threat is loud or dramatic. Many are quiet, subtle, and discovered too late. The smartest teams act before news stories or panicked posts force their hand.
